Enterprise AI adoption moved fast. Speed mattered. Shipping mattered. Getting AI into production mattered. That phase is over.

Security leaders are now asking a harder question: whether the AI already embedded in security operations is safe, explainable, and aligned with how modern SOC teams actually work. The focus has shifted from adoption to trust, specifically explainability, governance, and operational fit.

That shift is visible at the executive level. In a recent CNBC discussion on enterprise AI strategy, Deloitte’s Steve Goldbach noted that CEOs are allocating roughly 11 percent of total revenue to transformation initiatives, often framed as 2026 priorities. Only about 30 percent of those efforts reach their intended goals. Nearly 70 percent fall short.

The failure pattern is familiar. Every January, gym memberships spike as people commit to sweeping change. By February, most of those plans fade. The problem is rarely intent or budget. It is the leap from zero to full transformation without a plan that fits real behavior.

AI-driven security programs are failing for the same reason.

When AI Adoption Outruns Security Operations

Security operations are already complex. Analysts move between dashboards, alerts, workflows, and data sources that promise visibility but demand constant interpretation. When AI is added without structure or understanding, complexity grows faster than clarity.

More alerts surface. More AI-generated summaries appear. Analysts spend more time validating outputs than responding to threats. This slows analysis, decision-making, and ultimately the ability to respond effectively to security concerns. The tooling looks advanced, but outcomes degrade.

This gap explains why so many AI initiatives stall after early deployment.

The CNBC discussion highlighted progressive testing as a corrective measure. Executives described controlled rollouts, limited scope, and continuous validation against real operating conditions. AI features earn trust only when they prove value incrementally inside existing workflows. That discipline matters even more in cybersecurity, where mistakes translate directly into risk.

The Early Risks of Opaque AI in Cybersecurity

The current wave of enterprise AI, particularly large language models, brought real promise for optimizing security operations. Faster analysis, automated triage, and generated workflows moved quickly from concept to production.

Many of these systems arrived as closed platforms with limited transparency.

Security teams were asked to trust decisions they could not explain. Organizations found themselves dependent on vendor-controlled AI systems with little visibility into how models behaved or what data shaped outcomes. That combination introduced new operational risk.

Three issues surfaced repeatedly:

Decision Explainability

When AI flags a threat, analysts must understand why. Without context, teams replace alert fatigue with verification fatigue.

Audit and Compliance Traceability

Regulators increasingly expect proof of how automated decisions are made. Models without traceability create compliance exposure.

Data Governance and Control

Vendor-hosted AI often requires exporting logs and telemetry into external infrastructure, raising concerns about ownership, retention, and oversight.

As AI usage scales, these risks compound and directly contribute to stalled or abandoned transformation efforts.

Why Context Determines Whether AI Actually Works

Security data is fragmented by design. Identity activity, endpoint telemetry, network traffic, and cloud logs rarely align cleanly. AI models trained on isolated datasets perform well in demonstrations but struggle when signals collide with daily noise during real incidents.

Context closes that gap.

Asset criticality, behavioral baselines, vulnerability exposure, and threat intelligence determine whether an alert matters. AI systems without access to that context force analysts to reconstruct meaning manually, erasing any potential efficiency gains. As with machine learning, LLM analytics requires appropriate data preparation.
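To make the role of context concrete, here is a minimal sketch of how the four context signals named above could adjust an alert's priority while recording the reasons for the result. Every name, weight, and field in it (`Alert`, `Context`, `prioritize`, the 0.15 increments) is a hypothetical chosen for illustration, not a description of any product's scoring logic.

```python
from dataclasses import dataclass

# All field names and weights below are illustrative assumptions,
# not values taken from any product or standard.


@dataclass
class Alert:
    host: str
    base_severity: float  # 0.0 to 1.0, as reported by the detection source


@dataclass
class Context:
    asset_criticality: float      # 0.0 (disposable host) to 1.0 (critical asset)
    deviates_from_baseline: bool  # activity falls outside the host's normal behavior
    has_open_vulnerability: bool  # a relevant unpatched vulnerability is known
    matches_threat_intel: bool    # an indicator matches a threat intelligence feed


def prioritize(alert: Alert, ctx: Context) -> tuple[float, list[str]]:
    """Return a priority score in [0, 1] and the reasons that produced it."""
    # Scale the raw severity by how much the affected asset matters.
    score = alert.base_severity * (0.5 + 0.5 * ctx.asset_criticality)
    reasons = [
        f"base severity {alert.base_severity:.2f}",
        f"asset criticality {ctx.asset_criticality:.2f}",
    ]
    # Each additional context signal raises the score and is recorded,
    # so an analyst can see why the alert ranked where it did.
    if ctx.deviates_from_baseline:
        score += 0.15
        reasons.append("deviates from behavioral baseline")
    if ctx.has_open_vulnerability:
        score += 0.15
        reasons.append("host has a relevant open vulnerability")
    if ctx.matches_threat_intel:
        score += 0.15
        reasons.append("indicator matches threat intelligence")
    return min(score, 1.0), reasons


if __name__ == "__main__":
    alert = Alert(host="db-01", base_severity=0.6)
    quiet = Context(0.0, False, False, False)
    critical = Context(1.0, True, True, False)
    print(prioritize(alert, quiet))
    print(prioritize(alert, critical))
```

The same alert lands at very different priorities depending on the context attached to it, and the returned list of reasons is what lets an analyst verify the ranking instead of reconstructing it by hand.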

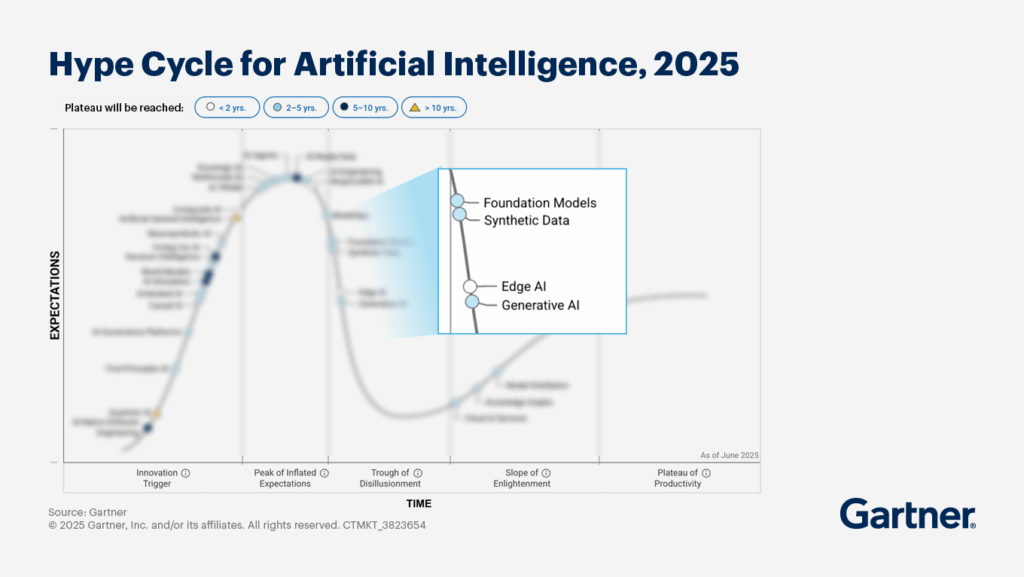

Industry research supports this point. Gartner has repeatedly noted that AI in security operations only delivers value when paired with contextual enrichment and workflow alignment. Intelligence that lives outside the analyst’s working surface remains theoretical, regardless of model sophistication.

This is why investment alone does not produce results. Like unused gym memberships, AI programs fail when ambition outpaces operational structure. Analysts will revert to tried-and-true methods, however manual they may be.

The Shift Toward Governed, Explainable Security AI

Organizations are recalibrating expectations. AI experimentation is widespread, but large-scale success remains limited. Roughly 62 percent of organizations are experimenting with AI agents, while only 23 percent have scaled them beyond pilots. The gap reflects growing recognition that AI must be governable to be trusted.

One visible change is the move toward private and controllable AI models. Instead of relying exclusively on opaque, vendor-hosted systems, organizations want frameworks they can inspect, fine-tune, and audit. Ownership of data and model behavior is becoming non-negotiable.

This shift also elevates data architecture. AI reliability depends on the pipeline feeding it. Transparent data frameworks allow teams to trace inputs, audit outputs, enforce retention policies, and detect misuse. Log management becomes foundational because, without visibility into data, explainable AI is impossible.
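As a rough sketch of what tracing inputs, auditing outputs, and enforcing retention can look like in practice, the example below writes one audit record per AI decision. The record layout, the `audit_record` and `is_expired` helpers, and the 90-day retention window are all assumptions made for illustration. They do not reflect any specific platform or regulatory requirement.

```python
import hashlib
import json
from datetime import datetime, timedelta, timezone

# Assumed retention policy, for illustration only.
RETENTION = timedelta(days=90)


def audit_record(model_id: str, inputs: list[dict], output: str, now: datetime) -> dict:
    """Build a traceable record of one AI decision."""
    # Serialize inputs with sorted keys so identical data always
    # produces the same fingerprint, regardless of key order.
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return {
        "model_id": model_id,
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "input_count": len(inputs),
        "output": output,
        "created_at": now.isoformat(),
        "expires_at": (now + RETENTION).isoformat(),
    }


def is_expired(record: dict, now: datetime) -> bool:
    """Return True once the record has reached its retention limit."""
    return now >= datetime.fromisoformat(record["expires_at"])


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    logs = [{"source": "auth", "event": "failed_login", "user": "svc-backup"}]
    record = audit_record("triage-model-v1", logs, "escalate", now)
    print(json.dumps(record, indent=2))
    print("expired:", is_expired(record, now))
```

Storing a fingerprint of the inputs alongside the output lets a reviewer later confirm which log data a conclusion was based on, provided the underlying logs are still retained and searchable.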

Security teams now expect AI to function as a first-class part of the SOC. Analysts need to see how conclusions are formed, where confidence weakens, and how results can be validated. AI that cannot be examined does not survive operational scrutiny.

Why Visibility Is the Starting Point For AI Governance

Generative AI in security only earns trust when teams understand what data it consumes, which signals it prioritizes, and how decisions are produced. That confidence depends on visibility across data flows and operational behavior.

Transparency means knowing what enters a model, how it is processed, and whether outputs align with expected patterns. It requires continuous monitoring of AI behavior and validation using the same context analysts rely on during investigations and audits.

It also means retaining control over sensitive operational data so governance policies can be enforced consistently. In practice, AI governance begins with data governance, and data governance starts with visibility.

From AI Resolutions to Operational Results

By 2026, the AI market has matured. The early surge of experimentation has given way to accountability. Security leaders measure success by outcomes, not deployments.

The lesson from failed transformations is clear. Progress does not come from rapid escalation. It comes from systems designed around how security teams actually operate. In cybersecurity, that design principle is context.

Organizations that succeed with AI in 2026 are those that built explainable, governable systems they can see, understand, and control. Not because AI requires it, but because security operations do.

See how Graylog 7.0 approaches explainable AI.

VP of Product Management