After a long day, you sit down on the couch to watch your favorite episode of I Love Lucy on your chosen streaming platform. You decide on the episode where Lucy can’t keep up with the chocolates on the conveyor belt at the factory where she works. Without realizing it, you’re actually watching an explanation of how the streaming platform – and your security analytics tool – work.

Data streaming is the real-time processing and delivery of data. Just like those chocolates coming through on the conveyor belt, your security analytics and monitoring solution collects, parses, and normalizes data so that you can use it to understand your IT environment and potential threats to your security.

Using streaming data for cybersecurity may present some challenges, but it also drives the analytics models that allow you to improve your threat detection and incident response program.

What is streaming data?

Streaming data is a continuous flow of information processed in real-time that businesses can use to gain insights immediately. Since the data typically appears in chronological order, organizations can apply analytics models to identify or predict changes.

The key characteristics of streaming data include:

- Continuous flow: non-stop real-time data processed as it arrives

- Sequential order: data elements in chronological order for consistency

- Timeliness: rapid access for time-sensitive decision-making and activity

Continuous streaming data enables security professionals to detect patterns and identify anomalies that may indicate a potential threat or incident. Most security telemetry is streaming data, like:

- Access logs

- Web application logs

- Network traffic logs

Streaming Data vs. Static Data

Streaming data and static data differ significantly in their characteristics and applications. While static data is fixed and collected at a single point in time, streaming data is continuous and time-sensitive, containing timestamps.

Timeliness

When using data for security use cases, timeliness is a key differentiator:

- Static data: fixed, point-in-time snapshot

- Streaming data: continuous, real-time, no defined end

Sources

For security professionals, this difference is often the one that makes managing and using security data challenging:

- Static data: typically fewer, defined sources

- Streaming data: large numbers of sources across diverse geographic locations and technologies

Format

When managing security telemetry, format is often the most challenging difference that you need to manage:

- Static data: uniform and structured

- Streaming data: various formats, including structured, unstructured, and semi-structured
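
To make the format difference concrete, here is a minimal sketch of normalizing structured, semi-structured, and unstructured log lines into one common shape. The field names (`ts`, `src`, `msg`, `time`, `ip`, `action`) and the sample lines are assumptions for illustration only, not a schema used by any particular product.

```python
import json

def normalize(raw: str) -> dict:
    """Map a raw log line to a common schema (field names are hypothetical)."""
    raw = raw.strip()
    if raw.startswith("{"):
        # Structured: a JSON object
        record = json.loads(raw)
        return {
            "timestamp": record.get("ts"),
            "source_ip": record.get("src"),
            "message": record.get("msg", ""),
        }
    if "=" in raw:
        # Semi-structured: space-separated key=value pairs
        pairs = dict(part.split("=", 1) for part in raw.split() if "=" in part)
        return {
            "timestamp": pairs.get("time"),
            "source_ip": pairs.get("ip"),
            "message": pairs.get("action", ""),
        }
    # Unstructured: keep the text and leave the other fields empty
    return {"timestamp": None, "source_ip": None, "message": raw}

samples = [
    '{"ts": "2024-01-01T00:00:00Z", "src": "10.0.0.5", "msg": "login failed"}',
    "time=2024-01-01T00:00:05Z ip=10.0.0.6 action=deny",
    "kernel panic",
]
for line in samples:
    print(normalize(line))
```

Once every event has the same keys, you can correlate across sources without writing format-specific logic in each detection.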

Analytics Use

The key difference that matters for security teams is how to use the data with analytics models:

- Static data: mostly historical insights

- Streaming data: predictive analytics and anomaly detection

What are the major components of a stream processor?

With a data stream processor, you can get timely insights by analyzing and visualizing your security data.

Data stream management

Data stream management involves data storage, processing, analysis, and integration so that you can generate visualizations and reports. Typically, these technologies have:

- Data source layer: captures and parses data

- Data ingestion layer: handles the flow of data between source and processing layers

- Processing layer: filters, transforms, aggregates, and enriches data, and detects patterns

- Storage layer: ensures data durability and availability

- Querying layer: tools for asking questions and analyzing stored data

- Visualization and reporting layer: renders visualizations like charts and graphs to help generate reports

- Integration layer: connects with other technologies
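
The layers above can be sketched as a chain of small functions. This is only an illustration under simplifying assumptions: the comma-separated input format, the `heartbeat` and `login_failed` action names, and the in-memory list standing in for durable storage are all hypothetical.

```python
from typing import Iterable, Iterator

def source(lines: Iterable[str]) -> Iterator[dict]:
    """Data source layer: capture raw lines and parse them into events."""
    for line in lines:
        user, action = line.split(",")
        yield {"user": user, "action": action}

def process(events: Iterable[dict]) -> Iterator[dict]:
    """Processing layer: filter out noise and enrich what remains."""
    for event in events:
        if event["action"] == "heartbeat":
            continue  # filter: drop events with no security value
        event["failed"] = event["action"] == "login_failed"  # enrich
        yield event

def store(events: Iterable[dict], storage: list) -> None:
    """Storage layer: an in-memory list stands in for a durable store."""
    storage.extend(events)

def query_failed_by_user(storage: list) -> dict:
    """Querying layer: count failed logins per user."""
    counts = {}
    for event in storage:
        if event["failed"]:
            counts[event["user"]] = counts.get(event["user"], 0) + 1
    return counts

raw = ["alice,login_failed", "bob,heartbeat", "alice,login_failed", "bob,login_ok"]
storage = []
store(process(source(raw)), storage)
print(query_failed_by_user(storage))
```

Because each layer is a generator, events flow through one at a time instead of being loaded in bulk, which mirrors how a stream processor handles data with no defined end.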

Complex event processing

Complex event processing identifies meaningful patterns or anomalies within the data streams. The components of complex event processing include:

- Event Detection: Identifies significant occurrences within data streams.

- Insight Extraction: Derives actionable information from detected events.

- Rapid Transmission: Ensures swift communication to higher layers for real-time action.

These functionalities are critical for real-time analysis, threat detection, and response.
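
As a rough sketch of complex event processing, the following correlates individual login events into one higher-level pattern: a successful login that follows several recent failures. The 60-second window, the threshold of three failures, and the `(timestamp, user, outcome)` event shape are assumptions chosen for the example.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60     # assumed correlation window
FAILURE_THRESHOLD = 3   # assumed number of failures that makes a success suspicious

def detect_bruteforce(events):
    """Yield an alert when a user succeeds after repeated recent failures.

    Each event is a (timestamp_seconds, user, outcome) tuple in time order.
    """
    recent_failures = defaultdict(deque)
    for ts, user, outcome in events:
        failures = recent_failures[user]
        # Drop failures that have aged out of the window
        while failures and ts - failures[0] > WINDOW_SECONDS:
            failures.popleft()
        if outcome == "failure":
            failures.append(ts)
        elif outcome == "success":
            if len(failures) >= FAILURE_THRESHOLD:
                yield {"user": user, "time": ts, "failures": len(failures)}
            failures.clear()

events = [
    (0, "alice", "failure"),
    (10, "alice", "failure"),
    (20, "alice", "failure"),
    (25, "alice", "success"),
    (30, "bob", "failure"),
    (200, "bob", "success"),
]
for alert in detect_bruteforce(events):
    print(alert)
```

Here only alice triggers an alert. Bob's single failure falls outside the window by the time he logs in, so it is discarded.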

Data collection and aggregation

Data collection and aggregation organizes the incoming data, normalizing it so that you can correlate various sources to extract insights. Real-time data analysis through streaming enhances an organization’s ability to detect and respond to cyber threats promptly, improving its overall security posture. Continuous monitoring and strong security measures are pivotal to protecting data integrity during transit.

Continuous logic processing

Continuous logic processing is the core of stream processing architecture, executing queries on incoming data streams to generate useful insights. This subsystem is crucial for real-time data analysis, ensuring the prompt insights essential for maintaining vigilance against potential cybersecurity threats.
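
One way to picture a query that runs continuously is a counter over fixed time windows that emits a result each time a window closes. This is a simplified sketch, assuming events arrive in time order as `(timestamp_seconds, source)` tuples; the 60-second window and source names are illustrative.

```python
from collections import Counter

def count_per_window(events, window_seconds=60):
    """Continuous query: emit per-source event counts for each fixed window.

    Yields (window_start_seconds, counts) as soon as a window closes.
    """
    current_window = None
    counts = Counter()
    for ts, source in events:
        window = ts // window_seconds
        if current_window is not None and window != current_window:
            yield current_window * window_seconds, dict(counts)
            counts = Counter()
        current_window = window
        counts[source] += 1
    if counts:
        # Flush the final, still-open window when the input ends
        yield current_window * window_seconds, dict(counts)

events = [(5, "fw"), (20, "fw"), (59, "vpn"), (61, "fw"), (130, "vpn")]
for window_start, counts in count_per_window(events):
    print(window_start, counts)
```

The query never waits for the full dataset. Each result is available as soon as its window ends, which is what makes the insight prompt.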

What are the data streaming and processing challenges with cybersecurity telemetry?

Data streaming and processing come with several challenges for cybersecurity teams who deal with data heterogeneity that impacts their ability to detect and investigate incidents.

Data Volume and Diversity

Modern IT environments generate high volumes of data in disparate formats. For example, security analysts often struggle with the different formats across their logs.

Chronological order

Data streams enable you to use real-time data to promptly identify anomalies or detect security incidents. However, as the data streams in, you need to ensure that you can organize it chronologically, especially when you need to write your security incident report.
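
Events from different sources often arrive slightly out of order. A common way to restore chronological order is to hold events briefly in a buffer and release them once they are older than an allowed delay. The sketch below assumes no event arrives more than `max_delay` seconds late; that bound, and the `(timestamp, payload)` event shape, are assumptions for illustration.

```python
import heapq

def reorder(events, max_delay=5):
    """Re-emit (timestamp, payload) events in timestamp order.

    Events arriving more than `max_delay` seconds late can still
    come out of order, because earlier events were already released.
    """
    buffer = []
    max_seen = None
    for sequence, (ts, payload) in enumerate(events):
        # The sequence number breaks ties so payloads are never compared
        heapq.heappush(buffer, (ts, sequence, payload))
        max_seen = ts if max_seen is None else max(max_seen, ts)
        # Release everything old enough that no earlier event is expected
        while buffer and buffer[0][0] <= max_seen - max_delay:
            released_ts, _, released_payload = heapq.heappop(buffer)
            yield released_ts, released_payload
    # Input ended: flush whatever is left, still in order
    while buffer:
        released_ts, _, released_payload = heapq.heappop(buffer)
        yield released_ts, released_payload

arrivals = [(1, "a"), (3, "c"), (2, "b"), (9, "d"), (8, "e")]
print(list(reorder(arrivals)))
```

The trade-off is latency: a larger `max_delay` tolerates later arrivals but holds events back longer before they reach your detections.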

Scalability

The increasing volume of streaming data requires processing systems to adapt dynamically to varying loads to maintain quality. For example, you may need to scale up your analytics – and therefore data processing requirements – when engaging in an investigation.

Benefits of data streaming and processing for cybersecurity teams

When you use real-time data streaming, you can move toward a proactive approach to cybersecurity, enabling real-time detections and faster incident response. Utilizing Data Pipeline Management can also help you save costs when routing data where you want it.

Infrastructure Cost Reduction

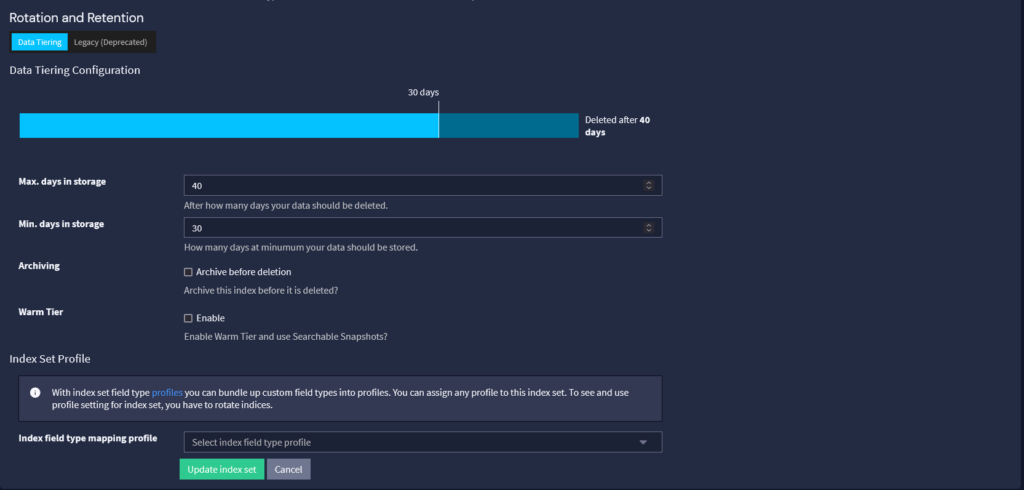

When you use streaming data, you can build a security data lake strategy that involves data tiering. You can reduce the total cost of ownership by optimizing the balance of storage costs and system performance. For example, you could have the following three tiers of data:

- Hot: data needed immediately for real-time detections

- Warm: data stored temporarily in case you need it to investigate an alert

- Cold: long term data storage for historical data or to meet a retention compliance requirement

When you can store cold data in a cheaper location, like an S3 bucket, you reduce overall costs.
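
A tiering policy can be as simple as routing by event age. The sketch below is one possible rule, not a recommended policy: the 7-day and 30-day cutoffs are assumptions, and real policies may also consider data type or compliance requirements.

```python
from datetime import datetime, timedelta, timezone

HOT_MAX_AGE = timedelta(days=7)     # assumed cutoff for real-time detections
WARM_MAX_AGE = timedelta(days=30)   # assumed cutoff for alert investigations

def choose_tier(event_time: datetime, now: datetime) -> str:
    """Pick a storage tier for an event based on how old it is."""
    age = now - event_time
    if age <= HOT_MAX_AGE:
        return "hot"
    if age <= WARM_MAX_AGE:
        return "warm"
    return "cold"

now = datetime(2024, 6, 1, tzinfo=timezone.utc)
for days_old in (1, 14, 90):
    print(days_old, choose_tier(now - timedelta(days=days_old), now))
```

Keeping the cutoffs as named constants makes it easy to tune the balance between storage cost and query performance.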

Improve Detections

Streaming data enables you to parse, normalize, aggregate, and correlate log information from across your IT environment. When you use real-time data, you can detect threats faster, enabling you to mitigate the damage an attacker can do. The aggregation and correlation capabilities enable you to create high-fidelity alerts so you can focus on the potential threats that matter to your systems, networks, and data. Additionally, since streaming data is already processed, you can enrich and integrate it with:

- Threat intelligence

- MITRE ATT&CK Framework techniques and subtechniques

- Sigma rules
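
As a small illustration of enrichment, the sketch below attaches threat intelligence context to an event by looking up its source IP. The indicator list is hypothetical and uses addresses reserved for documentation; a real deployment would load indicators from a threat intelligence feed.

```python
# Hypothetical indicator list for illustration only
THREAT_INTEL = {
    "203.0.113.7": "known scanner",
    "198.51.100.23": "botnet command and control",
}

def enrich(event: dict) -> dict:
    """Return a copy of the event with threat intelligence context attached."""
    enriched = dict(event)
    label = THREAT_INTEL.get(event.get("source_ip"))
    enriched["threat_intel"] = label
    enriched["known_bad"] = label is not None
    return enriched

print(enrich({"source_ip": "203.0.113.7", "action": "login_failed"}))
print(enrich({"source_ip": "10.0.0.5", "action": "login_ok"}))
```

Enriching at processing time means the context travels with the event, so alerts and searches do not need a separate lookup later.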

Apply Security Analytics

Security analytics are analytics models focused on cybersecurity use cases, like:

- Security hygiene: how well your current controls work

- Security operations: anomaly detection, like identifying abnormal user behavior

- Compliance: identifying security trends like high severity alerts by source, type, user, or product

For example, anomaly detection analytics identify behaviors within data that do not conform to expected norms, enabling you to prevent or detect unauthorized access or potential data exfiltration attempts.
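
One simple form of anomaly detection compares a new value against a baseline using a z-score. The sketch below is a basic statistical check, assuming a short history of hourly login counts and a threshold of three standard deviations; production analytics models are typically more sophisticated.

```python
from statistics import mean, stdev

def is_anomalous(history, value, threshold=3.0):
    """Flag a value that deviates from the baseline by more than `threshold` standard deviations."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return value != baseline  # any change from a perfectly flat baseline stands out
    return abs(value - baseline) / spread > threshold

hourly_logins = [10, 12, 11, 9, 10, 11, 12, 10]  # illustrative baseline
print(is_anomalous(hourly_logins, 11))
print(is_anomalous(hourly_logins, 40))
```

With this baseline, 11 logins in an hour falls within the expected range, while 40 is far outside it and would be flagged for review.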

Graylog Security: Real-time data for improved threat detection and incident response

Graylog Enterprise and Security scale as your data grows, reducing total cost of ownership (TCO). Our platform’s data tiering, built on its data pipeline management capability, automatically organizes data to optimize access and minimize costs without compromising performance.

With frequently accessed data kept on high-performance systems and less active data in more cost-effective storage solutions, you can leverage Graylog Security’s built-in content to uplevel your threat detection and incident response (TDIR) processes. Our solution combines MITRE ATT&CK’s knowledge base of adversary behavior and vendor-agnostic Sigma rules so you can rapidly respond to incidents, improving key cybersecurity metrics. By combining the power of MITRE ATT&CK and Sigma rules, you can spend less time developing custom cyber content and more time on critical tasks.

To learn how Graylog can help you cost-effectively optimize your telemetry, contact us today or watch a demo.