Welcome to part two of a three-part series on trend analysis of log event data. Today, we will explore how to use Graylog to perform a few of the types of trend analysis discussed previously.

As I said before, trend analysis provides rich information. Trends can yield insights into the operational and security health of your network that are otherwise difficult to discern.

One of the advantages Graylog has over other log management solutions is the ease of analysis. From a simple query language to one-click visualization tools that can be immediately turned into dashboards for ongoing monitoring, Graylog makes analysis easier.

Note that these examples are very simple, meant only to introduce the concepts involved. They are not meant to be completely realistic, nor are they exhaustive. Most of your real-world analysis will rely on far more complex queries drawing on a wide variety of sources.

Overall Event Volume

Because trend analysis involves looking at events over time, it lends itself to graph visualizations. For example, if you want to look at the total number of events, you can run a query over any relative period of time, “Last 7 Days” for example. You could also specify a range between two dates. The histogram is created automatically in the search window for each query you run.

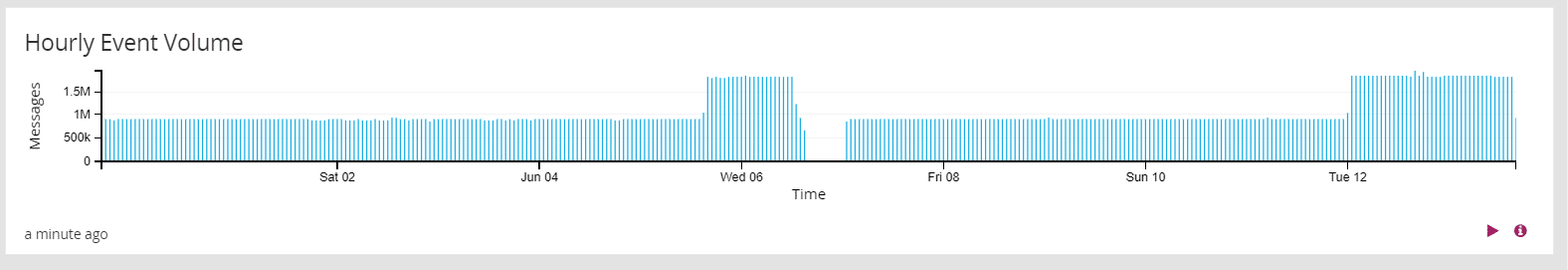

These histograms make spotting anomalies in overall event volumes a breeze. For example, in the graph below, we see that there are three large variations from what is otherwise a very steady event rate.

The first thing that stands out is the two large spikes. Our average event volume is a little less than one million events per hour over a two-week period. However, on two occasions, our event volume suddenly doubles, and remains that high for a day or longer.

The second thing we notice is that after the first spike, there is a period where our event rate drops well below the average. In fact, it appears that we received no events for an extended period.

What does this tell us? Nothing definite yet. It does indicate, however, that we need to do some more digging. The spikes could represent a period when our network was under attack, or they could indicate a normal period of increased events due to a legitimate business process. The gap in collected events could indicate a failure of our log management system or our network. But it could just as easily represent a planned outage for maintenance of those same resources.

As with most trend analysis, the graph does not give us black and white answers, but it does show us areas that may indicate problems and give us clues about where (in time) to look to uncover them. If the anomalies turn out to be legitimate, we can make note of them so that we do not spend time investigating the same pattern again in the future.
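To make the idea of spotting spikes and gaps concrete, here is a minimal sketch in Python. It is not part of Graylog and does not use any Graylog API. It assumes we have already exported hourly event counts into a plain list, and the function name, the thresholds, and the sample numbers are all made up for illustration.

```python
from statistics import median


def flag_volume_anomalies(hourly_counts, spike_factor=2.0, gap_factor=0.1):
    """Return (hour_index, count, label) for hours far from the median volume.

    spike_factor and gap_factor are illustrative thresholds, not Graylog settings.
    """
    baseline = median(hourly_counts)
    anomalies = []
    for hour, count in enumerate(hourly_counts):
        if count >= baseline * spike_factor:
            anomalies.append((hour, count, "spike"))
        elif count <= baseline * gap_factor:
            anomalies.append((hour, count, "gap"))
    return anomalies


# Hypothetical hourly counts: a steady rate, a doubling, then a gap.
sample = [950_000, 940_000, 2_000_000, 1_950_000, 960_000, 0, 0, 955_000]

for hour, count, label in flag_volume_anomalies(sample):
    print(f"hour {hour}: {count} events ({label})")
```

The median is used as the baseline here because, unlike the mean, it is not pulled upward by the very spikes we are trying to find. As with the histogram, the output only tells us where to look, not what happened.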

Taking The Long View

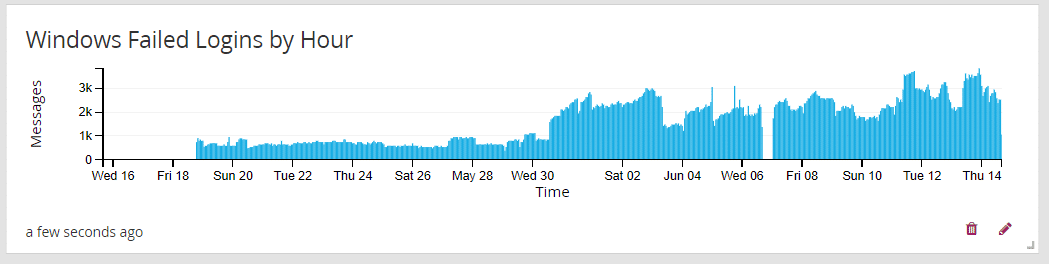

Sometimes patterns are only visible over a longer period of time. For this example, we are looking at Windows failed login events over the last 30 days. To create this graph, we write a very simple query, event_id:4625. This gives us a histogram that defaults to Weeks. If we click on the Hours link, it shows us a graph like this:

It’s immediately apparent that there is an abrupt and significant increase in the number of failed login events on or about the 31st. Furthermore, it stays higher. This certainly warrants investigation. The most common cause for this is expired service account or user credentials, but it could also indicate the presence of attackers trying to access internal resources as part of their reconnaissance of our network.
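A sustained increase like this one can also be located programmatically. The sketch below is one simple approach, assuming we have a list of daily failed-login counts exported from the search. It tries each possible split point and reports the one where the average after the split is largest relative to the average before it. The function name, the `min_segment` parameter, and the sample data are hypothetical.

```python
from statistics import mean


def find_level_shift(daily_counts, min_segment=3):
    """Return (split_index, ratio) for the split with the largest after/before mean ratio.

    min_segment keeps each side of the split long enough to average sensibly.
    Returns (None, 0.0) if no valid split exists.
    """
    best_index, best_ratio = None, 0.0
    for i in range(min_segment, len(daily_counts) - min_segment + 1):
        before = mean(daily_counts[:i])
        after = mean(daily_counts[i:])
        if before == 0:
            continue  # avoid dividing by zero when there were no earlier events
        ratio = after / before
        if ratio > best_ratio:
            best_index, best_ratio = i, ratio
    return best_index, best_ratio


# Hypothetical daily counts of failed logins: low, then abruptly and persistently higher.
failed_logins = [20, 22, 19, 21, 20, 80, 85, 78, 82]

index, ratio = find_level_shift(failed_logins)
print(f"shift begins at day index {index}, about {ratio:.1f}x the earlier average")
```

This only identifies when the level changed. Deciding whether the cause is expired credentials or something more serious still requires looking at the events themselves.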

Analyzing Values Within Events

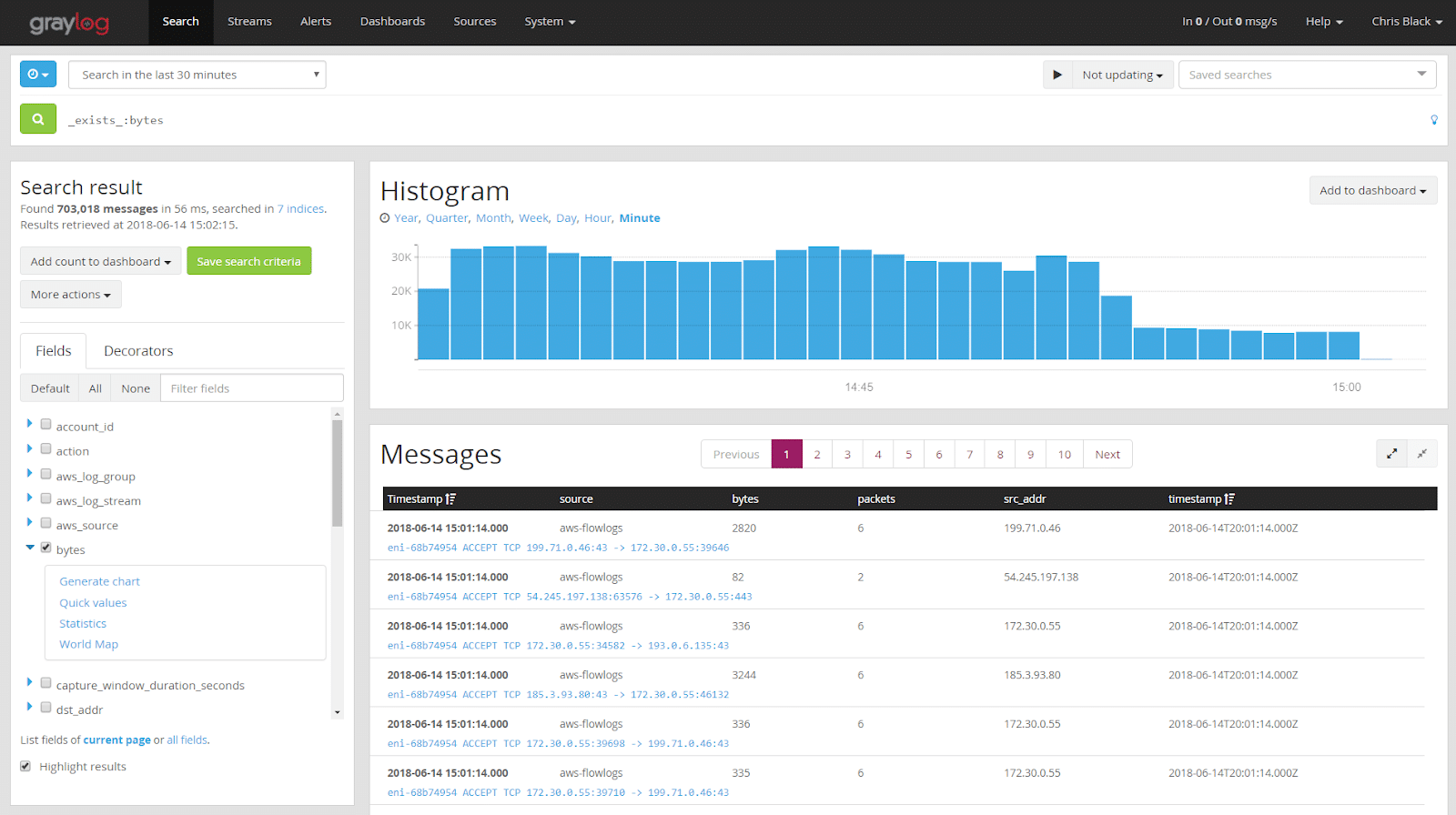

While the overall volume of events can be revealing, applying trend analysis to values within the logs is also useful. For example, we could look at the total amount of data transferred out of our network over a given period. As with our previous example, large spikes or gaps could point to a problem. To create this graph in Graylog, we wrote a simple query: _exists_:bytes_out. This query tells Graylog to include only events where the field bytes_out is present. The graph would work without that filter, but as a best practice for performance, we want to narrow our search to just those events that contain relevant data.

When we run the query, Graylog returns the familiar histogram, but also gives us a list of fields in the left pane. These are the fields that are present in the events that matched our query.

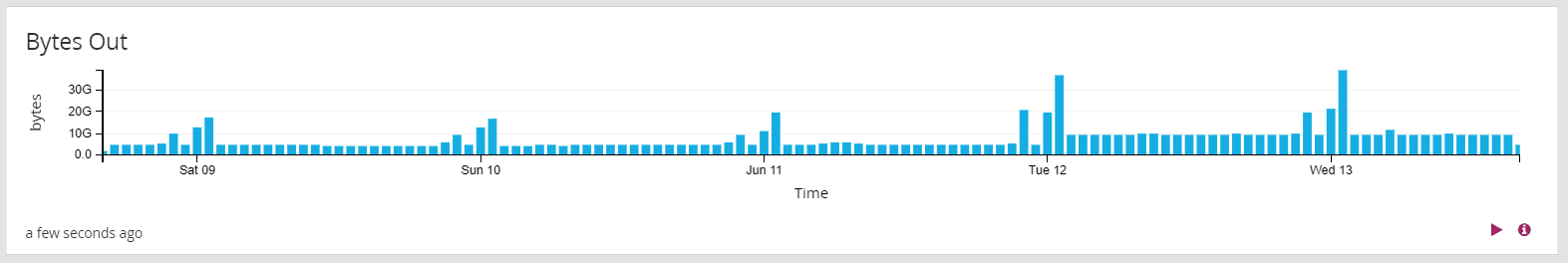

To create a graph, we click on the arrow next to the field name we want to use, in this case bytes_out, and click Generate Chart. The resulting chart looks like our default histogram, counting only the total number of events. We need to sum the values within the field instead. To do this, we click on the Customize button and choose Value > Sum. That gives us a graph that looks like this:

In this graph, we see a steady rate of outgoing data volume, punctuated by occasional spikes. The spikes could indicate a problem, such as data exfiltration, but their regularity and the fact that the first three are very similar in volume suggest it may be a regularly occurring event. Here, we might only do a cursory check to make sure it looks legitimate, were it not for the fourth and fifth occurrences, where the volume of data increases by almost 50%. As analysts, we would want to understand why those days were higher. We might also want to run the same search over a longer period of time to see if that pattern has been visible in the past.
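For readers who want to see what the filter and the Sum option amount to, here is a rough Python equivalent. It is only a sketch of the logic, not how Graylog implements it. It assumes events are available as dictionaries with an ISO-formatted `timestamp` string, and the function name and sample events are invented for illustration.

```python
from collections import defaultdict
from datetime import datetime


def sum_field_by_day(events, field="bytes_out"):
    """Sum a numeric field per calendar day, skipping events that lack the field."""
    totals = defaultdict(int)
    for event in events:
        if field not in event:
            continue  # same idea as the _exists_:bytes_out filter
        day = datetime.fromisoformat(event["timestamp"]).date().isoformat()
        totals[day] += event[field]
    return dict(totals)


# Hypothetical events; the third has no bytes_out field and is ignored.
events = [
    {"timestamp": "2018-01-01T09:15:00", "bytes_out": 1200},
    {"timestamp": "2018-01-01T17:40:00", "bytes_out": 800},
    {"timestamp": "2018-01-01T18:00:00", "message": "login ok"},
    {"timestamp": "2018-01-02T03:05:00", "bytes_out": 5000},
]

for day, total in sorted(sum_field_by_day(events).items()):
    print(day, total)
```

Counting events per day would report three for the first day and one for the second, which says nothing about how much data left the network. Summing the field is what turns the histogram into a measure of volume.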

More Than The Sum…

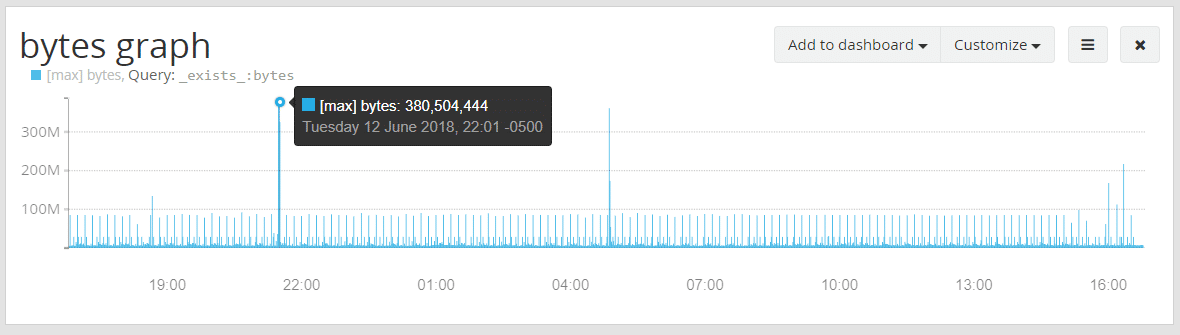

Another way to look for anomalies is to use one of the other statistical functions built into Graylog. For this example, we are looking at the last day’s events, but at a resolution of 1 minute. We also customized this graph to show the Max Value for this field per minute.

When we do this, we see several large spikes, where outgoing data volume more than triples the average for a single flow. Again, the cause may be benign, but this was not visible until we chose to look at the data in this way. Similarly, we could use the Min value to find cases where the lowest value recorded is higher or lower than we would expect.
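The same idea can be sketched outside of Graylog. The Python below groups values into one-minute buckets, records the minimum and maximum of each bucket, and lists the minutes whose largest single value exceeds three times the overall average. The input format (pairs of a minute label and a bytes_out value), the function names, and the `factor` threshold are assumptions made for this example.

```python
from collections import defaultdict
from statistics import mean


def per_minute_extremes(samples):
    """Map each minute label to the (min, max) of the values seen in that minute."""
    buckets = defaultdict(list)
    for minute, value in samples:
        buckets[minute].append(value)
    return {minute: (min(values), max(values)) for minute, values in buckets.items()}


def minutes_with_large_flows(samples, factor=3.0):
    """Return minutes whose maximum value exceeds factor times the overall average."""
    samples = list(samples)
    average = mean(value for _, value in samples)
    extremes = per_minute_extremes(samples)
    return [
        minute
        for minute, (_, highest) in sorted(extremes.items())
        if highest > factor * average
    ]


# Hypothetical (minute, bytes_out) pairs; one flow at 12:01 is far larger than the rest.
flows = [
    ("12:00", 100), ("12:00", 120),
    ("12:01", 110), ("12:01", 900),
    ("12:02", 90), ("12:02", 105),
]

print(per_minute_extremes(flows))
print(minutes_with_large_flows(flows))
```

Swapping the comparison to use the minimum of each bucket gives the corresponding check for the Min value.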

Conclusion

We have described a few of the ways events may be analyzed over time to reveal things that might not be otherwise apparent. These help to illustrate the power of Graylog to grant greater visibility and insight for analysts and managers alike.

In Graylog version 3.0, we will introduce new forms of analysis and new tools for visualization. Part 3 of this series will introduce those and provide a few more examples of how to get more value from Graylog. Watch this space as the release gets closer!