Starting with Graylog v2.3, we’ve added support for Elasticsearch 5. As you may know, Elasticsearch 5 allows the use of the hot/warm cluster architecture.

WHAT IS THE HOT/WARM CLUSTER ARCHITECTURE AND WHY IS IT IMPORTANT TO GRAYLOG?

In their blog post, Elastic recommends using time-based indices and a tiered architecture with three types of nodes (master, hot, and warm) when using Elasticsearch for larger time-based data analytics use cases.

The practical benefit of this architecture is the same as that of any other tiered storage architecture used in data processing: it maximizes speed and minimizes cost by placing your “most important” data on your fastest storage medium while storing your “less important” data on commodity storage. It can also be used to accommodate high-volume event sources that might overwhelm commodity hardware.

A disclaimer is in order. This example assumes that most of your analysis and searching will run against very recent data and that logs are accessed less frequently as they age.

In this post, we will describe one way to make use of the hot/warm architecture. In our example, you hold the last two days' worth of collected events on servers equipped with SSD storage. Older data is shifted over to servers using slower HDD storage before you eventually archive indices to your slowest storage medium.

Because this setup requires changes to the Elasticsearch configuration and some custom index templates, you cannot use it on a hosted Elasticsearch cluster such as the one offered by AWS.

ELASTICSEARCH PREPARATION

To identify the different cluster members, we will set a node attribute on each node. This way, Elasticsearch can decide on which nodes indices are created. To make things easy, the attribute will be called box_type and will be set to hot or warm.

Just add node.attr.box_type: hot to the elasticsearch.yml of the fast nodes and node.attr.box_type: warm to nodes that should hold the older data.

We will follow the same naming convention used in the Elastic blog post so that you can jump between both posts as needed.

After these settings are added to the Elasticsearch configuration, you need to restart each Elasticsearch node you changed.
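Once the nodes are back up, it may be worth confirming that each one reports the attribute you expect. The following is a minimal sketch of such a check, assuming the cluster answers on http://localhost:9200 and that node names contain no spaces. The function names `fetch_nodeattrs` and `nodes_by_box_type` are illustrative and not part of Graylog or Elasticsearch. It reads the `_cat/nodeattrs` API and groups node names by their box_type value.

```python
import urllib.request
from collections import defaultdict


def fetch_nodeattrs(base_url="http://localhost:9200"):
    """Fetch node attributes as plain text (needs a reachable cluster).

    The base URL is an assumption; adjust it to your environment.
    """
    url = base_url + "/_cat/nodeattrs?h=node,attr,value"
    with urllib.request.urlopen(url) as response:
        return response.read().decode("utf-8")


def nodes_by_box_type(cat_output):
    """Group node names by the value of their box_type attribute.

    Expects lines of the form "<node> <attr> <value>", as returned by
    _cat/nodeattrs with h=node,attr,value. Other attributes are ignored.
    """
    groups = defaultdict(list)
    for line in cat_output.splitlines():
        parts = line.split()
        if len(parts) == 3 and parts[1] == "box_type":
            groups[parts[2]].append(parts[0])
    return dict(groups)
```

Calling `nodes_by_box_type(fetch_nodeattrs())` should then return one list of node names per box_type value; a node that is missing from both lists has not picked up the setting.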

GRAYLOG PREPARATION

Below, we will describe only the steps necessary to prepare the default index used by Graylog. If you have additional indices, repeat the steps for each index.

The index routing needs to be set in a custom index template. The same template can also hold additional field mappings or set the refresh_interval, which we recommend setting to 30 seconds. More details can be found in the documentation.

Following our documentation, create a file graylog-custom-mapping.json with the following content:

{
  "template": "graylog*",
  "settings": {
    "index": {
      "routing": {
        "allocation": {
          "require": {
            "box_type": "hot"
          }
        }
      },
      "refresh_interval": "30s"
    }
  }
}

Push that into your Elasticsearch cluster with the following command:

curl -X PUT -d @'graylog-custom-mapping.json' 'http://localhost:9200/_template/graylog-custom-mapping?pretty'

Now each newly created index will be placed only on nodes that have the box_type attribute set to hot, with a refresh_interval of 30 seconds.
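If the attribute name in the template does not match the one set in elasticsearch.yml, no node satisfies the requirement and the shards of new indices stay unassigned. A small pre-flight check along the following lines could catch that before the template is pushed. This is only a sketch: the function names are hypothetical, the file name is the one used above, and the expected attribute (box_type with the value hot) is taken from the node settings described earlier.

```python
import json


def required_attributes(template):
    """Return the index.routing.allocation.require mapping of a template body.

    Returns an empty dict if any level of the path is missing.
    """
    node = template
    for key in ("settings", "index", "routing", "allocation", "require"):
        node = node.get(key, {})
    return node


def template_routes_to(template, attr="box_type", value="hot"):
    """Check that the template requires the given node attribute value."""
    return required_attributes(template).get(attr) == value


def load_template(path="graylog-custom-mapping.json"):
    """Load the template file; json.load also rejects typographic quotes."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)
```

For example, `template_routes_to(load_template())` returns False when the key is misspelled, and `json.load` raises an error if the file was pasted with curly quotes instead of straight ones.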

Keep in mind that the number of shards and replicas should match the number of hot nodes, not the total number of Elasticsearch nodes you have available. You can check this in your index set configuration.
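As a rough illustration of that sizing rule: Elasticsearch never places a replica on the same node as its primary, so with the allocation requirement in place the replica count has to stay below the number of hot nodes. The sketch below does the arithmetic for a given layout. The function name and the returned keys are made up for this example, and the per-node figure assumes the shard copies are spread evenly, which the cluster aims for but does not guarantee.

```python
def hot_layout(hot_nodes, shards, replicas):
    """Summarize how an index set's shard copies fit onto the hot nodes.

    hot_nodes: number of nodes with box_type hot
    shards:    primary shards per index
    replicas:  replica copies per primary shard
    """
    total_copies = shards * (1 + replicas)
    return {
        "total_copies": total_copies,
        # A replica cannot share a node with its primary or another replica.
        "replicas_assignable": replicas < hot_nodes,
        # Ceiling division: the busiest node's share under an even spread.
        "max_copies_per_node": -(-total_copies // hot_nodes),
    }
```

With two hot nodes, four shards and one replica this yields eight shard copies, four per node, and all replicas can be assigned. Raising the replica count to two on the same two nodes would leave replicas unassigned.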

HOUSEKEEPING

The above configuration ensures that new indices are created only on the Elasticsearch nodes with the attribute hot. We also need to move data older than two days over to the slower nodes with the attribute warm. For that, we use Curator, a tool for housekeeping on your Elasticsearch cluster.

INSTALL AND CONFIGURE CURATOR

First install Curator on your system. Then, create a configuration file – we placed ours in ‘~/.curator/curator.yml’ to be able to just run the command curator without the need for additional parameters.

The next step is to create a curator action file. For us, it looks like this:

actions:
  1:
    action: allocation
    description: "Apply shard allocation filtering rules to the specified indices"
    options:
      key: box_type
      value: warm
      allocation_type: require
      wait_for_completion: true
      timeout_override:
      disable_action: false
    filters:
    - filtertype: pattern
      kind: prefix
      value: graylog_
    - filtertype: age
      source: creation_date
      direction: older
      unit: days
      unit_count: 2

This action file migrates the indices to the warm nodes once they are older than the specified age. The age is controlled by the unit_count setting of the age filter.
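To make the selection logic concrete, here is a small sketch of what the two filters amount to: keep only indices with the graylog_ prefix whose creation date lies more than unit_count days in the past. This is an illustration of the idea, not Curator's actual code. The function name is hypothetical, and the index names and dates in the usage note are examples only.

```python
from datetime import datetime, timedelta


def indices_older_than(creation_dates, now, unit_count=2, prefix="graylog_"):
    """Return the names of indices that match both filters.

    creation_dates: mapping of index name to creation datetime
    now:            reference time for the age comparison
    unit_count:     age threshold in days
    prefix:         index name prefix (the "pattern" filter)
    """
    cutoff = now - timedelta(days=unit_count)
    return sorted(
        name
        for name, created in creation_dates.items()
        if name.startswith(prefix) and created < cutoff
    )
```

Given an index graylog_160 created three days ago and an index graylog_161 created yesterday, only graylog_160 would be selected for the move to the warm nodes.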

This Curator configuration also includes a forcemerge of the segments, which optimizes the Elasticsearch indices.

RUN CURATOR

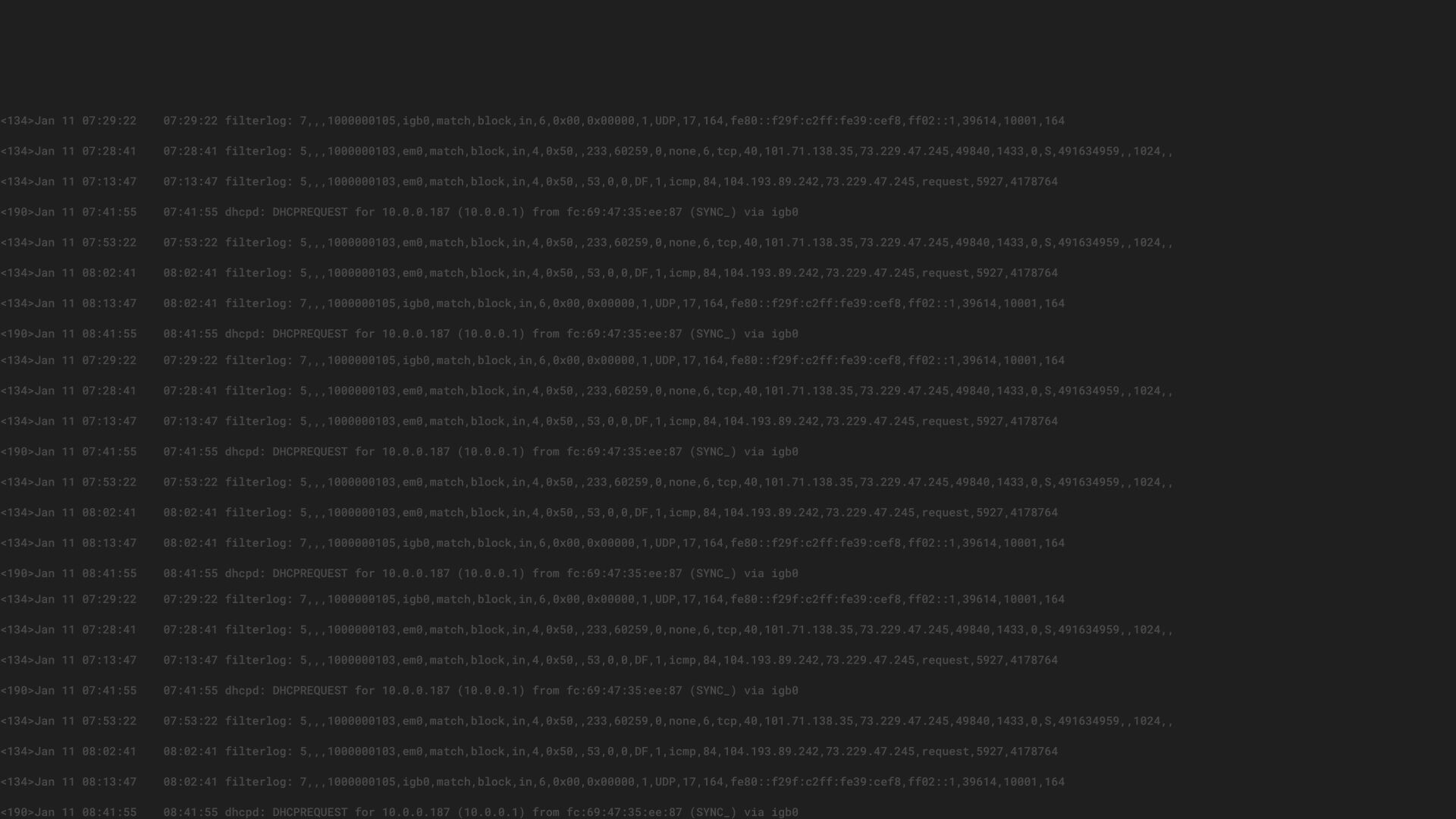

Now run the command, first with the option --dry-run to check what would be changed. The output should be similar to the following:

$ curator --dry-run curator_hw_el_housekeeping.yml
2017-07-27 10:48:53,744 INFO Preparing Action ID: 1, "allocation"
2017-07-27 10:48:53,749 INFO Trying Action ID: 1, "allocation": Apply shard allocation filtering rules to the specified indices
2017-07-27 10:48:53,795 INFO DRY-RUN MODE. No changes will be made.
2017-07-27 10:48:53,795 INFO (CLOSED) indices may be shown that may not be acted on by action "allocation".
2017-07-27 10:48:53,796 INFO DRY-RUN: allocation: graylog_160 with arguments: {'body': {'index.routing.allocation.require.box_type': 'warm'}}
2017-07-27 10:48:53,798 INFO Action ID: 1, "allocation" completed.
2017-07-27 10:48:53,798 INFO Job completed.

If that reflects the changes you want, remove the --dry-run option and apply the allocation.

$ curator curator_hw_el_housekeeping.yml
2017-07-27 10:57:56,552 INFO Preparing Action ID: 1, "allocation"
2017-07-27 10:57:56,556 INFO Trying Action ID: 1, "allocation": Apply shard allocation filtering rules to the specified indices
2017-07-27 10:57:56,582 INFO Updating index setting {'index.routing.allocation.require.box_type': 'warm'}
2017-07-27 10:57:56,623 INFO Health Check for all provided keys passed.
2017-07-27 10:57:56,623 INFO Action ID: 1, "allocation" completed.
2017-07-27 10:57:56,623 INFO Job completed.

And presto! Elasticsearch now allocates the selected indices to the nodes that have box_type set to warm. Depending on the size of those indices, it may take a while for Elasticsearch to finish the allocation.

To determine if that allocation has been set successfully, you can query the API of Elasticsearch.

curl -XGET 'localhost:9200/graylog_*/_settings/index.routing.allocation.require.box_type*/?pretty'

You could also check if the allocation is already finished with the following command.

curl -XGET 'localhost:9200/_cat/shards?pretty' | grep -v STARTED

It will output no shards if all shards are allocated to the nodes and started without any issues.
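If you would rather script this check, for example to wait until the relocation has finished, the same test can be expressed in a few lines. The sketch below assumes the text was fetched from `_cat/shards?h=index,shard,prirep,state,node`, so that the state is the fourth column; the function name is illustrative.

```python
def unstarted_shards(cat_output):
    """List shards whose state is anything other than STARTED.

    Expects lines of the form "<index> <shard> <prirep> <state> [<node>...]".
    Returns (index, shard number, "p" or "r", state) tuples.
    """
    pending = []
    for line in cat_output.splitlines():
        parts = line.split()
        if len(parts) >= 4 and parts[3] != "STARTED":
            pending.append((parts[0], int(parts[1]), parts[2], parts[3]))
    return pending
```

An empty list corresponds to the empty grep output described above; anything else names the shards that are still relocating, initializing, or unassigned.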

AUTOMATE CURATOR

Because a manual process is a process that will fail eventually, you will want to automate the migration and reallocation process. If you feel you have everything in place and have tested your configuration, configure curator to run as a cron job. The cron file can look like the following:

0 12 * * * curator /usr/local/bin/curator --config /home/curator/.curator/curator.yml /home/curator/.curator/curator_hw_el_housekeeping.yml >> /home/curator/cron_log/curator_hw_el_housekeeping.log 2>&1

ADDITIONAL IDEAS

Since version 2.2, Graylog can handle multiple index sets, so you could also use the shard allocation filtering discussed above to pin indices to dedicated Elasticsearch nodes. That would allow you to assign a particular workload to a particular node.

For example, this might allow you to create super fast Elasticsearch nodes that take all your Netflow data but use more modestly sized boxes for all other logs that aren’t coming in at the same volume.
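One possible way to set this up is a second index template that requires a different attribute value for the index set in question. The sketch below builds such a template body in the same shape as the one used earlier in this post. The index prefix netflow_ and the attribute value netflow are purely hypothetical; the dedicated nodes would need a matching node.attr.box_type entry in their elasticsearch.yml, and the function name is made up for this example.

```python
import json


def pinned_template(index_prefix, box_type):
    """Build a template body that pins matching indices to one node group.

    index_prefix: index name prefix of the index set (hypothetical value)
    box_type:     node attribute value the indices should require
    """
    return {
        "template": index_prefix + "*",
        "settings": {
            "index": {
                "routing": {
                    "allocation": {"require": {"box_type": box_type}}
                }
            }
        },
    }


if __name__ == "__main__":
    # Print the JSON so it can be saved to a file and pushed with curl.
    print(json.dumps(pinned_template("netflow_", "netflow"), indent=2))
```

The printed JSON can be stored under its own template name with the same curl command shown in the Graylog preparation section.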

Using this technique does not interfere with your index rotation and index retention, but it might have an impact on the performance of your Elasticsearch cluster and, with that, your larger Graylog environment.

CREDITS

I need to thank @BennacerSamir for this posting and the fearless @ooesili who runs this in production and pointed me to that option.