Cybersecurity teams are no longer circling an AI bubble. Rather, they are staffing inside it, buying within it, and getting measured by it.

This matters because bubbles create a predictable trap: expectations are set higher than teams can actually deliver. Cato Networks CEO Shlomo Kramer recently told Business Insider the market is experiencing an AI bubble driven by heavy investment and AI-driven profit improvements, which he expects to unwind.

A correction will not pause attacker activity. It will not pause audits. It will not pause customer expectations. It will squeeze time, budgets, and patience at the exact moment security operations needs steadiness.

The winning move is preparation that holds up whether the bubble keeps inflating or starts deflating. That preparation looks like execution discipline. Clear scope. Fewer moving parts. Defensible decisions. A measurable path from telemetry to risk reduction.

AI Bubble FOMO is an Implementation Problem that Lands in the SOC

AI FOMO rarely starts in the SOC. It starts as a business narrative. Boards want productivity gains. Leaders see competitors shipping AI features. A tool gets purchased, and the hard part gets handed to IT and security.

Nick Selby writes in Inc. that many AI implementations fail because executives rush deployment without addressing fundamental operational and risk concerns. He also points out that AI tools can be less predictable than traditional enterprise software, which raises the cost of shortcuts.

This pattern shows up in security operations as integration debt.

- New tools produce output that still needs verification

- Vendor security posture becomes a bigger part of your risk profile

- “AI features” create more places for sensitive data to leak

- Analysts spend time reconciling context instead of closing cases

That is how AI FOMO becomes a security operations tax.

How a Bubble Correction Shows Up Inside Security Operations

For CISOs and SOC analysts, a market correction rarely arrives as one dramatic event. It arrives as friction.

Budgets get re-justified line by line. AI copilots face tougher ROI scrutiny. Procurement shifts toward proof. Vendors that depended on hype get consolidated or deprioritized. Teams inherit integrations that never became reliable workflows.

Dark Reading captured the mood shift at the end of 2025, pointing to rising fears about an AI bubble, stock dips, and multiple studies showing companies still falling short of expected ROI from generative AI pilots.

Teams that prepared early keep coverage and credibility even when the market mood changes.

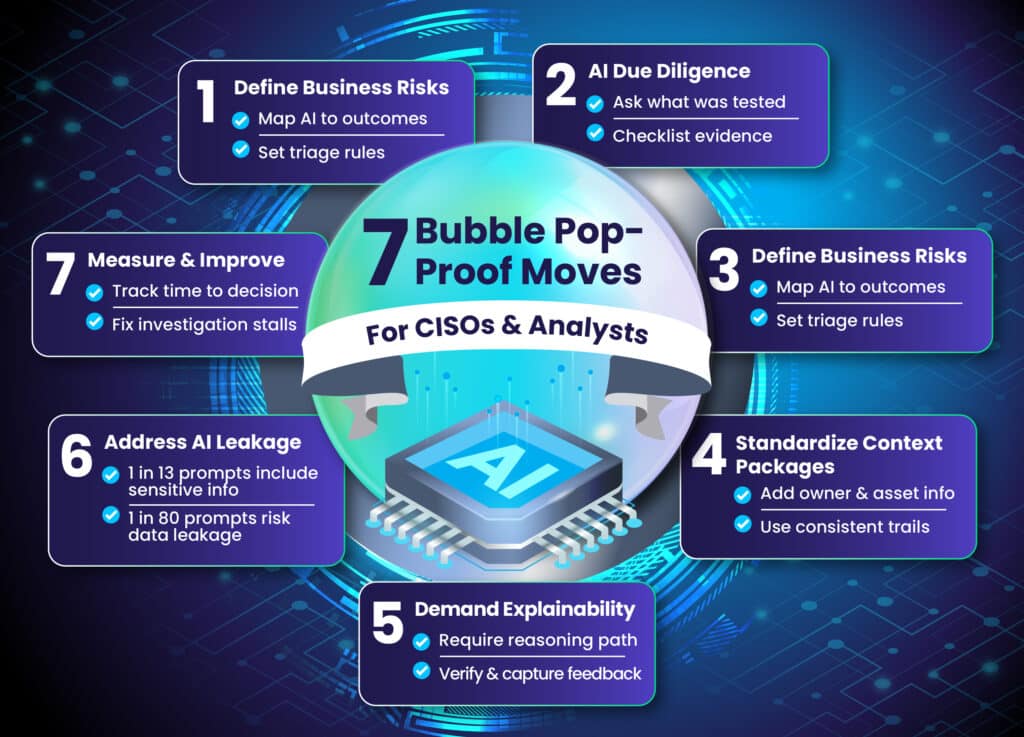

Seven Bubble Pop-Proof Moves for CISOs and Analysts

1. Define the business risks AI must reduce

Prepared programs start with a short list of risks that leadership already recognizes.

- For CISOs

Write down the top business risks security is accountable for, then map AI use to those risks with measurable outcomes. Examples include faster containment of credential abuse, reduced dwell time for high-impact incidents, and shorter audit evidence cycles.

- For analysts

Translate those risks into investigation priorities, triage thresholds, and escalation criteria so the entire team runs the same playbook under pressure.

2. Treat AI due diligence like vendor risk, not feature shopping

AI products often reuse familiar security language. Sometimes the definitions change.

Terms like “red teaming” and “vulnerability management” can mean different things in AI contexts than they do in traditional security practice. That gap creates false confidence during procurement and review.

- For CISOs

Require plain-language definitions of security claims, plus evidence. Ask what was tested, how it was tested, and what data the tool accesses in production.

- For analysts

Maintain a short checklist for new tools and new features. Access scope, logging coverage, decision traceability, and safe failure modes.

3. Build a telemetry plan that survives budget scrutiny

If a tool loses funding, security operations still needs the underlying visibility and evidence.

- For CISOs

Decide what data must be retained, how long it must be searchable, and what query performance investigations require. Budget for predictable retention so compliance and forensics stay stable during a correction.

- For analysts

Push for consistent fields, timestamps, identity context, and asset context across sources. High-quality data reduces ambiguity and eliminates rework.
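As a rough illustration of what "consistent fields" can mean in practice, the sketch below maps source-specific field names onto one shared schema and flags what is missing. The alias table, the field names, and the assumption that numeric timestamps are Unix epoch seconds are all hypothetical; a real pipeline would use whatever schema the team has agreed on.

```python
from datetime import datetime, timezone

# Hypothetical alias table: shared field name -> names seen in different sources.
FIELD_ALIASES = {
    "timestamp": ("timestamp", "ts", "event_time"),
    "user": ("user", "username", "account"),
    "asset": ("asset", "host", "hostname"),
}


def normalize_event(raw):
    """Return a copy of the event that uses only the shared field names."""
    event = {}
    for field, aliases in FIELD_ALIASES.items():
        # Take the first alias present in the raw event, else None.
        event[field] = next((raw[a] for a in aliases if a in raw), None)

    ts = event["timestamp"]
    if isinstance(ts, (int, float)):
        # Assumption: numeric timestamps are Unix epoch seconds.
        event["timestamp"] = datetime.fromtimestamp(ts, tz=timezone.utc).isoformat()
    return event


def missing_fields(event):
    """List the shared fields a normalized event could not populate."""
    return [field for field, value in event.items() if value is None]


example = normalize_event({"ts": 0, "username": "alice", "host": "web-01"})
```

Tracking the output of `missing_fields` per source over time gives analysts a concrete list of gaps to raise, rather than a general complaint about data quality.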

4. Standardize context packages that travel with the alert

Speed comes from having the right context ready, not from having more alerts.

- For CISOs

Set a minimum context standard for high-priority detections. Asset criticality, owner, identity ties, recent changes, and related activity should be part of the default investigation view.

- For analysts

Build repeatable event procedure templates so that the same type of incident draws on the same evidence and follows the same decision trail every time. That consistency removes investigation dead ends.
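One way to make a minimum context standard enforceable is to check each high-priority alert against it before the alert reaches an analyst. The sketch below is a minimal example under stated assumptions: the alert shape, the `context` key, and the field names are hypothetical and only mirror the items listed above.

```python
# Hypothetical field names mirroring the minimum context standard above.
REQUIRED_CONTEXT = (
    "asset_criticality",
    "owner",
    "identity",
    "recent_changes",
    "related_activity",
)


def context_gaps(alert):
    """Return the required context fields that are absent or empty."""
    context = alert.get("context", {})
    return [key for key in REQUIRED_CONTEXT if context.get(key) in (None, "", [])]


def ready_for_triage(alert):
    """An alert is ready when every required context field is populated."""
    return not context_gaps(alert)


alert = {
    "id": "A-1",
    "context": {
        "asset_criticality": "high",
        "owner": "",
        "identity": "alice",
        "recent_changes": ["patch applied"],
        "related_activity": ["failed logins"],
    },
}
gaps = context_gaps(alert)
```

Here `gaps` contains only `"owner"`, so the alert would be routed back for enrichment instead of landing in the queue half-complete.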

5. Make AI explainability and supervision mandatory for action

When a decision affects containment, escalation, or reporting, the reasoning must be visible.

- For CISOs

Earlier machine-learning deployments taught the lesson that security teams do not operate well with black-box data science. Require every AI-driven detection, prioritization, or recommendation to show a reasoning path and supporting evidence. That supports audit readiness, executive reporting, and post-incident review.

- For analysts

Treat opaque scoring as an indicator to validate, not a conclusion to act on. Capture feedback in the workflow so the system improves rather than repeating the same misses.

This is where preparedness pays off. A supervised workflow helps the team move fast without gambling on an untraceable output.

6. Treat AI data leakage and shadow AI as first-class risks

FOMO drives unsanctioned adoption. The SOC ends up defending systems and defending process at the same time.

Cybersecurity Dive reported that one in 13 generative AI prompts contained potentially sensitive information, and one in every 80 prompts posed a high risk of sensitive data leakage. The same report warned about unauthorized AI tools, data loss, and AI platform vulnerabilities as leading risks.

- For CISOs

Set policy and controls for approved tools, data handling, and access boundaries. Monitor usage. Enforce it. Build response paths for AI-driven data exposure.

- For analysts

Watch for patterns that indicate leakage, such as sensitive snippets being pasted into external tools during incidents, or repeated use of unapproved assistants for investigation summaries.
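A simple starting point for spotting sensitive snippets is a pattern scan over text that is about to leave the environment. The sketch below is illustrative only: the three patterns are a small, assumed sample, the function name is hypothetical, and a production control would rely on a maintained data loss prevention ruleset rather than a handful of regular expressions.

```python
import re

# Illustrative patterns only; not a complete or authoritative ruleset.
SENSITIVE_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def flag_sensitive(text):
    """Return the names of the patterns that match the given text."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]


hits = flag_sensitive("Summarize this log: user bob@example.com used key AKIAABCDEFGHIJKLMNOP")
```

A scan like this will produce false positives and misses, so its output is best treated as a prompt for review, not as a verdict.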

7. Measure what holds up in a boom and in a correction

Prepared teams measure decision quality and time-to-clarity, then improve the parts that slow them down.

- For CISOs

Track time to confident decision and time to defensible evidence. Tie these to business impact such as reduced incident scope, reduced customer impact, and improved audit performance.

- For analysts

Track where investigations stall. Missing context, inconsistent fields, tool switching, repeated validation steps. These become the next set of workflow fixes.
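These measures can be computed from ordinary case records. The sketch below assumes a hypothetical record shape (`opened` and `decided` as ISO 8601 strings, plus a list of analyst-tagged `stalls`) and reports the median time to decision alongside the most common stall reasons.

```python
from collections import Counter
from datetime import datetime
from statistics import median


def minutes_to_decision(case):
    """Minutes between a case opening and a confident decision being recorded."""
    opened = datetime.fromisoformat(case["opened"])
    decided = datetime.fromisoformat(case["decided"])
    return (decided - opened).total_seconds() / 60


def summarize(cases):
    """Median time to decision plus stall reasons ranked by frequency."""
    stall_counts = Counter(reason for case in cases for reason in case["stalls"])
    return {
        "median_minutes_to_decision": median(minutes_to_decision(c) for c in cases),
        "top_stalls": stall_counts.most_common(),
    }


# Hypothetical case records for illustration.
cases = [
    {"opened": "2025-01-06T10:00:00", "decided": "2025-01-06T10:30:00",
     "stalls": ["missing context"]},
    {"opened": "2025-01-06T11:00:00", "decided": "2025-01-06T12:30:00",
     "stalls": ["missing context", "tool switching"]},
]
report = summarize(cases)
```

The median is used here instead of the mean so that a single long-running case does not dominate the number reported to leadership.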

Shortcuts compound over time, with “speed” turning into expensive cleanup. The SOC feels that compounding effect first.

How This Lands for Enterprise, Mid-market, and SMB Teams

Enterprise teams face a coordination problem. Multiple SOC pods, distributed environments, and regulatory pressure create conditions where AI can either accelerate decisions or multiply disagreement. Pop-proof execution at scale means shared standards. Shared entity definitions, shared evidence requirements, shared workflows, and early consolidation plans.

Mid-market teams face the sharpest capacity constraint. They deal with sophisticated threats using smaller teams and they feel integration drag quickly. Pop-proof execution means focus. Fewer use cases, stronger data hygiene, tighter workflows, and supervised AI that reduces analyst effort this quarter.

SMBs and lean IT teams win by choosing simplicity and repeatability. Pop-proof execution means a short list of essential detections tied to business risk, clear guidance for triage, and tight control of AI tool usage. Small teams cannot absorb constant tuning.

Staying Effective Inside the Bubble

AI spending cycles will keep evolving. A SOC plan built on execution discipline stays stable regardless. It keeps visibility intact, keeps decisions defensible, and keeps analyst time focused on work that protects revenue, customers, and trust.

Prepared CISOs build a cohesive strategy tied to business risk, evidence, and cost control. Prepared analysts demand context, clarity, and workflows that reduce rework. Both groups win by seeing what matters faster and spending time on the actions that drive outcomes.

Bubbles inflate. Corrections happen. Security operations still has to deliver. Pop-proof plans keep the SOC steady either way.

See explainable AI in Graylog and trace the evidence behind every decision.

Follow Graylog on LinkedIn for practical SOC guidance that holds up past the AI hype cycle.

VP of Product Management