Imagine sitting in an airport’s international terminal. All around you, people are talking to friends and family, many using different languages. The din becomes a constant thrum, and you can’t make sense of anything – not even conversations in your native language.

Log data is similar to this scenario. Every technology in your environment generates log data that records its activity, from logins to processing. However, each technology may use a different log file format or schema.

Data normalization organizes log information into a structured format, making it easier to analyze and interpret. When you understand what data normalization is and how to apply the principles to log data, you can more effectively and efficiently monitor your environment for abnormal activity.

What is data normalization?

Data normalization is the process of transforming dataset values into a common format to ensure consistent and efficient data management and analysis. By organizing data entries uniformly across fields and records, this process streamlines activities like data discovery, grouping, and analysis.

By normalizing data, organizations can conduct more effective investigations with high-level benefits like:

- Improved data consistency and analysis efficiency

- Faster incident investigations

- Enhanced visibility into their environment

- Greater accuracy for data analytics models

What is normalization in logs?

Normalization in logs is the process of standardizing log message formats so that security tools can aggregate, correlate, and analyze the data. The process involves the following components:

- Ingestion: taking the logs from their sources

- Normalization engine: converting the diverse formats

- Normalization rules: applying pre-defined regular expressions

- Customization: allowing for flexible rule application with custom variables

Log normalization’s primary benefit is transforming diverse data formats into a single, standardized format so that security teams can use the data for analytics, visualization, and reporting, often in a security information and event management (SIEM) system.
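As a rough illustration of how normalization rules built on regular expressions can work, the sketch below maps two differently worded login messages onto one shared set of fields. The source names (`sshd`, `webapp`), the message layouts, and the output field names are assumptions made for this example, not the schema of any particular product.

```python
import re
from typing import Optional

# Hypothetical normalization rules: one regular expression per log source.
# Named groups pull source-specific text into a shared set of field names.
RULES = {
    "sshd": re.compile(
        r"(?P<outcome>Accepted|Failed) password for (?P<user>\S+) "
        r"from (?P<src_ip>\d{1,3}(?:\.\d{1,3}){3})"
    ),
    "webapp": re.compile(
        r"login (?P<outcome>success|failure) user=(?P<user>\S+) ip=(?P<src_ip>\S+)"
    ),
}

# Custom variables: translate each source's vocabulary into one set of values.
OUTCOMES = {
    "Accepted": "success",
    "Failed": "failure",
    "success": "success",
    "failure": "failure",
}


def normalize(source: str, message: str) -> Optional[dict]:
    """Return the message in the common schema, or None if no rule matches."""
    rule = RULES.get(source)
    match = rule.search(message) if rule else None
    if match is None:
        return None
    fields = match.groupdict()
    return {
        "source": source,
        "event_type": "authentication",
        "user": fields["user"],
        "src_ip": fields["src_ip"],
        "outcome": OUTCOMES[fields["outcome"]],
    }
```

Calling `normalize("sshd", "Failed password for alice from 203.0.113.7 port 22 ssh2")` and `normalize("webapp", "login failure user=alice ip=203.0.113.7")` produces records with the same field names and the same `outcome` value, which is what lets downstream analytics treat them as the same kind of event.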

What does the normalization process look like for log data?

Most organizations opt for a centralized solution for managing log data normalization. While they follow the rules of data normalization, they typically automate the process around the following outcomes:

- Unify log structures: organizing entries so each log follows standardized formatting guidelines

- Eliminate redundancies: employing rules that remove duplicate data within the log file to reduce data storage costs and improve investigation speed

- Establish unique identifiers: assigning each log entry an identifier to make identification and correlation of security events faster

- Create logical relationships: breaking down complex logs into smaller, related tables so that analytics can correlate information more accurately

- Validate data integrity: checking log entries against the normalization criteria
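Several of these outcomes can be sketched in a few lines, assuming records have already been parsed into dictionaries. The required field names and the choice of a truncated SHA-256 hash as the identifier are assumptions for illustration. The sketch covers validation, duplicate removal, and identifier assignment, and leaves out splitting logs into related tables.

```python
import hashlib
import json
from typing import List, Tuple

# Assumed normalization criteria: every record must carry these fields.
REQUIRED_FIELDS = ("timestamp", "source", "event_type", "user")


def event_id(record: dict) -> str:
    """Derive a stable identifier from the record's content."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def is_valid(record: dict) -> bool:
    """Check that every required field is present and non-empty."""
    return all(record.get(field) not in (None, "") for field in REQUIRED_FIELDS)


def process(records: List[dict]) -> Tuple[List[dict], List[dict]]:
    """Split records into (accepted, rejected), dropping exact duplicates."""
    seen = set()
    accepted, rejected = [], []
    for record in records:
        if not is_valid(record):
            rejected.append(record)
            continue
        identifier = event_id(record)
        if identifier in seen:
            continue  # exact duplicate of a record already accepted
        seen.add(identifier)
        accepted.append({**record, "event_id": identifier})
    return accepted, rejected
```

Because the identifier is derived from the record's content, two byte-for-byte identical entries get the same ID and the second one is dropped. A pipeline that needs to keep repeated events would instead include a sequence number or ingestion time in the hash input.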

What are the challenges of normalizing log file data?

The normalization process poses several key challenges as security teams work to integrate different formats generated by various tools. For example, Windows Event Logs use a proprietary format and contain different fields compared to VPC flow logs. In an environment that contains both Microsoft and AWS technologies, normalizing the log file data is both critical to gaining insights and challenging to complete.

The key challenges security teams face when trying to normalize log data include:

- Diverse log sources: data from different vendors often includes mismatched formats and terminology, which can make finding relationships and dependencies difficult

- Inconsistent standards: log fields have no formal standard, which makes establishing a primary key difficult

- Complexity: differing formats that make identifying primary attributes and other dependencies time-consuming and error-prone

- High data volumes: log sources generating terabytes of data that make maintaining a common format labor-intensive

What are the benefits of log data normalization?

Log data normalization enables you to efficiently manage and analyze large amounts of log information, providing insights based on relationships between the data points. By creating a standardized format across the log data your environment generates, you gain the following benefits:

- Reduced storage costs: ensuring that logs are both compact and efficient by removing redundant or unnecessary fields

- Faster query response time: requiring less processing time by removing redundant or unnecessary fields

- Improved correlation: enabling more accurate comparisons across different technologies and cybersecurity tools by standardizing the formats

Using Log Data Normalization to Improve Cybersecurity

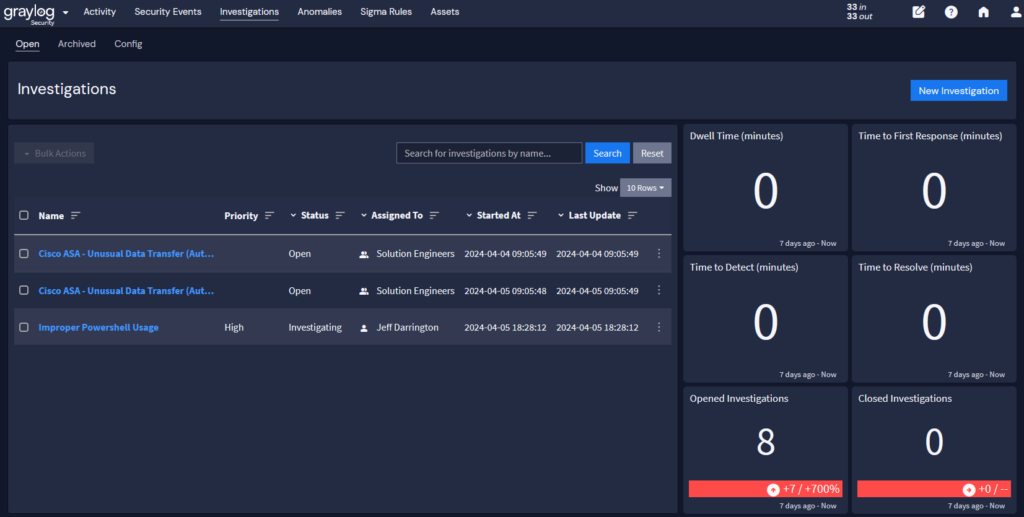

For operations and security teams, log data normalization reduces alert fatigue and enhances key metrics like mean time to detect (MTTD) and mean time to respond (MTTR) by making it easier to correlate data.

Improved risk scoring

With a standardized schema determining how data is formatted, you can add context to the log data, including information like:

- User identity

- Geographical location

- Device details, like hostnames or IP addresses

- Threat intelligence

This data enrichment provides additional context that improves risk scoring. For example, API risk scoring can take into consideration the immediate context regarding the activity and the intensity.
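To make the idea concrete, here is a minimal sketch of enriching a normalized event and scoring it. The lookup tables, the internal network range, and the score weights are all hypothetical values chosen for this example. Geographical lookup is left out because it would need an external database.

```python
import ipaddress

# Hypothetical context sources; a real deployment would query identity,
# asset, and threat intelligence services instead of static tables.
INTERNAL_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]
THREAT_INTEL = {"203.0.113.7"}
USER_DIRECTORY = {"alice": {"department": "finance", "privileged": True}}


def enrich(event: dict) -> dict:
    """Return a copy of the event with added context fields."""
    ip = ipaddress.ip_address(event["src_ip"])
    identity = USER_DIRECTORY.get(event["user"], {})
    return {
        **event,
        "src_is_internal": any(ip in network for network in INTERNAL_NETWORKS),
        "src_on_threat_list": event["src_ip"] in THREAT_INTEL,
        "user_privileged": identity.get("privileged", False),
    }


def risk_score(event: dict) -> int:
    """Add up illustrative weights; the numbers are arbitrary assumptions."""
    score = 0
    if event["src_on_threat_list"]:
        score += 50
    if event["user_privileged"]:
        score += 30
    if not event["src_is_internal"]:
        score += 10
    return score
```

The enrichment step only works because every event exposes `user` and `src_ip` under the same names, regardless of which technology produced it. Without a standardized schema, each lookup would need source-specific handling.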

Reduced alert fatigue

Once your data is normalized, you can improve alert fidelity. When you combine this with a correlation engine that connects events intelligently, you create more detailed event definitions that provide more accurate alerts.

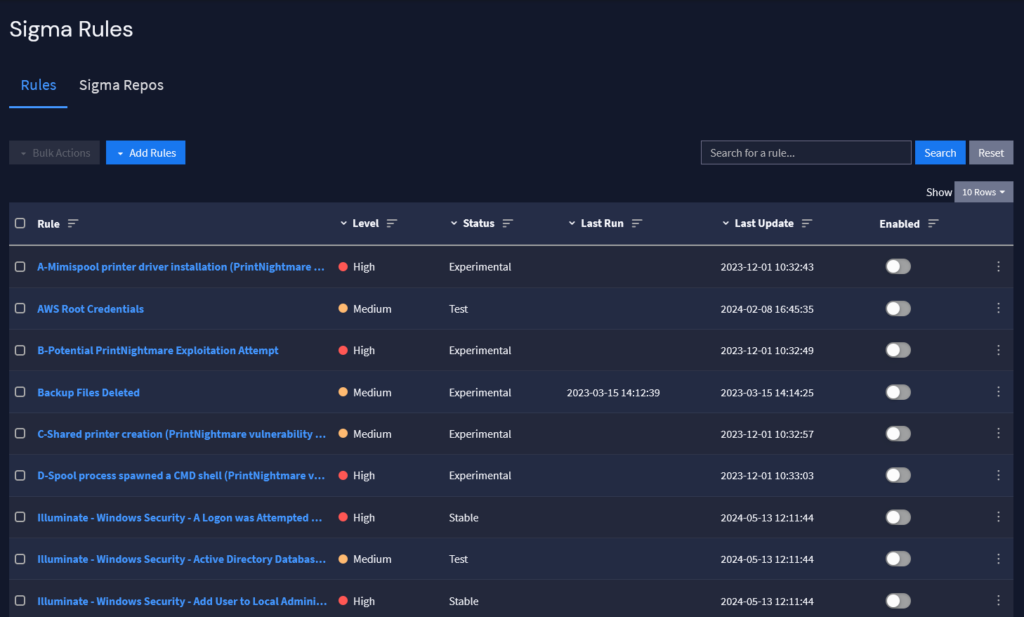

For example, with normalized log data, you can integrate Sigma rules with the MITRE ATT&CK Framework to improve threat detection and incident response (TDIR).

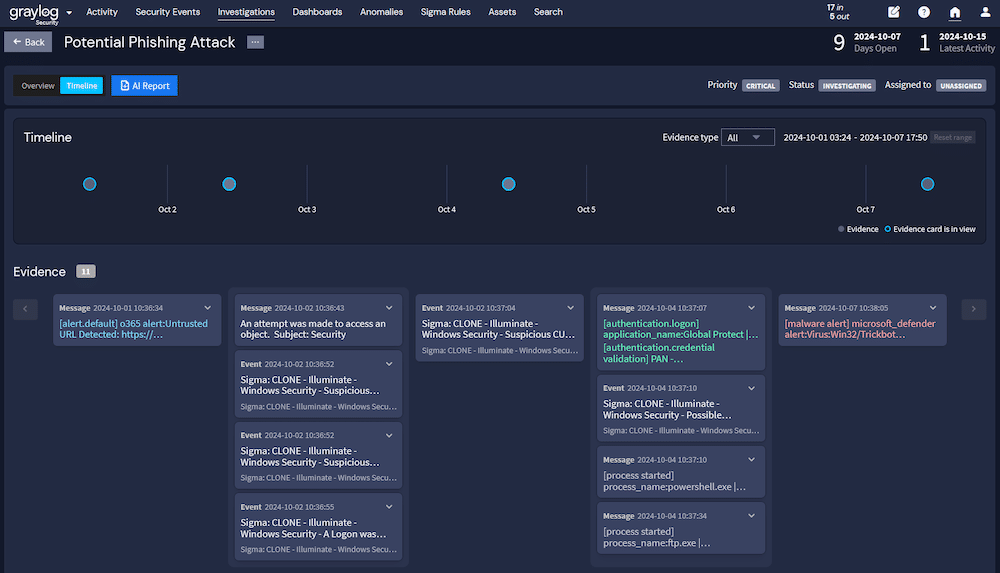
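The kind of event definition a correlation engine works with can be sketched as follows, assuming events are already normalized dictionaries with `timestamp` (epoch seconds), `user`, and `outcome` fields and arrive sorted by time. This is plain Python rather than Sigma syntax, and the window and threshold values are assumptions. The alert is tagged with ATT&CK technique T1110 (Brute Force) to show how a detection can be mapped to the framework.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 300   # assumed correlation window
FAILURE_THRESHOLD = 3  # assumed number of failures that makes a success suspicious


def correlate(events):
    """Yield an alert when a login succeeds after repeated recent failures."""
    recent_failures = defaultdict(deque)
    for event in events:
        failures = recent_failures[event["user"]]
        # Discard failures that have aged out of the correlation window.
        while failures and event["timestamp"] - failures[0] > WINDOW_SECONDS:
            failures.popleft()
        if event["outcome"] == "failure":
            failures.append(event["timestamp"])
        elif event["outcome"] == "success":
            if len(failures) >= FAILURE_THRESHOLD:
                yield {
                    "rule": "success_after_repeated_failures",
                    "attack_technique": "T1110",  # MITRE ATT&CK: Brute Force
                    "user": event["user"],
                    "failure_count": len(failures),
                    "timestamp": event["timestamp"],
                }
            failures.clear()
```

A single failed login followed by a success produces no alert, while several failures inside the window followed by a success produces one. Requiring the combination, rather than alerting on every failure, is what raises alert fidelity.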

Faster investigations

Data normalization enables faster investigation times in two ways. First, the correlation that improves detections also powers your search capabilities once you detect a potential incident. Second, normalized data makes your searches faster because your technology no longer needs to process queries against empty or duplicate log fields.

Finally, the structured data enables you to understand an event’s timeline, which is critical when you have to write a cyber incident report.

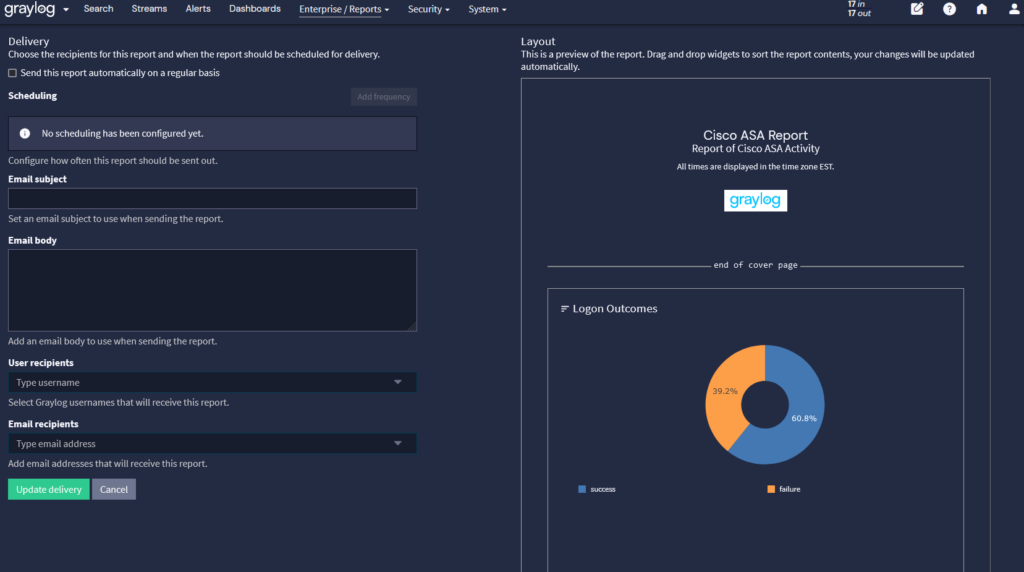

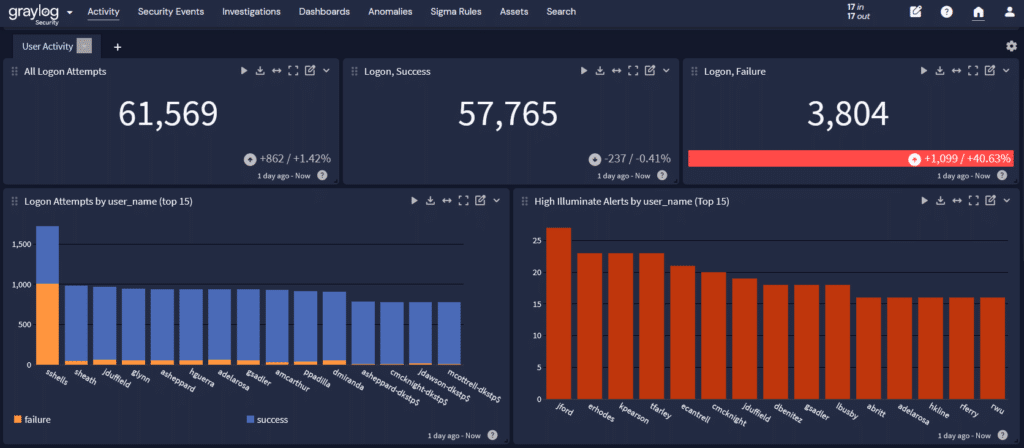
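Building an event timeline depends on timestamps being comparable across sources. The sketch below assumes each event carries a `timestamp` that is either epoch seconds or an ISO 8601 string, and that sources omitting a UTC offset already log in UTC. Both are assumptions you would need to verify per source.

```python
from datetime import datetime, timezone


def to_utc(value) -> datetime:
    """Convert epoch seconds or an ISO 8601 string to an aware UTC datetime."""
    if isinstance(value, (int, float)):
        return datetime.fromtimestamp(value, tz=timezone.utc)
    parsed = datetime.fromisoformat(value)
    if parsed.tzinfo is None:
        # Assumption: sources that omit an offset are already logging in UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)


def build_timeline(events):
    """Return copies of the events sorted by their normalized UTC timestamp."""
    normalized = [{**event, "timestamp": to_utc(event["timestamp"])} for event in events]
    return sorted(normalized, key=lambda event: event["timestamp"])
```

Once every timestamp is an aware UTC value, an event logged as `2024-05-01T08:05:00-04:00` sorts after one logged at `2024-05-01T12:00:00` UTC, even though the local clock time looks earlier.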

Customized dashboards and reporting

The data aggregation and correlation that log normalization enables also give you a way to build visualizations. You can create dashboards around industry standards or other use cases that provide key metrics and data points. They offer real-time visibility into system performance, security threats, and operational trends.

Further, you can transform these dashboards into easy-to-understand reports for:

- Engaging in in-depth analysis

- Providing documentation during compliance audits

- Reviewing historical trends

- Tracking changes over time

- Identifying patterns or anomalies

Graylog Enterprise, Security, and API Security: Normalized Log Data for Comprehensive TDIR

The Graylog Extended Log Format (GELF) allows you to collect structured events from anywhere, and then process them in the blink of an eye. Our log file parsing capability and data normalization with GELF enable you to automate the process of moving from unstructured to structured data, even when data types are absent.
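For readers who have not seen a GELF message, the sketch below builds a GELF 1.1 JSON payload from normalized fields. It reflects the published GELF payload layout as we understand it: `version`, `host`, and `short_message` are required, and custom fields carry a leading underscore. The `to_gelf` helper and its parameters are hypothetical names for this example, and sending the payload to an input is not shown.

```python
import json
import time


def to_gelf(host: str, short_message: str, level: int = 6, **fields) -> str:
    """Build a GELF 1.1 JSON payload from normalized fields."""
    payload = {
        "version": "1.1",
        "host": host,
        "short_message": short_message,
        "timestamp": time.time(),  # seconds since the UNIX epoch
        "level": level,            # syslog severity; 6 is informational
    }
    for name, value in fields.items():
        if name == "id":
            # The GELF specification reserves the additional field "_id".
            raise ValueError("GELF does not allow an additional field named _id")
        payload[f"_{name}"] = value
    return json.dumps(payload)
```

For example, `to_gelf("web-01", "login failure", user="alice", src_ip="203.0.113.7")` yields a message whose custom fields appear as `_user` and `_src_ip`, so they arrive as structured fields rather than as text that has to be parsed later.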

This data normalization process in Graylog Security, combined with our built-in content mapped to mission-critical cybersecurity and privacy requirements, enables you to get more value from your logs, and to do it faster. Our platform offers prebuilt content mapping security events to ATT&CK so you can enhance your overall security posture with high-fidelity alerts for robust threat detection, lightning-fast investigations, and streamlined threat hunting.

To learn how GELF and Graylog can improve your operations and security, contact us today for a demo.