What makes data structured or unstructured, and how does that affect your logging efforts and the information you can gain? Below we compare structured, semi-structured, and unstructured data, and then discuss ways to turn unstructured data into structured data.

What Is the Difference Between Structured, Semi-Structured, and Unstructured Data?

As the names suggest, data comes in various forms that differ in how much structure they have and in the rules that govern how they are stored.

There are three types of data:

Structured

Semi-structured

Unstructured

Structured data

This type of data is highly organized and stored in a predefined format. The most typical examples of structured data are relational databases and spreadsheets.

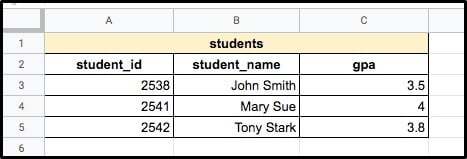

Example:

This is an example of a Google spreadsheet where each column represents a different type of information (student ID, student name, and GPA) and each row represents one student and information related to them.

Semi-structured data

This type of data falls in between structured and unstructured data. There is no formal structure like in relational databases or data tables, but there are tags or other kinds of markers that separate semantic elements and define the hierarchy of records and fields within the data.

Examples of semi-structured data include JSON and XML files.

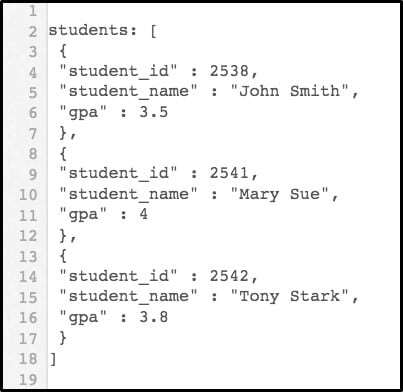

Example:

This is an example of a .json file containing information on three different students in an array called students. Data is represented in name-value pairs separated by commas, and curly braces indicate different objects (in this case, students) within the array.
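A file with this shape is easy to read programmatically because the keys act as markers for each field. The sketch below is a minimal Python illustration; the student IDs, names, and GPA values are made up for the example and are not taken from the image.

```python
import json

# Hypothetical data: three students in an array called "students".
raw = """
{
  "students": [
    {"id": 1, "name": "Alice", "gpa": 3.8},
    {"id": 2, "name": "Bob", "gpa": 3.1},
    {"id": 3, "name": "Carol", "gpa": 3.5}
  ]
}
"""

document = json.loads(raw)
students = document["students"]

# Every value can be addressed by its name, with no guessing about position.
names = [student["name"] for student in students]
average_gpa = sum(student["gpa"] for student in students) / len(students)

print(names)
print(round(average_gpa, 2))
```

No fixed schema is enforced here: one student object could carry an extra field and the file would still parse, which is what separates semi-structured data from a relational table.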

Unstructured data

Unstructured data is all data that isn’t organized in a predefined manner. Examples of unstructured data include web pages, emails, videos, and Facebook posts.

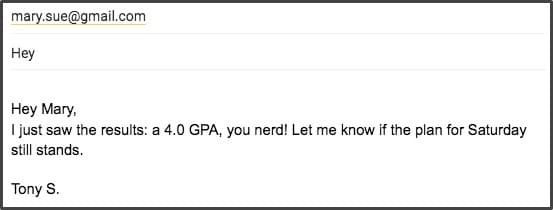

Example:

Although this email contains some information about student names and GPA, it is very hard to extract it because there is no formal structure. Imagine having to manually go through thousands of emails like these trying to extract valuable information – this is why it is important to structure data in order to work with it.

WHAT INFORMATION CAN YOU EXTRACT FROM UNSTRUCTURED DATA USING LOGGING TOOLS?

We live in an era of big data. Some sources of unstructured information now grow at a pace that surpasses our wildest guesses. For instance, an average of 500 million tweets are posted every day, and there are currently over 1.745 billion websites in the world.

Because of this exponential data growth, there are now many complex processes dedicated to extracting information from unstructured data, such as data mining and data analytics.

While there are all sorts of information that can be extracted from data, our focus is on data logs. The information we can get from data logs helps us with root cause analysis, preventing cyberattacks, troubleshooting, etc. We should be able to extract all kinds of useful information – the timestamp of an event, error or user ID, IP address, type of request, and so on.

Many logs are formatted according to Syslog rules, since Syslog is one of the most widely used message logging standards. Unfortunately, some devices generate logs that look very similar to Syslog but don’t comply with its formatting rules. When a parser tries to parse this kind of log, the result is errors and failed information extraction. Unstructured data makes it harder to get the information we need.
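The problem with near-Syslog logs can be sketched in a few lines of Python. The pattern below is a simplified approximation of the BSD-style Syslog layout (RFC 3164), written for illustration only and not a complete implementation of the standard. The second sample line is a hypothetical device log that looks similar but uses a different timestamp layout and has no priority prefix.

```python
import re

# Simplified pattern for "<PRI>Mmm dd hh:mm:ss HOST TAG[PID]: MESSAGE".
# This is an assumption for illustration, not a full RFC 3164 parser.
SYSLOG_RE = re.compile(
    r"^<(?P<pri>\d{1,3})>"
    r"(?P<timestamp>[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) "
    r"(?P<tag>[^\s:\[]+)(?:\[(?P<pid>\d+)\])?: "
    r"(?P<message>.*)$"
)


def parse_syslog(line):
    """Return a dict of fields, or None if the line does not match."""
    match = SYSLOG_RE.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    pri = int(fields.pop("pri"))
    # The priority value encodes facility * 8 + severity.
    fields["facility"], fields["severity"] = divmod(pri, 8)
    return fields


compliant = "<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8"
# Hypothetical near-miss: same content, but no <PRI> and an ISO-like timestamp.
near_miss = "2024-10-11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8"

print(parse_syslog(compliant))
print(parse_syslog(near_miss))
```

The compliant line yields named fields such as `host`, `tag`, and `severity`, while the near-miss returns `None`, so every field it contains stays locked inside an unparsed string.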

HOW CAN YOU TRANSFORM UNSTRUCTURED DATA INTO STRUCTURED DATA?

While the data storage format is crucial, you can also add structure by standardizing the way data is filled out across your organization, for example by making a list of rules about how data is entered. If you depend on outside sources to provide you with information, make sure to standardize the labels and field names they need to fill out. For more details, check out 3 steps to structuring logs effectively.

LOG FILE PARSING

Log file parsing is the process of splitting data into chunks of information that are easier to manipulate, and grouping them in a meaningful way (for example, grouping all timestamps or user IDs together). A parser can easily work with a data format it is familiar with because the format has tags and hierarchy rules that tell the parser what kind of information is stored where. Things get complicated with unstructured data: when logs follow different formatting rules, or no rules at all, the parser can’t read the log file reliably.
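As a rough illustration of splitting and grouping, the following Python sketch parses a few log lines into named fields and then groups them by user ID. The log layout (`timestamp level key=value ...`) and all values are hypothetical and chosen only to keep the example short.

```python
from collections import defaultdict

# Hypothetical log lines in a simple "timestamp level key=value ..." layout.
LOG_LINES = [
    "2024-05-01T10:00:00Z INFO user_id=42 action=login",
    "2024-05-01T10:00:05Z ERROR user_id=7 action=upload",
    "2024-05-01T10:01:10Z INFO user_id=42 action=logout",
]


def parse_line(line):
    """Split one line into a dict of named fields."""
    timestamp, level, *pairs = line.split()
    fields = {"timestamp": timestamp, "level": level}
    for pair in pairs:
        key, _, value = pair.partition("=")
        fields[key] = value
    return fields


def group_by(records, field):
    """Group parsed records by the value of one field."""
    groups = defaultdict(list)
    for record in records:
        groups[record.get(field)].append(record)
    return dict(groups)


records = [parse_line(line) for line in LOG_LINES]
by_user = group_by(records, "user_id")
timestamps = [record["timestamp"] for record in records]

print(sorted(by_user))
print([record["action"] for record in by_user["42"]])
```

This only works because every line follows the same rules. A single line with a different field order would raise an error or produce wrong fields, which is exactly the difficulty with unstructured logs.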

USING LOG MANAGEMENT TOOLS TO TRANSFORM UNSTRUCTURED DATA

Different log management tools use different techniques to try to overcome these differences and enable log file parsing and data analytics for as many different formats as possible.

GELF

Graylog’s first attempt to overcome formatting differences in logs that resemble the Syslog protocol was to create GELF (Graylog Extended Log Format). It is a log format that provides structured logs while avoiding common shortcomings of the Syslog protocol. Developers can write to it directly if they want to add structure to their logs, which removes the need to deal with unstructured data later.
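To give a sense of what a structured GELF message looks like, here is a minimal Python sketch that builds a GELF 1.1 payload as JSON. The helper name `build_gelf`, the host name, and the extra fields are assumptions made for this example; the sketch only builds the payload and does not cover sending it to a Graylog input.

```python
import json
import time


def build_gelf(host, short_message, level=6, **extra):
    """Build a GELF 1.1 message and return it as a JSON string."""
    message = {
        "version": "1.1",
        "host": host,
        "short_message": short_message,
        "timestamp": time.time(),  # seconds since the Unix epoch
        "level": level,            # syslog severity; 6 = informational
    }
    for key, value in extra.items():
        # Additional fields are prefixed with an underscore; "_id" is reserved.
        if key == "id":
            raise ValueError("GELF does not allow an additional field named _id")
        message["_" + key] = value
    return json.dumps(message)


# Hypothetical event with two custom fields.
payload = build_gelf(
    "web-01", "Login failed", level=4, user_id=42, source_ip="203.0.113.9"
)
print(payload)
```

Because the user ID and IP address travel as separate fields rather than inside free text, nothing has to be parsed out of the message afterwards.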

EXTRACTORS AND PIPELINES

Unfortunately, there are so many different devices and formats that it is impossible to create a parser that would work well on all of them. This is why Graylog came up with the concept of extractors. In short, extractors let you instruct Graylog nodes on how to extract data from any text format into message fields. Once data is structured into fields, it is much easier to perform data analysis, search, or any kind of data manipulation. You can create extractors either through Graylog REST API calls or from the web interface using a wizard.
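The idea behind extractors can be sketched in plain Python. This is a conceptual illustration only: it is not Graylog’s implementation and does not use the Graylog REST API. The function `apply_extractors`, the field names, and the regular expressions are all hypothetical.

```python
import re


def apply_extractors(message, extractors):
    """Copy regex matches from the raw text into named message fields."""
    enriched = dict(message)
    for field_name, pattern in extractors.items():
        match = re.search(pattern, message["message"])
        if match:
            enriched[field_name] = match.group(1)
    return enriched


# Hypothetical extractor definitions: target field -> regex with one capture group.
EXTRACTORS = {
    "source_ip": r"from (\d{1,3}(?:\.\d{1,3}){3})",
    "username": r"for user (\w+)",
    "http_method": r"\b(GET|POST|PUT|DELETE)\b",
}

raw = {"message": "Failed password for user alice from 203.0.113.9 port 22"}
structured = apply_extractors(raw, EXTRACTORS)
print(structured)
```

Each rule targets one piece of information, so a rule that does not match (here `http_method`) simply adds no field instead of breaking the whole parse.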

Extractors are one way to parse data, and they are mostly used by new users because they are much easier to implement than other solutions. If you want a more efficient alternative, you can use Pipelines to enrich and parse your data. They allow complete message processing: you can clean up your logs, change field names, or add lookup tables.
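The three operations mentioned above (cleaning up, renaming fields, and enriching from a lookup table) can be illustrated with a small Python sketch. It models a pipeline as an ordered list of functions; it is not written in Graylog’s pipeline rule language, and the step names, field names, and lookup table contents are assumptions for the example.

```python
# Hypothetical lookup table mapping IP addresses to site names.
SITE_LOOKUP = {"203.0.113.9": "branch-office", "198.51.100.4": "headquarters"}


def clean_message(msg):
    """Trim stray whitespace from the raw text."""
    return {**msg, "message": msg["message"].strip()}


def rename_field(old, new):
    """Build a step that renames one field if it is present."""
    def step(msg):
        if old not in msg:
            return msg
        renamed = {key: value for key, value in msg.items() if key != old}
        renamed[new] = msg[old]
        return renamed
    return step


def add_site(msg):
    """Enrich the message with a site name taken from the lookup table."""
    return {**msg, "site": SITE_LOOKUP.get(msg.get("source_ip"), "unknown")}


def run_pipeline(msg, steps):
    """Apply each step in order and return the final message."""
    for step in steps:
        msg = step(msg)
    return msg


PIPELINE = [clean_message, rename_field("src", "source_ip"), add_site]

result = run_pipeline({"message": "  login ok \n", "src": "203.0.113.9"}, PIPELINE)
print(result)
```

The order of the steps matters: the lookup relies on the `source_ip` field, so the rename has to run before the enrichment.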

It is up to you to decide which method to use to structure your data, as long as it proves successful in providing the desired output.