What is log file parsing and how does structuring your logs affect parsing efficiency? Learn the difference between structured and unstructured logs, the basics of the JSON log format, what kind of information you can get when you parse log files, and which tools and utilities to use to perform log file parsing.

STRUCTURED VS. UNSTRUCTURED LOGS

According to Wikipedia, unstructured data is “information that either does not have a pre-defined data model or is not organized in a pre-defined manner.” Log files are typically written as unstructured text by default, which makes finding useful information in them harder and more time-consuming than in structured logs.

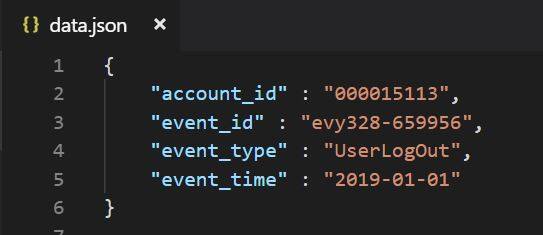

To turn unstructured logs into structured logs, you need to write them in a structured format. JSON (JavaScript Object Notation) is currently one of the most widely used formats for structuring data. Although JSON is derived from JavaScript, it is a language-independent data format: it can be read and written in a variety of programming languages (C, C++, C#, Java, JavaScript, Perl, Python, and others) and is commonly used to transmit data between web applications and servers.

JSON Syntax Basics:

- Each object is represented inside curly braces

- Data within an object is represented in name-value pairs

- Names are always written in double quotes, as are string values (numbers, true, false, and null are not quoted); a name and its value are separated by a colon

- Name-value pairs are separated by a comma

- Arrays are represented with square brackets
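To make these rules concrete, here is a small Python sketch that builds a single log event and serializes it with the standard `json` module. The field names (`timestamp`, `level`, `user`, `status_code`, `retry`, `tags`) are illustrative assumptions, not a required schema.

```python
import json

# A single log event as a Python dict; the field names are illustrative only.
event = {
    "timestamp": "2023-05-04T12:30:00Z",  # string value: double-quoted in JSON
    "level": "ERROR",
    "user": "alice",
    "status_code": 500,                   # number: not quoted
    "retry": False,                       # boolean: serialized as unquoted false
    "tags": ["payment", "checkout"],      # array: square brackets
}

# json.dumps renders the whole event as one JSON object on a single line,
# a common layout for log files (one event per line).
line = json.dumps(event)
print(line)
```

In the printed line, every name is double-quoted, `500` and `false` appear without quotes, and `tags` is rendered as an array in square brackets.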

One of the main advantages of structured logging is that it makes log parsing easier, as we will explain in more detail later on. When you structure your logs, you can choose which custom values (time, error type, error message, user, etc.) to include in them and to search by. Searching by value is possible in unstructured logs as well, but it is a rudimentary technique that is not recommended. The best way to benefit from collected log data is to perform log analysis, which works best with structured logs. In complex systems, unstructured logs can even prevent engineers from finding the cause of an error, because tracking it down may prove too complicated to be practical without log analysis software. Since writing structured logs is just a matter of sticking to a predefined format, it doesn't take more time than writing unstructured ones, which is why you should consider switching to structured logging if you aren't using it yet.
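As a sketch of what writing structured logs can look like in practice, Python's standard `logging` module lets you plug in a custom `Formatter` that renders each record as JSON. The `JsonFormatter` class and the custom field names `user` and `error_type` are assumptions made for this example, not a prescribed format.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each LogRecord as a single JSON object (a minimal sketch)."""

    # Custom fields callers may pass via `extra=`; hypothetical names.
    CUSTOM_FIELDS = ("user", "error_type")

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Copy any custom fields that were attached to this record.
        for field in self.CUSTOM_FIELDS:
            if hasattr(record, field):
                payload[field] = getattr(record, field)
        return json.dumps(payload)


# Write to an in-memory buffer so the example is self-contained.
buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("checkout")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # keep these records out of the root logger

logger.error("Payment declined", extra={"user": "alice", "error_type": "CardDeclined"})
logger.info("Order placed", extra={"user": "bob"})

lines = buffer.getvalue().splitlines()
print(lines[0])
```

Because every line is a complete JSON object, reading the log back only requires calling `json.loads` on each line, with no format-specific patterns.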

Pros of Structured Logs:

- A uniform format that is easy to follow

- Faster log search

- More options for log analysis

- Better use of logged data
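To illustrate the search and analysis points above, the following Python sketch filters a few JSON log lines by field and counts errors by type, then performs the same search on unstructured text with a regular expression. The sample lines and field names are made up for illustration.

```python
import json
import re
from collections import Counter

# Structured log, one JSON object per line (illustrative sample data).
structured = [
    '{"level": "ERROR", "user": "alice", "error_type": "Timeout"}',
    '{"level": "INFO", "user": "bob"}',
    '{"level": "ERROR", "user": "carol", "error_type": "Timeout"}',
    '{"level": "ERROR", "user": "alice", "error_type": "CardDeclined"}',
]

# Search by field: compare values directly, no pattern matching needed.
events = [json.loads(line) for line in structured]
alice_errors = [e for e in events if e["level"] == "ERROR" and e.get("user") == "alice"]

# Analysis: count errors per type in a single pass.
errors_by_type = Counter(e["error_type"] for e in events if e["level"] == "ERROR")

# The same search on unstructured text needs a hand-written pattern,
# which silently stops matching if the line layout changes.
unstructured = [
    "ERROR user=alice Timeout while calling payment service",
    "INFO user=bob order placed",
]
pattern = re.compile(r"^ERROR user=alice\b")
alice_errors_text = [line for line in unstructured if pattern.search(line)]

print(len(alice_errors), errors_by_type.most_common())
# prints: 2 [('Timeout', 2), ('CardDeclined', 1)]
```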

WHAT IS LOG FILE PARSING?

Parsing is the process of splitting data into chunks of information that are easier to manipulate and store. For example, parsing an array means dividing it into its elements. Log file parsing applies the same principle: each log entry contains multiple pieces of information stored as text, and the goal of parsing is to recognize them and group them in a meaningful way (for instance, by grouping all user IDs that appear in a log file).
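A minimal Python sketch of the user ID example, assuming a hypothetical unstructured line format of `<date> <time> <LEVEL> user_id=<id> <message>`: each line is split into named fields with a regular expression, and the messages are then grouped by user ID. Lines that don't match the format are skipped.

```python
import re
from collections import defaultdict
from typing import Optional

# Hypothetical line format: "<date> <time> <LEVEL> user_id=<id> <message>"
LINE_RE = re.compile(
    r"^(?P<date>\S+) (?P<time>\S+) (?P<level>[A-Z]+) "
    r"user_id=(?P<user_id>\d+) (?P<message>.*)$"
)


def parse_line(line: str) -> Optional[dict]:
    """Split one raw log line into named fields; return None if it doesn't match."""
    match = LINE_RE.match(line)
    return match.groupdict() if match else None


raw_log = """\
2023-05-04 12:30:00 INFO user_id=17 login succeeded
2023-05-04 12:31:10 ERROR user_id=42 payment declined
2023-05-04 12:32:45 INFO user_id=17 logout
this line does not follow the format
"""

# Group log messages by user ID.
messages_by_user = defaultdict(list)
for line in raw_log.splitlines():
    fields = parse_line(line)
    if fields is not None:
        messages_by_user[fields["user_id"]].append(fields["message"])

print(dict(messages_by_user))
# prints: {'17': ['login succeeded', 'logout'], '42': ['payment declined']}
```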

A data parser is a piece of software that performs the parsing, which is typically done in two consecutive steps: allocation and population of data structures, followed by execution.

Two Main Parsing Steps:

- Primary – Allocation and population of data structures

- Secondary – Execution (of logic, API calls, etc.) based on data in the structures

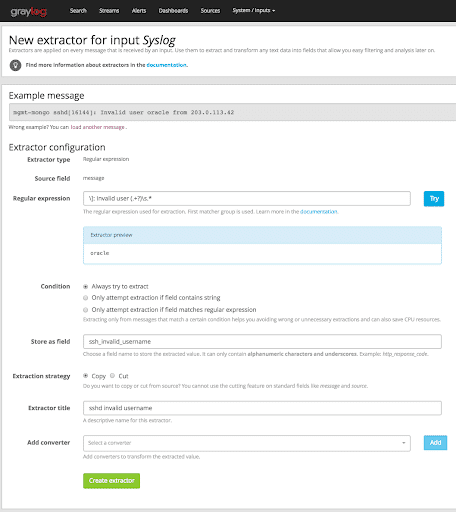
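The two steps can be sketched in Python as follows: the primary step parses raw JSON lines into `LogEntry` objects, and the secondary step runs logic over those objects, here raising an alert when a service logs too many errors. `LogEntry`, the alert threshold, and the `alert` callback (a stand-in for a real API call) are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class LogEntry:
    level: str
    service: str
    message: str


# Step 1 (primary): allocate and populate data structures from raw text.
def populate(raw_lines: List[str]) -> List[LogEntry]:
    entries = []
    for line in raw_lines:
        data = json.loads(line)
        entries.append(LogEntry(data["level"], data["service"], data["message"]))
    return entries


# Step 2 (secondary): execute logic based on the populated structures.
def execute(entries: List[LogEntry], alert: Callable[[str], None], threshold: int = 2) -> None:
    errors_per_service: Dict[str, int] = {}
    for entry in entries:
        if entry.level == "ERROR":
            errors_per_service[entry.service] = errors_per_service.get(entry.service, 0) + 1
    for service, count in errors_per_service.items():
        if count >= threshold:
            alert(f"{service}: {count} errors")


raw = [
    '{"level": "ERROR", "service": "billing", "message": "timeout"}',
    '{"level": "INFO", "service": "billing", "message": "retry ok"}',
    '{"level": "ERROR", "service": "billing", "message": "timeout"}',
    '{"level": "ERROR", "service": "search", "message": "bad query"}',
]

sent: List[str] = []  # stand-in for a real notification API
execute(populate(raw), alert=sent.append)
print(sent)
# prints: ['billing: 2 errors']
```

Keeping the two steps separate means the same populated structures can feed several different kinds of logic (alerts, dashboards, reports) without parsing the text again.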

Not all log file parsers work the same way, and no parser can be universally applied to all log formats (which is another reason to use structured log files). A typical parser automatically parses formats it recognizes, such as JSON or XML, but requires parsing rules for other, non-standard formats. These rules let the user customize how the parser works, for example by defining matching rules and filters. Graylog uses extractors to let users customize log file parsing for data that doesn't come in a standard format.
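A minimal sketch of this behavior, assuming Python: the parser first tries the standard JSON format and, if that fails, falls back to a list of user-defined regular-expression rules, then applies a simple level filter. The rule patterns and sample lines are hypothetical; this illustrates the general idea rather than how any particular product implements it.

```python
import json
import re
from typing import List, Optional, Pattern, Sequence

# User-defined parsing rules for non-standard formats (hypothetical examples).
RULES: List[Pattern[str]] = [
    re.compile(r"^\[(?P<level>[A-Z]+)\] (?P<message>.*)$"),        # "[WARN] disk usage 91%"
    re.compile(r"^level=(?P<level>[A-Z]+) msg=(?P<message>.*)$"),  # "level=ERROR msg=..."
]


def parse(line: str) -> Optional[dict]:
    """Try the built-in JSON format first, then fall back to custom rules."""
    try:
        record = json.loads(line)
        if isinstance(record, dict):
            return record
    except json.JSONDecodeError:
        pass
    for rule in RULES:
        match = rule.match(line)
        if match:
            return match.groupdict()
    return None  # unknown format: no rule matched


def parse_and_filter(lines: Sequence[str], keep_levels=("WARN", "ERROR")) -> List[dict]:
    """Parse every line and keep only records whose level is in keep_levels."""
    parsed = (parse(line) for line in lines)
    return [r for r in parsed if r is not None and r.get("level") in keep_levels]


lines = [
    '{"level": "INFO", "message": "started"}',
    "[WARN] disk usage 91%",
    "level=ERROR msg=connection reset",
    "garbled ??? line",
]
print(parse_and_filter(lines))
# prints: [{'level': 'WARN', 'message': 'disk usage 91%'}, {'level': 'ERROR', 'message': 'connection reset'}]
```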

WHAT ARE GRAYLOG EXTRACTORS?

One of the difficulties that comes with different logging protocols is that no single log parser can accurately read them all. For instance, the syslog protocol has been a de facto standard since the 1980s, but many devices such as routers and firewalls send logs that resemble syslog without complying with all of its format rules. When such logs go through a parser, it can't read them correctly because of these formatting differences. This is why Graylog introduced the concept of extractors, which allow users to instruct Graylog nodes on how to extract data from any text in the received message, regardless of the format. You can learn more about centralizing logs with rsyslog and parsing logs with Graylog extractors in an engaging article by Brendan Abolivier.
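The idea behind an extractor can be illustrated with a short Python sketch: a set of user-supplied patterns is applied to the raw message text, and each match becomes a new named field. This is not Graylog's API (Graylog extractors are configured in Graylog itself); the extractor structure, field names, and the firewall-style sample line are all hypothetical.

```python
import re
from typing import Dict, List

# Hypothetical extractor definitions: the name of the new field plus a regex
# whose first capture group holds the value to extract.
EXTRACTORS: List[Dict[str, str]] = [
    {"field": "src_ip", "pattern": r"\bSRC=(\d{1,3}(?:\.\d{1,3}){3})\b"},
    {"field": "dst_port", "pattern": r"\bDPT=(\d+)\b"},
    {"field": "action", "pattern": r"\b(ACCEPT|DROP|REJECT)\b"},
]


def apply_extractors(message: dict, extractors: List[Dict[str, str]]) -> dict:
    """Return a copy of the message with any extracted fields added."""
    result = dict(message)
    for ex in extractors:
        match = re.search(ex["pattern"], message["message"])
        if match:
            result[ex["field"]] = match.group(1)
    return result


# A router log line that resembles syslog but lacks a standard header.
raw = {"message": "fw01 kernel: DROP IN=eth0 SRC=203.0.113.7 DST=10.0.0.5 PROTO=TCP DPT=22"}
enriched = apply_extractors(raw, EXTRACTORS)
print(enriched["src_ip"], enriched["dst_port"], enriched["action"])
# prints: 203.0.113.7 22 DROP
```

The original message is left untouched, so the extracted fields can be searched alongside the full text.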

UTILITIES USED TO PARSE LOG FILES

The number of tools for automated log parsing has risen in the past few years, following the growing need for log analysis. As systems grow more complex, traditional methods such as manual log file parsing have become impractical, making room for new automated log parsing tools. Because each log parsing tool uses different parsing techniques, performance can vary greatly. The three most common criteria for comparing log file parsing tools are accuracy, efficiency, and robustness. A good way to compare parsing solutions is to evaluate their performance on the same log dataset.
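As a rough sketch of such a comparison, the Python code below runs two hypothetical regex-based parsers over the same small labeled dataset and reports accuracy (the share of lines parsed into the expected fields) and elapsed time as a crude efficiency measure. One line has irregular spacing, a simple stand-in for robustness testing. A real evaluation would use a much larger dataset; timings on four lines are not meaningful.

```python
import re
import time
from typing import Callable, Dict, List, Optional, Tuple

Parsed = Optional[Dict[str, str]]

# Small labeled dataset: raw line -> expected fields (illustrative only).
DATASET: List[Tuple[str, Parsed]] = [
    ("ERROR user=alice timeout", {"level": "ERROR", "user": "alice"}),
    ("INFO user=bob login", {"level": "INFO", "user": "bob"}),
    ("WARN  user=carol slow response", {"level": "WARN", "user": "carol"}),  # double space
    ("no structure here", None),  # expected: not parseable
]


def strict_parser(line: str) -> Parsed:
    """Hypothetical parser that expects exactly one space between tokens."""
    m = re.match(r"^([A-Z]+) user=(\w+) ", line)
    return {"level": m.group(1), "user": m.group(2)} if m else None


def tolerant_parser(line: str) -> Parsed:
    """Hypothetical parser that accepts any run of whitespace between tokens."""
    m = re.match(r"^([A-Z]+)\s+user=(\w+)\b", line)
    return {"level": m.group(1), "user": m.group(2)} if m else None


def evaluate(parser: Callable[[str], Parsed]) -> Tuple[float, float]:
    """Return (accuracy, elapsed seconds) for a parser on the shared dataset."""
    start = time.perf_counter()
    correct = sum(parser(line) == expected for line, expected in DATASET)
    elapsed = time.perf_counter() - start
    return correct / len(DATASET), elapsed


for name, parser in [("strict", strict_parser), ("tolerant", tolerant_parser)]:
    accuracy, seconds = evaluate(parser)
    print(f"{name}: accuracy={accuracy:.2f}, time={seconds * 1e6:.1f} us")
```

Here the strict parser scores 0.75 because it fails on the irregularly spaced line, while the tolerant parser scores 1.00; the same harness works for any parser that maps a line to a field dictionary.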

GitHub is a great source of open source utilities, where you can find various scripts, tools, and toolkits for log file parsing. Commercial log analysis software packages come with advanced parsing utilities that let users customize the parsing process. In conclusion, there is a wide selection of both free and paid log parsing software, so try a few parsing utilities and find the one that suits your needs best.