As IT infrastructure grows more complex with each additional layer, knowing what is happening inside it becomes harder every day.

It is rare for a single person to understand every component; specialists quickly build expertise in specific parts instead. But a few components of every infrastructure are essential to protecting your entire environment. Monitoring is one of them: you need to know what is running and where the potential problems are. Many of those issues can be fixed automatically by actions defined in the monitoring system.

Security, monitoring's neighbor, has similar needs plus a few additional ones. While monitoring focuses on maintaining services and keeping them running, security focuses on understanding what is happening, predicting what might happen next, and taking steps to close holes in the protection of your environment.

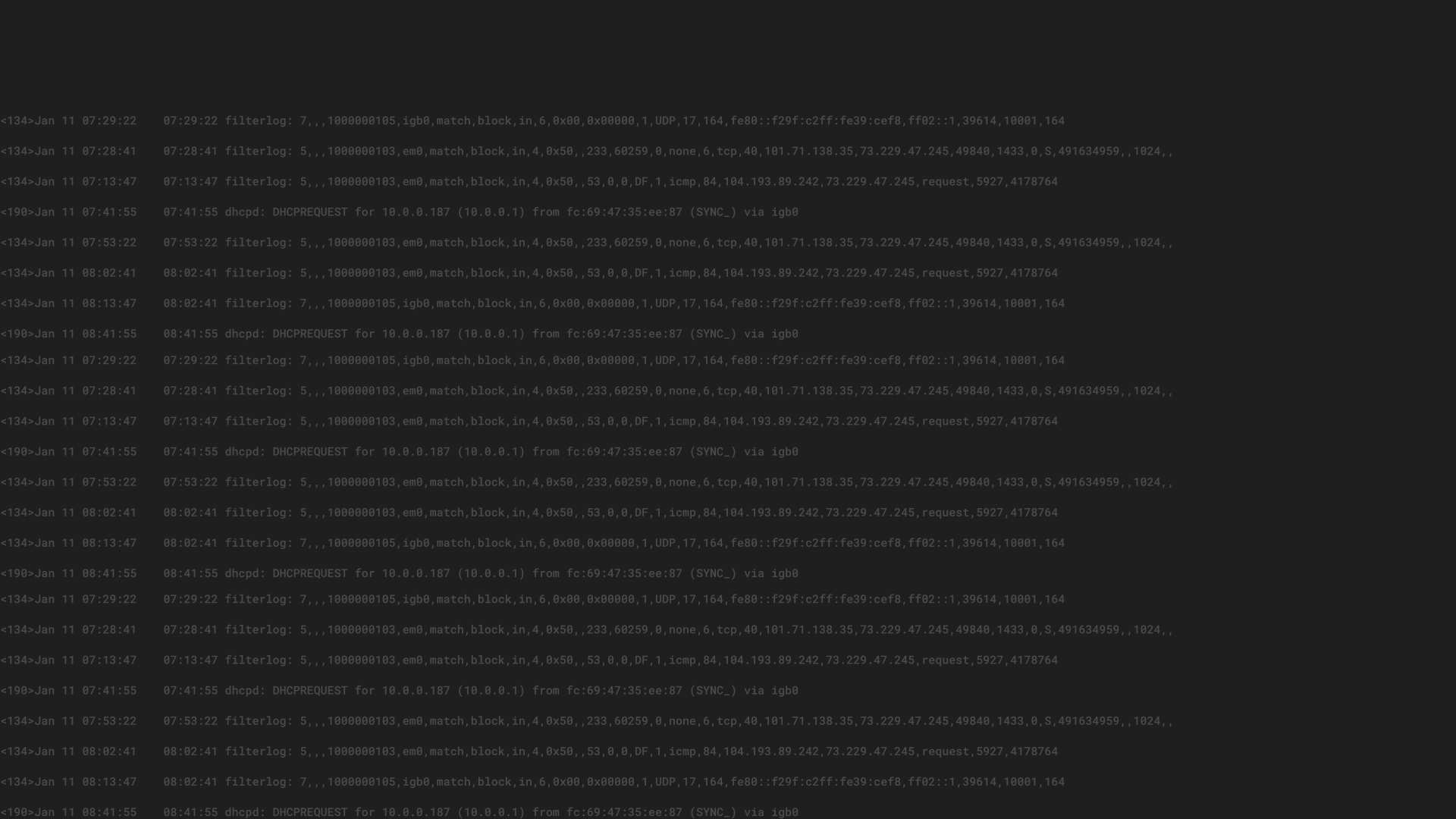

As an example, say we have some log files that contain the status of services we are responsible for. Monitoring watches for failures and knows how to fix them. But security goes further and asks: Why did that failure happen, and how do I keep it from happening again?

Whether those functions are split across different departments and people depends on your organization, but the questions help you define your company’s focus. If you are responsible for operations, both questions matter to you. But how can you answer them?

To monitor a service, you can often send a specific request to check whether it is working. But to answer why something behaves the way it does, you need data. Just as tree rings give you information about trees and the natural environment around them, log files give you information about your IT environment. Having those logs centralized is essential to understanding your whole environment.

DETAILS MATTER

The easiest answer to why you should invest money, manpower, and other valuable resources in log management is that details matter. Some problems might not be a big issue if they happen on a single system. But what if the same small error shows up across your complete fleet of servers? If the issue is critical, alerting should get you out of bed. But what if someone tries to break in by running a brute-force attack against your entire group of servers? Would your monitoring notice and act? The other, perhaps more important, question: Do you think you need to watch for such an issue at all? Isn’t that someone else’s problem?

A single line indicating that someone tried to log in to one of your servers does not matter on its own; thousands of these can be found in every server log file. The key is to compare. Check whether the same IP tried the same thing on other systems. Check whether the same user tried from a different IP. Suddenly it becomes important to compare data from different systems and ask the same questions of all of them. Still no need to have that data centralized? Do you check for this across your fleet of servers? What do you need to do that?
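Once the login failures from every host sit in one place, the two comparisons above reduce to simple grouping. The sketch below assumes the log lines have already been parsed into hypothetical `LoginFailure` records (host, user, source IP); the record type, the example addresses, and the thresholds are illustrative assumptions, not taken from any specific tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginFailure:
    """Hypothetical parsed record of one failed login attempt."""
    host: str  # server that logged the failure
    user: str  # account name that was tried
    ip: str    # source address of the attempt


def suspicious_ips(events, min_hosts=3):
    """Return source IPs that failed to log in on at least `min_hosts` distinct servers."""
    hosts_per_ip = defaultdict(set)
    for e in events:
        hosts_per_ip[e.ip].add(e.host)
    return {ip: hosts for ip, hosts in hosts_per_ip.items() if len(hosts) >= min_hosts}


def users_from_many_ips(events, min_ips=2):
    """Return user names that were tried from at least `min_ips` distinct source IPs."""
    ips_per_user = defaultdict(set)
    for e in events:
        ips_per_user[e.user].add(e.ip)
    return {user: ips for user, ips in ips_per_user.items() if len(ips) >= min_ips}


# Illustrative events, as if collected centrally from several servers.
events = [
    LoginFailure("web1", "root", "203.0.113.7"),
    LoginFailure("web2", "root", "203.0.113.7"),
    LoginFailure("db1", "admin", "203.0.113.7"),
    LoginFailure("web1", "alice", "198.51.100.4"),
    LoginFailure("web3", "alice", "192.0.2.55"),
]

print(sorted(suspicious_ips(events)))       # ['203.0.113.7']
print(sorted(users_from_many_ips(events)))  # ['alice']
```

Neither check proves an attack on its own. The point is that both need data from every host at the same time, which is hard to get by logging in to servers one by one.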

The key point is that you need access to the systems you want to check. You need a role that has the rights to read the files and you need to know where to find them. You have probably written some scripts yourself. Of course, you use a multiplexer to do the same action on multiple hosts at the same time. But what if you are on vacation and your colleague needs to do the same steps you do? Can they create the report or check the system? Do they have access to the same systems?

You can solve multiple problems at once by running a single system that holds all important data, lets you configure who can access what, and serves as the place for your analysis.

Imagine speaking to someone who needs to secure their environment. Wouldn’t one of your first pieces of advice be to minimize the number of people who have access to your systems and restrict rights as much as possible to make those systems secure?

You could argue that developers and many other people need access to different parts of your systems to do their jobs. That creates many possible targets and holes in your otherwise well-secured environment. Deployments should be done by your Continuous Integration and Continuous Delivery platform; no developer needs access to your production systems. Backups should be configured via your configuration management tool; if you log in to a server to configure something manually, you are doing it wrong.

If you set up your environment to use automation in this way, you’ll realize that access to some valuable information is lost: the log files and their content. The only viable solution for accessing this log data in a secure and consistent way is to build a central hub that can handle your system and application log files.
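One way applications can feed such a hub is to forward their log records over syslog. The sketch below uses the standard library's `logging.handlers.SysLogHandler` over plain UDP. The application name `billing-app` and the collector address are placeholders; so that the example runs anywhere, a local UDP socket stands in for the central collector, where a real setup would point at something like `logs.example.com` on port 514.

```python
import logging
import logging.handlers
import socket


def make_central_logger(name, host, port=514):
    """Return a logger whose records are sent to a central syslog collector over UDP."""
    handler = logging.handlers.SysLogHandler(
        address=(host, port),
        facility=logging.handlers.SysLogHandler.LOG_USER,
        socktype=socket.SOCK_DGRAM,
    )
    # Prefix each message with the application name so the collector can tell sources apart.
    handler.setFormatter(logging.Formatter(f"{name}: %(message)s"))
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    return logger


# Local stand-in for the central hub: a UDP socket on an ephemeral port.
collector = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
collector.bind(("127.0.0.1", 0))
collector.settimeout(2)
port = collector.getsockname()[1]

log = make_central_logger("billing-app", "127.0.0.1", port)  # placeholder for the real hub
log.warning("payment gateway timeout")

datagram, _ = collector.recvfrom(4096)
print(datagram)  # b'<12>billing-app: payment gateway timeout\x00'
```

The `<12>` prefix is the syslog priority: facility `user` (1) times 8 plus severity `warning` (4). UDP gives no delivery guarantee, so production setups often prefer TCP, TLS, or a dedicated shipping agent instead.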

But you have more than just application log files. What else do you have in your environment? Your network equipment and storage systems also generate log files, and many of them can send those logs using the syslog protocol, which defines how the messages are structured and transmitted. Your intrusion detection system probably also has interesting data about the traffic in your network that can be contributed to the pool of information. Your electronic key system records who enters which room and when, and can write that to a central database. Your basement has a motion sensor that switches on the lights. Is that connected to some system? Do you have special hardware for your business that is able to write its status somewhere?
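Because syslog gives these devices a shared format, a central system can pull out common fields no matter which device sent the message. As a simplified sketch, the parser below handles the header of an RFC 5424 message and decodes the PRI value, which encodes facility × 8 + severity. It is an illustration only: it does not validate timestamps or parse structured data, and the older BSD-style format (RFC 3164), which many devices still emit, would need its own pattern. The sample line is adapted from an example in RFC 5424.

```python
import re

# Header layout from RFC 5424: <PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID ...
SYSLOG_5424 = re.compile(
    r"^<(?P<pri>\d{1,3})>(?P<version>\d{1,2}) "
    r"(?P<timestamp>\S+) (?P<hostname>\S+) (?P<app_name>\S+) "
    r"(?P<procid>\S+) (?P<msgid>\S+) ?(?P<rest>.*)$"
)

# Severity names in numeric order 0..7, as listed in RFC 5424.
SEVERITIES = ["emergency", "alert", "critical", "error",
              "warning", "notice", "informational", "debug"]


def parse_syslog(line):
    """Split an RFC 5424 line into header fields; return None if it does not match."""
    m = SYSLOG_5424.match(line)
    if m is None:
        return None
    fields = m.groupdict()
    pri = int(fields.pop("pri"))
    fields["facility"] = pri // 8  # e.g. 4 = security/authorization messages
    fields["severity"] = SEVERITIES[pri % 8]
    # "rest" still holds the structured data (or "-") followed by the free-text message.
    return fields


line = ("<34>1 2003-10-11T22:14:15.003Z mymachine.example.com "
        "su - ID47 - 'su root' failed for lonvick on /dev/pts/8")
record = parse_syslog(line)
print(record["hostname"], record["facility"], record["severity"])
# mymachine.example.com 4 critical
```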

MASH UP YOUR INFORMATION

With all that data in a central system, you have a clearer window into what is going on in your environment, including its physical aspects. You might be able to spot a sequence such as a specific person opening a door, the basement light switching on in response to that movement, and a few minutes later, your central server reporting a broken RAID. Without the additional information from the central log management system, you might only notice a day later that a disk is missing and order a new one. With the extra data, you can see that a specific person likely removed a hard disk, and you can investigate why.
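Once events from the door system, the sensors, and the servers are normalized into one store, this kind of correlation becomes a time-window query. The sketch below is a hypothetical illustration: the `Event` record, the source names, the badge numbers, and the 15-minute window are all assumptions, and a real log management system would run this as a search over indexed data rather than over a Python list.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    """Hypothetical normalized event, whatever system it originally came from."""
    time: datetime
    source: str   # e.g. "door-access", "basement-sensor", "raid-controller"
    message: str


def events_before(events, anchor, window=timedelta(minutes=15)):
    """Return events from other sources within `window` before `anchor`, oldest first."""
    start = anchor.time - window
    related = [e for e in events
               if e.source != anchor.source and start <= e.time <= anchor.time]
    return sorted(related, key=lambda e: e.time)


t0 = datetime(2024, 5, 6, 22, 0)
events = [
    Event(t0, "door-access", "badge 4711 opened server room"),
    Event(t0 + timedelta(minutes=1), "basement-sensor", "motion detected, lights on"),
    Event(t0 - timedelta(hours=3), "door-access", "badge 1234 opened server room"),
]
raid_alert = Event(t0 + timedelta(minutes=6), "raid-controller", "array degraded: disk 3 missing")

for e in events_before(events, raid_alert):
    print(e.time.strftime("%H:%M"), e.source, e.message)
# 22:00 door-access badge 4711 opened server room
# 22:01 basement-sensor motion detected, lights on
```

The door event from three hours earlier falls outside the window and is ignored; the choice of window size is a trade-off between missing slow sequences and drowning in unrelated noise.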

The data collected from various log files around your IT landscape might not seem connected. It’s the central log management system that brings it all together to paint the bigger picture, creating knowledge to help you better protect your business and maximize your overall investment.