Getting the right information at the right time can be a difficult task in large corporate IT infrastructures. Whether you are dealing with a security issue or an operational outage, the right data is key to preventing further breakdowns. With central log management, security analysts and IT operators have a single place to access server log data. But what happens if the one log file that is urgently needed is not collected by the system?

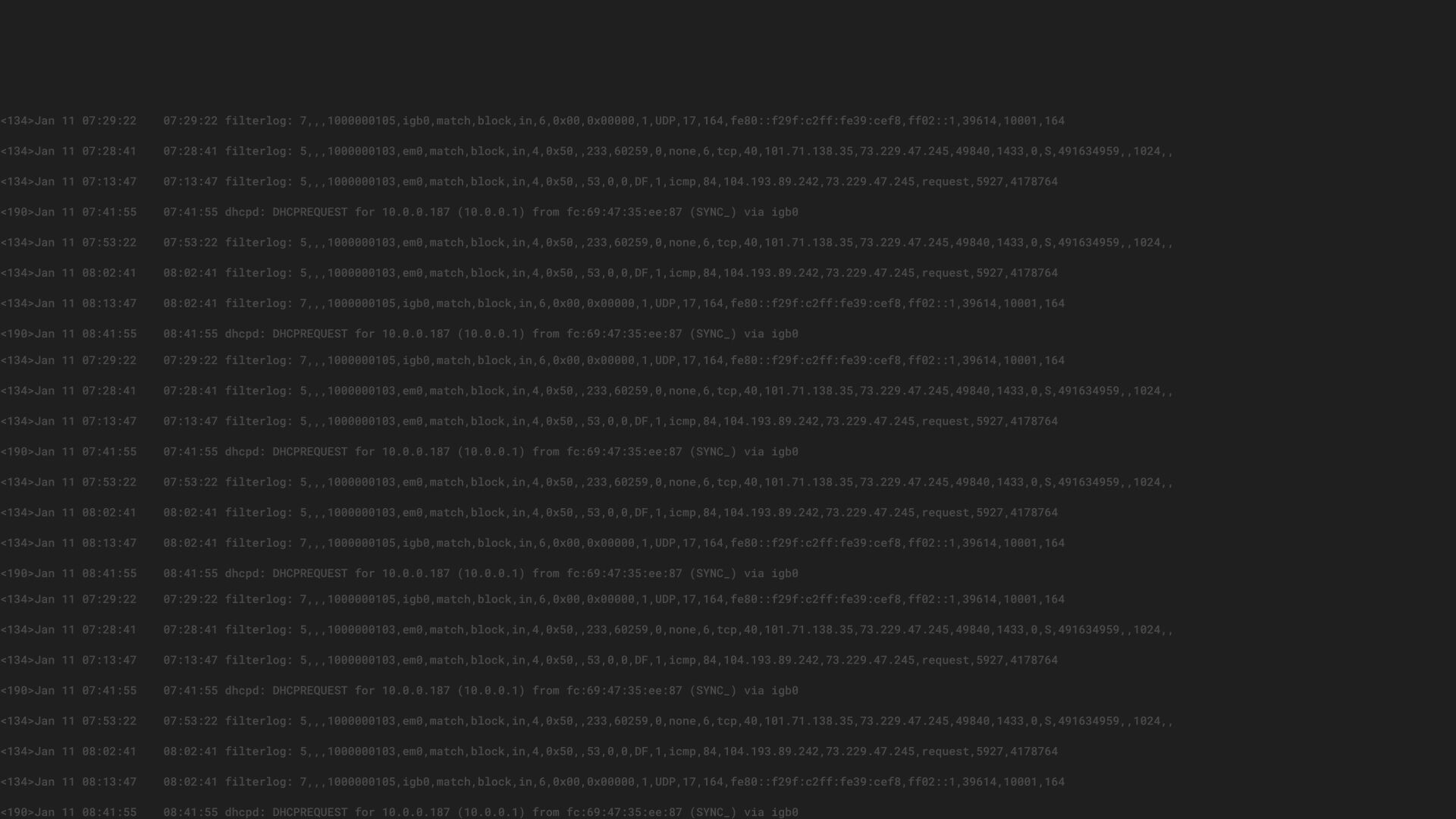

Another challenge is that it might not be clear which system is part of a problem. Maybe some abnormal behavior is detected on a server, but how do you know which service is involved? In other words, where is log activity happening where there is usually silence? This uptick in activity could be an indicator of a security breach or an operational issue. For Windows shops, this could be a large number of Windows event logs about a malware-infected host trying to connect to a command-and-control server. On a Unix machine, it could be a brute-force attack trying to guess user passwords, where a flood of login attempts swamps the authentication log file. Or it could simply be a failed application that can no longer connect to its database and therefore logs countless error messages.

Generally speaking, some kind of log collector is reading log files, preprocessing the data, and forwarding everything to the central log server. These collectors need some configuration to know which files exactly they should observe for new log entries. If you are lucky, the collector configuration is deployed via a configuration management system like Puppet, Ansible, or Chef so that a change is automatically deployed to the fleet. However, getting a change into the collector configuration can be a tedious task. Maybe another department is responsible for implementing the change. Maybe there is no direct access to the configuration management system for the security analyst. For various reasons, it can be complicated to get a small change implemented swiftly.

Graylog not only integrates access to log data, but also the settings for how the logs should be collected. The Sidecar is a small process running on the node machines that periodically fetches configurations from the Graylog server. In the case of a configuration update, the Sidecar renders the new file and restarts the actual collector with the given configuration. With this setup, it’s possible to react quickly to new requirements. If an untracked file is needed, a user can simply give the path to the file in the Graylog web interface and after just a few seconds, the data is available.
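The fetch, render, and restart cycle described above can be illustrated with a short Python sketch. This is not the Sidecar's actual implementation: `apply_if_changed`, `poll`, and the `fetch` and `restart` callables are hypothetical names chosen for illustration, and the sketch assumes the rendered configuration arrives as a plain string.

```python
import hashlib
import time
from pathlib import Path
from typing import Callable, Optional


def apply_if_changed(rendered: str, target: Path, restart: Callable[[], None]) -> bool:
    """Write the rendered config and restart the collector only if it differs.

    Returns True when the file was (re)written and the restart hook was called.
    """
    new_bytes = rendered.encode("utf-8")
    if target.exists():
        old_digest = hashlib.sha256(target.read_bytes()).hexdigest()
        if old_digest == hashlib.sha256(new_bytes).hexdigest():
            return False  # nothing changed, leave the collector running
    target.write_bytes(new_bytes)
    restart()  # hypothetical hook, e.g. a wrapper around the service manager
    return True


def poll(
    fetch: Callable[[], str],
    target: Path,
    restart: Callable[[], None],
    interval_seconds: float = 10.0,
    max_rounds: Optional[int] = None,
) -> int:
    """Periodically fetch the config and apply it; returns the number of restarts.

    `fetch` is a hypothetical callable that returns the rendered configuration.
    `max_rounds=None` means the loop runs until the process is stopped.
    """
    restarts = 0
    rounds = 0
    while max_rounds is None or rounds < max_rounds:
        if apply_if_changed(fetch(), target, restart):
            restarts += 1
        rounds += 1
        time.sleep(interval_seconds)
    return restarts
```

Comparing content hashes before writing keeps the collector from being restarted on every poll, so a restart only happens when the configuration really changed.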

But the Sidecar doesn’t stop there. It also provides general metrics about the node it’s running on. For example, it can monitor a directory with log files and highlight the file that was most recently written to, so an administrator can spot odd-looking activity on a node and react to it. In the same way, it collects general host metrics like CPU and disk utilization, so typical error cases like disks without free space or CPU-blocking processes can be debugged within Graylog.
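As a rough illustration of these two kinds of metrics, the following Python sketch finds the most recently written file in a directory and computes disk utilization. It uses only the standard library; the function names are hypothetical and say nothing about how the Sidecar gathers its metrics internally.

```python
import shutil
from pathlib import Path
from typing import Optional


def most_recently_written(directory: Path) -> Optional[Path]:
    """Return the regular file in `directory` with the newest modification time.

    Only direct children are considered; returns None for a directory
    that contains no regular files.
    """
    files = [p for p in directory.iterdir() if p.is_file()]
    if not files:
        return None
    return max(files, key=lambda p: p.stat().st_mtime)


def disk_used_percent(path: str) -> float:
    """Return the used share (0 to 100) of the file system that holds `path`."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    if usage.total == 0:
        return 0.0
    return 100.0 * usage.used / usage.total
```

Calling `most_recently_written(Path("/var/log"))` on a typical Unix host would return whichever log file was written to last, which is the kind of hint that points an administrator at unusual activity.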

On the server side, the Sidecar is currently implemented as a Graylog plugin that ships a couple of REST endpoints. The node, on the other hand, connects to the server through the REST API. In the upcoming Graylog 3.0 release, the Sidecar will allow administrators to use standard HTTP technology to connect all parts. Authentication via access tokens, encryption with HTTPS, and caching via ETags are all done with well-known web technologies. We will also extend the concept and make it significantly more flexible. With the new release, it will be possible to configure any option the actual collector supports, as well as to integrate your own log-collecting programs.
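To show how ETag-based caching works in general, here is a minimal Python sketch of a conditional HTTP request using only the standard library. It is a generic HTTP example, not Graylog's API: the URL, the `fetch_if_changed` name, and the `extra_headers` parameter (where a caller could place an authentication header) are assumptions made for illustration.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Optional, Tuple


def fetch_if_changed(
    url: str,
    etag: Optional[str],
    extra_headers: Optional[Dict[str, str]] = None,
    opener: Callable = urllib.request.urlopen,
) -> Tuple[Optional[bytes], Optional[str]]:
    """Fetch `url` unless the server says the cached copy is still current.

    Returns (body, new_etag) when the resource changed, or (None, etag)
    when the server answers 304 Not Modified.
    """
    request = urllib.request.Request(url)
    if etag is not None:
        # Ask the server to skip the body if our cached version is current.
        request.add_header("If-None-Match", etag)
    for name, value in (extra_headers or {}).items():
        request.add_header(name, value)  # e.g. an authentication header
    try:
        with opener(request) as response:
            return response.read(), response.headers.get("ETag")
    except urllib.error.HTTPError as err:
        if err.code == 304:  # urllib reports 304 as an HTTPError
            return None, etag
        raise
```

A client would store the returned ETag and pass it on the next poll, for example `body, tag = fetch_if_changed("https://graylog.example.org/config", tag)` with a placeholder URL. A `None` body then means the configuration is unchanged and nothing needs to be re-rendered.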