We would like to introduce a new blog series that takes you back to the basics of Graylog. Written by your Graylog engineers, these installments take a deep dive into the main components of our platform, from installation and configuration to data ingestion and parsing, scaling, and more. The series can be read alongside, and as an extension of, the Graylog Documentation. We want to equip newcomers with the knowledge to jump-start an installation and give Graylog pros a few new tips.

Let’s get started!

OVERVIEW

In this post, we will focus on connecting Graylog Sidecar with Processing Pipelines. As a refresher, Sidecar allows for the configuration of remote log collectors, while the pipeline plugin allows for greater flexibility in routing, blacklisting, modifying and enriching messages as they flow through Graylog. We will use Filebeat to send log files over to Graylog. Next, we will enrich the messages so that we can use Graylog’s advanced search query features.

For this essential task of collecting and parsing remote log files, we will use the not-so-standard MySQL slow query log as an example. Everything we say about MySQL applies equally to any fork that uses the default MySQL slow query log format.

GETTING THE LOGS

To collect the logs, we are going to install Graylog Collector Sidecar on the server where MySQL is running. The Sidecar is a lightweight supervisor application for various log collectors that lets you centralize their configuration. The Filebeat collector is included and does not need additional installation or configuration.

You should configure the Sidecar with your Graylog API URL, a descriptive node_id, and a tag mysql that we will use later. In this setup my node_id is horrex.fritz.box, so wherever you see that name, expect your own node_id instead.

If you have not done so already, install the Graylog Beats input plugin on your Graylog Server and start a Beats Input. We will assume that this Input is named Beats and is listening on Port 5044.

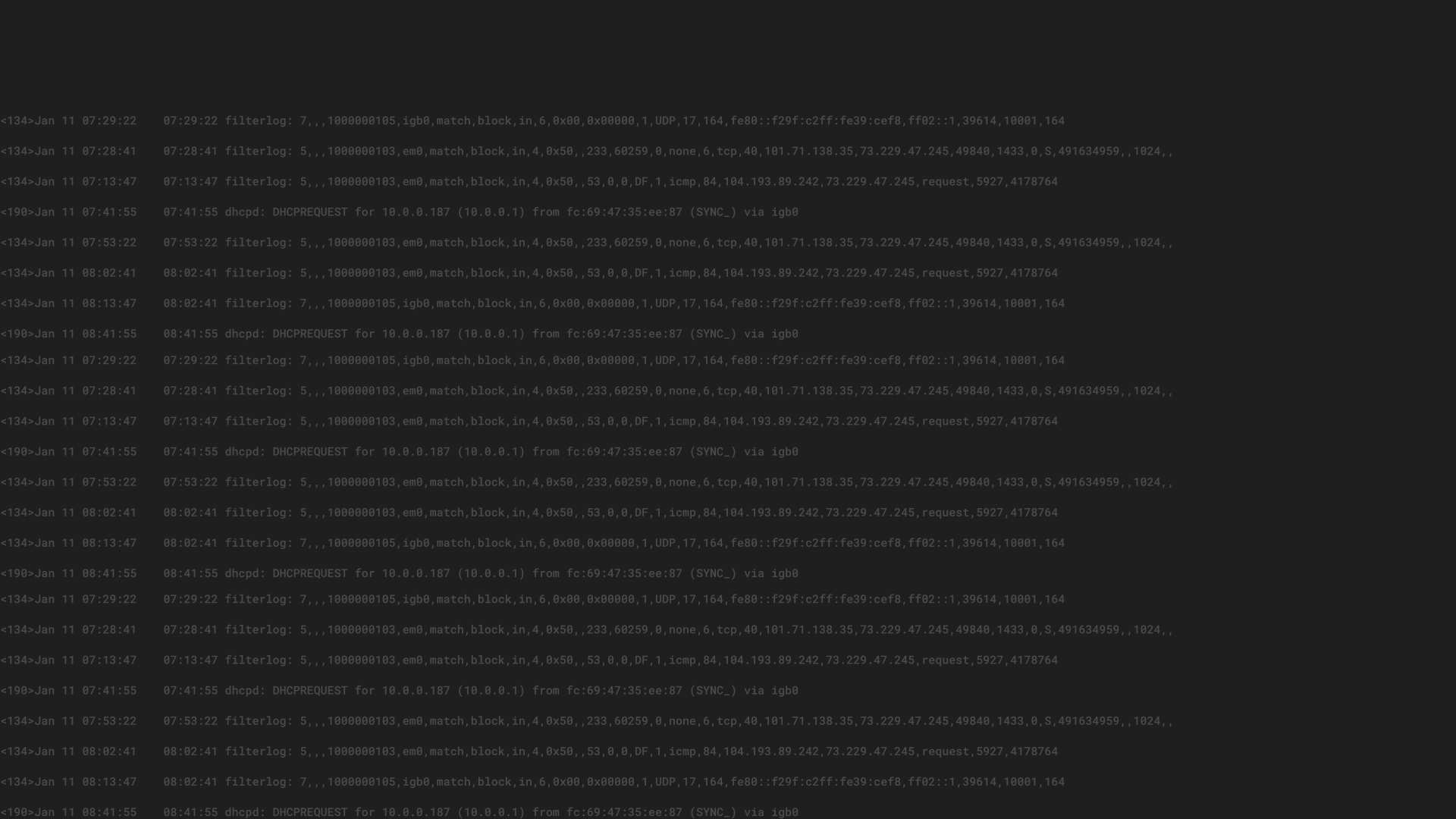

Now we are going to configure the Collector to obtain the MySQL slow query log files. Navigate to System / Collectors in your Graylog web interface and you will notice that the Collector is already present, but has a status of “failed.” Do not worry, this is just because we have not configured anything yet.

To better understand the next step, know that you do not configure one specific Collector Sidecar in Graylog. Instead, you create a configuration and assign a tag to it. If this tag matches a tag that is configured on any available collector, the configuration will be used on the server where that Collector Sidecar is running. Returning to our example, we will create a configuration for the tag mysql.
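The tag matching described above can be pictured with a small Python sketch. This is only a conceptual model, not Graylog's actual implementation; the function name `configurations_for` and the sample data are hypothetical and exist purely for illustration.

```python
def configurations_for(collector_tags, configurations):
    """Return the names of configurations that share at least one tag with the collector."""
    tags = set(collector_tags)
    return [name for name, config_tags in configurations.items()
            if tags & set(config_tags)]


# Hypothetical data: configuration name -> tags assigned to it in Graylog.
configurations = {"mysql": ["mysql"], "nginx": ["nginx", "web"]}

# Tags configured on the Sidecar of one server (assumed for this example).
print(configurations_for(["mysql"], configurations))
```

A server whose Sidecar carries no matching tag receives no configuration, which is why the collector shows up as failed until a configuration with the tag mysql exists.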

Following our step-by-step guide in the Documentation, we create a new configuration and give it the name mysql. Then we create a Beats Output of type filebeat and enter our Graylog Server IP or name, together with port 5044, in the hosts field.

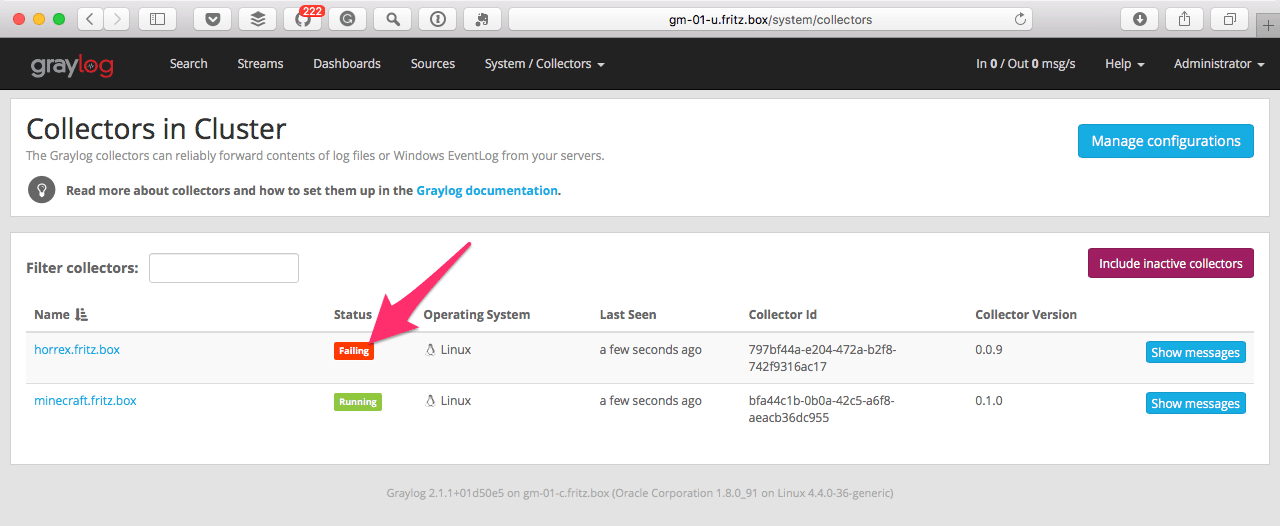

Once completed, we are ready to give the Collector the information on where it can find the logfile and what should be done with it. To do this, we will create a Beats Input.

Let’s dive into this a little deeper. You will need to name the input and choose the output it forwards to. You will also need the path to your logfile. On our system it is /var/log/mysql/mysql-slow.log. The Type of input is mysql-slow. This way, we can search for this input type and apply modifications only to these messages. Note that the checkboxes need to be marked. The Start pattern of a multiline message is ^#|;$ and now we can save.
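To get a feel for the pattern ^#|;$, the following Python sketch checks which lines of a slow query log it matches: lines that begin with # or end with a semicolon. The log excerpt is invented for illustration, and the sketch does not model how Filebeat combines matching lines into one message, since that depends on the multiline checkboxes.

```python
import re

# Start pattern from the text: a line that begins with "#" or ends with ";".
START_PATTERN = re.compile(r"^#|;$")

# Hypothetical slow query log excerpt (invented for this example).
SAMPLE_LOG = """\
# Time: 2017-03-01T10:00:00.000000Z
# User@Host: app[app] @ localhost []  Id:     3
# Query_time: 2.000231  Lock_time: 0.000000 Rows_sent: 1  Rows_examined: 0
SET timestamp=1488362400;
SELECT id
FROM orders
WHERE total > 100;
"""


def classify(lines):
    """Return (line, matched) pairs, one for each input line."""
    return [(line, bool(START_PATTERN.search(line))) for line in lines]


results = classify(SAMPLE_LOG.splitlines())
for line, matched in results:
    print("match   " if matched else "no match", line)
```

Only the two middle lines of the multi-line SELECT statement fail to match, which is what lets the collector tell header and terminating lines apart from query continuation lines.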

Lastly, we add the tag mysql to the configuration.

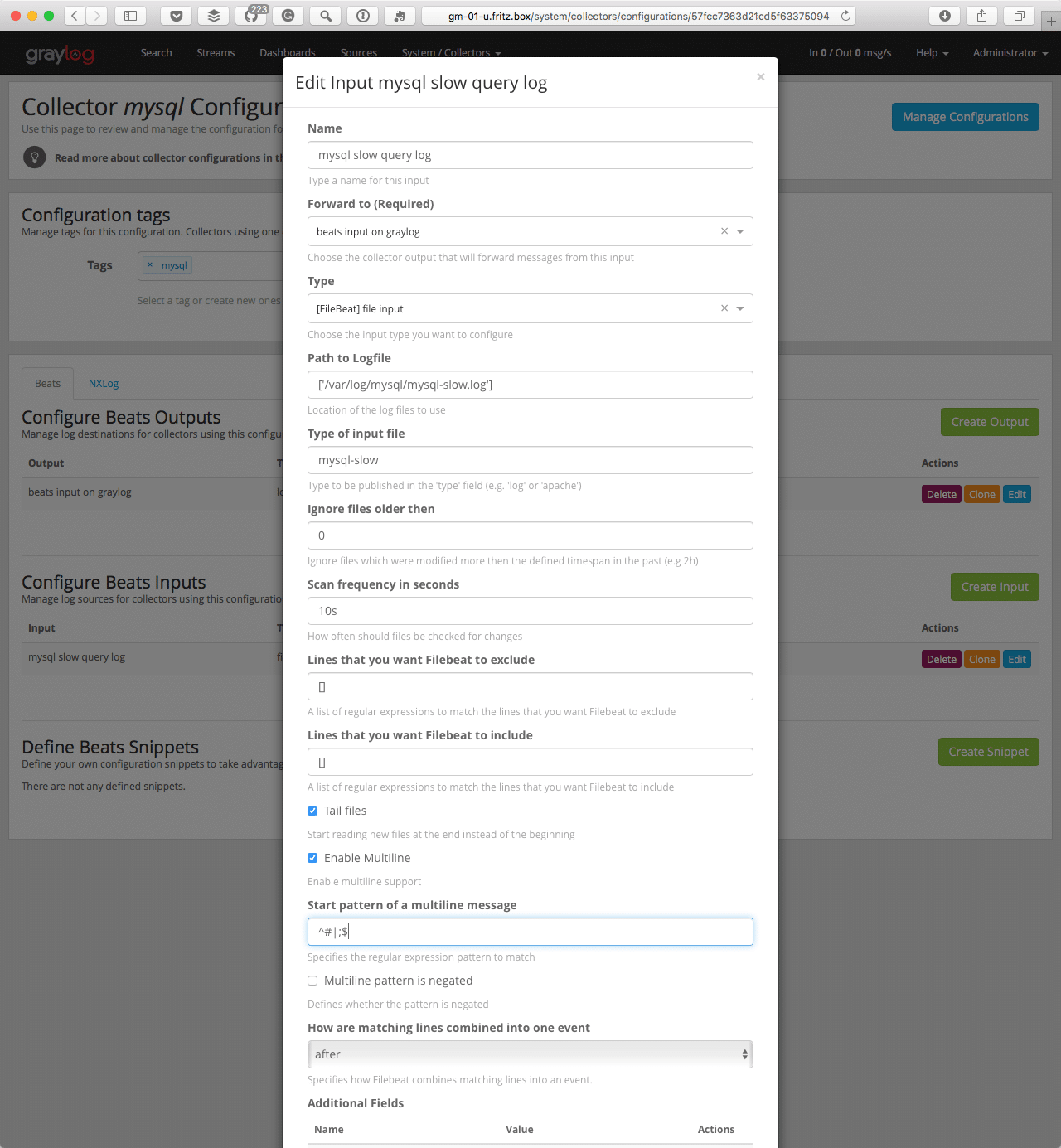

Now when we return to the Collector Page, the status of our collector is ‘running.’ All new messages will be visible in Graylog.

Next we process these messages.

PROCESSING PIPELINES

The main goal of processing is to extract information from the messages so that we can modify or enrich them and make the resulting fields available in a Dashboard.

We will create a Processing Pipeline rule to extract the information out of the Multiline Message.

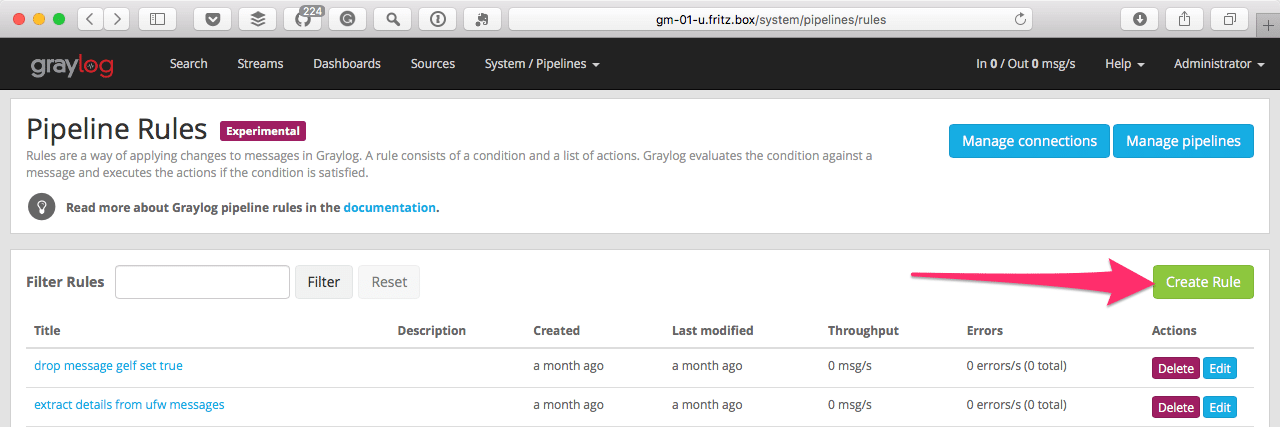

Everything starts with a rule. To create a Pipeline rule, navigate to ‘System > Pipelines’ and select ‘Manage Rules’. This is where you create a new rule.

Choose a description for this rule that explains its purpose. See our example below:

    rule "mysql: extract slow query log"
    when
        // check if the field "type" is present and, if so, whether its content is "mysql-slow"
        // the rule continues only if both conditions are true
        has_field("type") && to_string($message.type) == "mysql-slow"
    then
        // get the message as a string into a variable to work with
        let message
        // thanks to https://marketplace.graylog.org/addons/39986996-08dc-4ea7-8154-a4cca889d2b0
        // Grok pattern is used to get fields and values
        // only fields that are named will be in the final message
        let action = grok(pattern: "(?s) User@Host: (?:%{USERNAME:mysql
        // all extracted fields will be written to the message
        set_fields(action);
    end

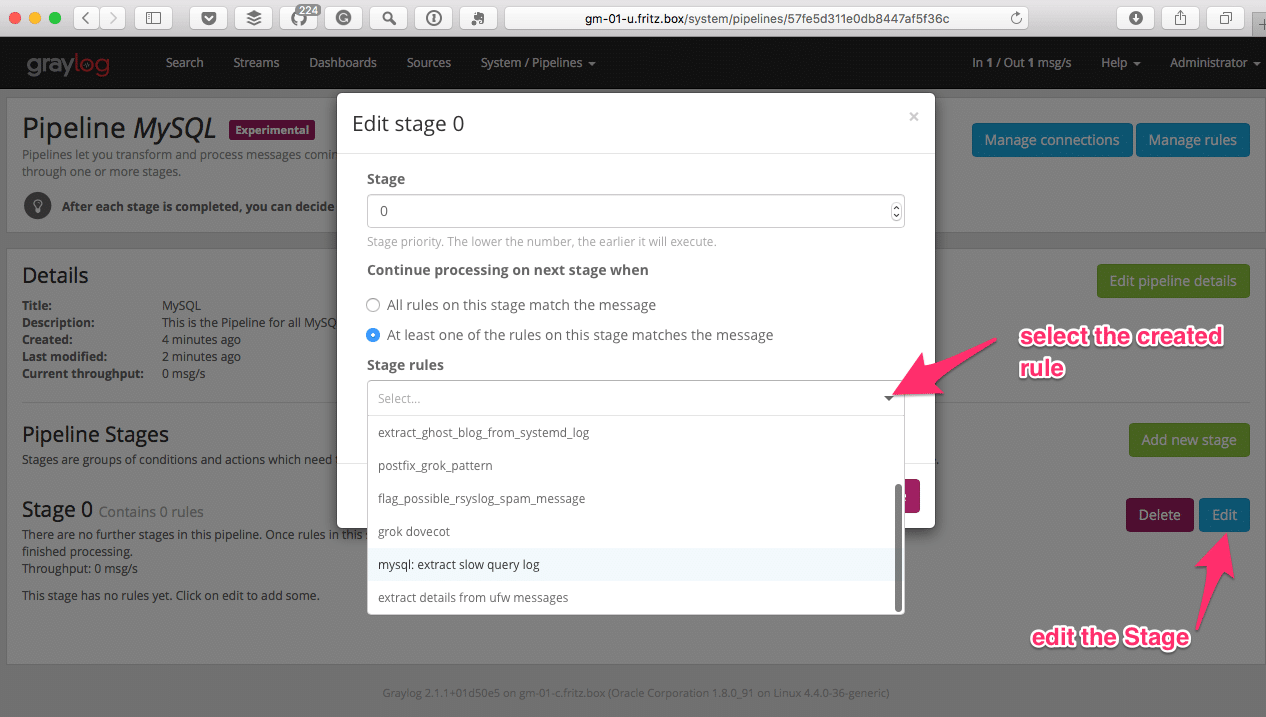
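If you want to experiment with this kind of extraction outside of Graylog, the idea can be sketched in Python with named regex groups. This is not the Grok pattern from the rule, and it is not how Graylog evaluates Grok. The field names (user, host, query_time and so on) and the sample entry are assumptions made for this example.

```python
import re

# Hypothetical field names; the Grok pattern used in the rule may name them differently.
ENTRY_PATTERN = re.compile(
    r"# User@Host: (?P<user>\w+)\[[^\]]*\] @ (?P<host>\S*) \[(?P<ip>[^\]]*)\].*?"
    r"# Query_time: (?P<query_time>[\d.]+)\s+Lock_time: (?P<lock_time>[\d.]+)\s+"
    r"Rows_sent: (?P<rows_sent>\d+)\s+Rows_examined: (?P<rows_examined>\d+)\s+"
    r"(?P<query>.*)",
    re.DOTALL,  # let "." span line breaks, since the message is multiline
)

# Invented multiline message, shaped like a MySQL slow query log entry.
SAMPLE_ENTRY = """\
# User@Host: app[app] @ localhost []  Id:     3
# Query_time: 2.000231  Lock_time: 0.000000 Rows_sent: 1  Rows_examined: 0
SET timestamp=1488362400;
SELECT SLEEP(2);
"""


def extract_fields(message):
    """Return the named fields of a slow query entry, or an empty dict if nothing matches."""
    match = ENTRY_PATTERN.search(message)
    if match is None:
        return {}
    fields = match.groupdict()
    # Convert numeric values so they can be used in range queries and charts.
    fields["query_time"] = float(fields["query_time"])
    fields["lock_time"] = float(fields["lock_time"])
    fields["rows_sent"] = int(fields["rows_sent"])
    fields["rows_examined"] = int(fields["rows_examined"])
    fields["query"] = fields["query"].strip()
    return fields


fields = extract_fields(SAMPLE_ENTRY)
for name, value in fields.items():
    print(f"{name}: {value!r}")
```

As in the pipeline rule, only the named groups end up as fields. Everything the pattern skips over, such as the connection Id, is left out of the result.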

Next, we need to add this rule to a Pipeline, so select Manage pipelines on the top right. Add a new pipeline and give it a name and a description. Then edit Stage 0, select our previously created rule mysql: extract slow query log, and click save.

The final step now is to connect this created pipeline to our message streams. To do this, select Manage connections in the top right.

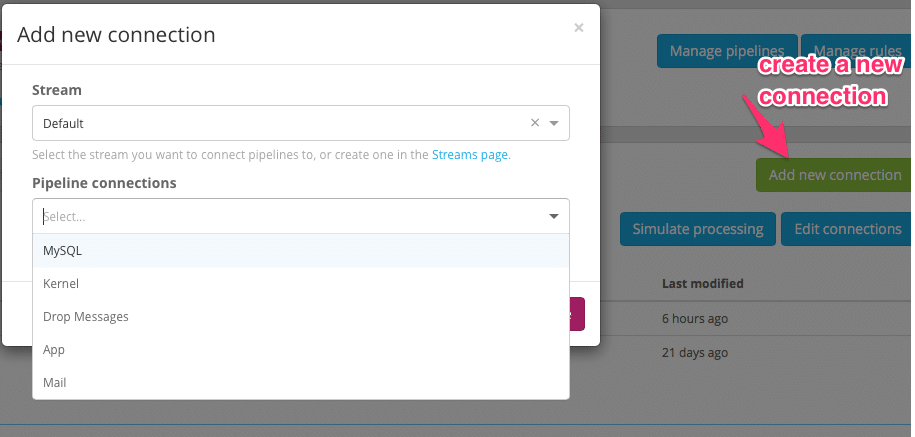

Now create a new connection. If this is your first Pipeline Connection, you can choose the Default Stream and your newly created Pipeline. Once you hit the save button, your Processing Pipeline will start to work.

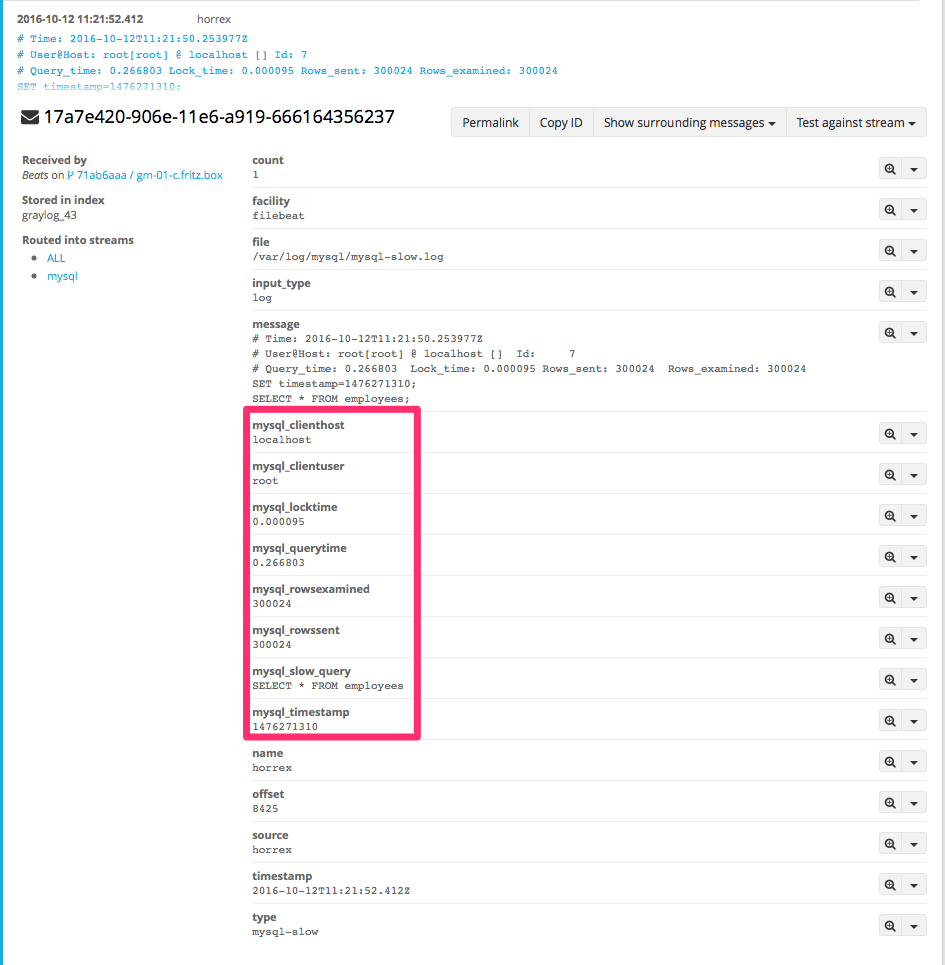

As a result, your final message in Graylog now contains the extracted fields. You can put these values into a Dashboard or run search queries on the newly added fields.

Stay tuned for our next post where we will walk through the process of expanding your single Graylog Server into a Graylog Cluster. Please be sure to follow us on Twitter and sign up for our newsletter to be alerted of the next release!

Happy Logging