Many organizations today must comply with strict data privacy regulations. These regulations can clash with the needs of security, application and operations teams, who rely on detailed log information. This how-to guide walks you through redacting message fields for privacy purposes.

At Graylog, many of the organizations that use our tool log sensitive data that may contain personally identifiable information, health-related data or financial data. To comply with data privacy laws, this information often has to be redacted or hidden from many of the tool's end users.

I’m going to walk through a simple way to use processing pipelines to scrub personally identifiable information from a log message so that it is visible only to an elevated Graylog user account.

Caution: To achieve this, we need to duplicate the message. This increases the amount of data written to OpenSearch, which may affect licensing or storage requirements.
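To get a feel for the extra volume, here is a rough back-of-the-envelope sketch in Python. It assumes each redacted clone is about the same size as its original, so every matching message is stored roughly twice. The function name and the 50 GB/day and 40% figures are hypothetical inputs, not measurements.

```python
def estimate_daily_volume_gb(base_gb: float, match_fraction: float) -> float:
    """Rough estimate of daily indexed volume once redacted clones are added.

    base_gb: current daily volume before cloning (hypothetical input)
    match_fraction: share of messages the rule clones, between 0 and 1
    Assumes each clone is roughly the same size as its original.
    """
    if not 0.0 <= match_fraction <= 1.0:
        raise ValueError("match_fraction must be between 0 and 1")
    # Every matching message is stored twice: the original and its redacted copy.
    return base_gb * (1.0 + match_fraction)


# Example: 50 GB/day today, with 40% of messages carrying a user_name field.
print(round(estimate_daily_volume_gb(50.0, 0.4), 2))  # 70.0
```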

Configuration

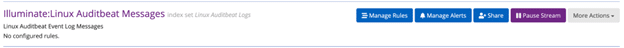

In my lab environment I have Auditbeat running on my host machine. Log messages are sent to a Graylog Illuminate stream called “Illuminate:Linux Auditbeat Messages”.

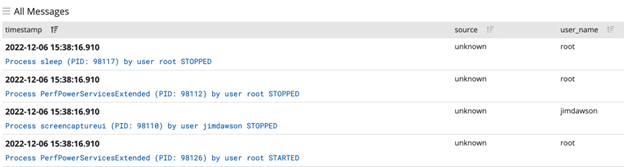

In these messages my username appears twice: first in the user_name field and again in the message field.

Pipeline Rule

For privacy purposes I am going to redact these usernames and route the messages into a separate stream, “Auditbeat Redacted”. I’ll retain the unredacted message in the “Illuminate:Linux Auditbeat Messages” stream. We’ll then restrict access to each of these streams.

To achieve this, we need a pipeline rule that creates a copy of the message, edits the copy's contents, routes it into the new stream and removes the copy from the original stream.

Here is the complete pipeline rule; I’ll walk through it section by section:

```
rule "redact_usernames"
when
  // check whether the message has the username field and hasn't already been redacted
  has_field("user_name") AND NOT contains(to_string($message.user_name), "REDACTED")
then
  // clone the message
  let cloned_mess = clone_message();
  // grab the username and replace it in the message component
  let x = to_string($message.user_name);
  let new_field = replace(to_string(cloned_mess.message), x, "REDACTED");
  set_field(field: "message", value: new_field, message: cloned_mess);
  // replace the username field with REDACTED
  set_field(field: "user_name", value: "REDACTED", message: cloned_mess);
  // route into Auditbeat Redacted stream
  route_to_stream(id: "637e24115833463dd73bf617", message: cloned_mess, remove_from_default: true);
  // remove from original stream
  remove_from_stream(id: "638f5d7cacb74d540a215aa9", message: cloned_mess);
end
```

Identify The Message

The first step in the rule is to identify the messages we want to modify. We do this by matching messages that have the username field and by checking that the message hasn’t already been modified. This check is important, and I’ll explain why in the next section:

```
when
  // check whether the message has the username field and hasn't already been redacted
  has_field("user_name") AND NOT contains(to_string($message.user_name), "REDACTED")
```

Clone The Message

Once we have identified a message to process, we clone it.

IMPORTANT: Cloning a message creates an exact copy, but the copy is given a new message ID. From the processing pipeline's point of view, the clone has not been processed yet, so it flows through the pipeline as a newly seen message. Without the check in the when block, the clone would match the rule again and we would end up in an infinite loop of cloning the same message:

```
// clone the message
let cloned_mess = clone_message();
```
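The loop is easier to see in a toy model. The Python sketch below is not Graylog code: it assumes a simple queue in which every clone is fed back into the pipeline as a new message, and the function and parameter names are hypothetical. With the guard, the first clone no longer matches and processing stops; without it, a clone is produced on every pass until the artificial max_steps cap is reached.

```python
from collections import deque


def run_pipeline(first: dict, use_guard: bool, max_steps: int = 10) -> int:
    """Toy model of a pipeline rule that clones matching messages.

    Each clone is queued again, because the pipeline treats it as a newly
    seen message. Returns the number of clones created before the queue
    drains or max_steps messages have been processed.
    """
    queue = deque([first])
    clones = 0
    steps = 0
    while queue and steps < max_steps:
        steps += 1
        msg = queue.popleft()
        # has_field("user_name")
        matches = "user_name" in msg
        if use_guard:
            # AND NOT contains(to_string($message.user_name), "REDACTED")
            matches = matches and "REDACTED" not in str(msg["user_name"])
        if matches:
            clone = dict(msg)  # copy of the fields (the new message ID is not modelled)
            clone["user_name"] = "REDACTED"
            clones += 1
            queue.append(clone)  # the clone re-enters the pipeline
    return clones


print(run_pipeline({"user_name": "alice"}, use_guard=True))   # 1
print(run_pipeline({"user_name": "alice"}, use_guard=False))  # 10, stopped only by max_steps
```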

Because the message field in the log also contains the username, we first redact it there before replacing the user_name field itself. I use the user_name value from the original message ($message) to find the username, but I replace the text in the message field of the clone, cloned_mess:

```
// grab the username and replace it in the message component
let x = to_string($message.user_name);
let new_field = replace(to_string(cloned_mess.message), x, "REDACTED");
set_field(field: "message", value: new_field, message: cloned_mess);
```
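One thing to keep in mind is that replace() performs plain substring replacement, so a short username can also be replaced inside unrelated words or paths. The Python sketch below (not Graylog code; the function names and the sample log line are hypothetical) contrasts plain replacement with a variant that matches the username only when it is not surrounded by letters, digits or underscores. If that matters for your data, a regex-based function such as Graylog's regex_replace could be used in a similar way, though that rule is not shown here.

```python
import re


def redact_plain(text: str, username: str) -> str:
    """Plain substring replacement, analogous to the rule's replace() call."""
    if not username:
        # Python's str.replace with an empty search string would insert the
        # replacement between every character, so leave the text untouched.
        return text
    return text.replace(username, "REDACTED")


def redact_whole_word(text: str, username: str) -> str:
    """Replace the username only where it is not part of a longer word."""
    if not username:
        return text
    # (?<!\w) and (?!\w): no letter, digit or underscore directly before/after
    pattern = r"(?<!\w)" + re.escape(username) + r"(?!\w)"
    return re.sub(pattern, "REDACTED", text)


line = "User ann opened /home/ann/planning.txt"
print(redact_plain(line, "ann"))       # User REDACTED opened /home/REDACTED/plREDACTEDing.txt
print(redact_whole_word(line, "ann"))  # User REDACTED opened /home/REDACTED/planning.txt
```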

We then replace the username field with “REDACTED”:

```
// replace the username field with REDACTED
set_field(field: "user_name", value: "REDACTED", message: cloned_mess);
```

Stream Routing

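Finally, we route the clone into the Auditbeat Redacted stream and remove it from the original stream. Setting remove_from_default to true also takes the clone out of the Default Stream: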

```
  // route into Auditbeat Redacted stream
  route_to_stream(id: "637e24115833463dd73bf617", message: cloned_mess, remove_from_default: true);
  // remove from original stream
  remove_from_stream(id: "638f5d7cacb74d540a215aa9", message: cloned_mess);
end
```
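To see why both calls are needed, here is a small Python model of the clone's stream membership. It is an illustration, not Graylog code. It assumes the clone starts out in the same streams as the original message (which is why the rule has to remove it from the original stream) and uses "default" as a hypothetical stand-in for the Default Stream's ID.

```python
# Stream IDs from the rule; "default" is a placeholder for the Default Stream.
DEFAULT_STREAM = "default"
ORIGINAL_STREAM = "638f5d7cacb74d540a215aa9"  # Illuminate:Linux Auditbeat Messages
REDACTED_STREAM = "637e24115833463dd73bf617"  # Auditbeat Redacted


def route_clone(inherited_streams: set[str]) -> set[str]:
    """Return the streams the redacted clone ends up in."""
    streams = set(inherited_streams)
    # route_to_stream(id: REDACTED_STREAM, ..., remove_from_default: true)
    streams.add(REDACTED_STREAM)
    streams.discard(DEFAULT_STREAM)
    # remove_from_stream(id: ORIGINAL_STREAM, ...)
    streams.discard(ORIGINAL_STREAM)
    return streams


print(route_clone({DEFAULT_STREAM, ORIGINAL_STREAM}))  # {'637e24115833463dd73bf617'}
```

The original message is not touched by these calls, so it stays in the Illuminate:Linux Auditbeat Messages stream with its username intact.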

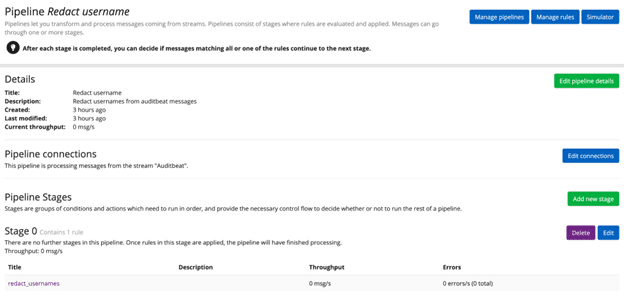

Once we have written the rule, we need to apply it to our Auditbeat stream. Create a new pipeline, make sure the relevant stream is selected in the Pipeline Connections, and apply the rule at an appropriate stage. In my case I have only one rule, so I apply it at Stage 0:

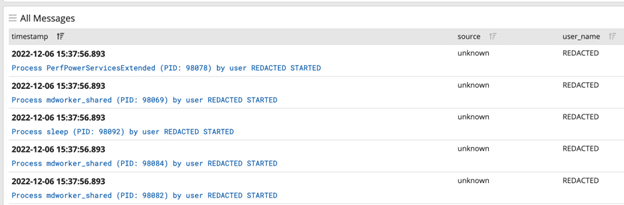

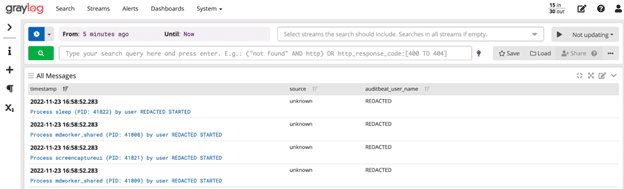

Search And Share

If we now go to the Search page, we should see the non-redacted and redacted fields when switching between the Illuminate:Linux Auditbeat Messages stream and the Auditbeat Redacted stream:

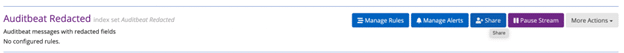

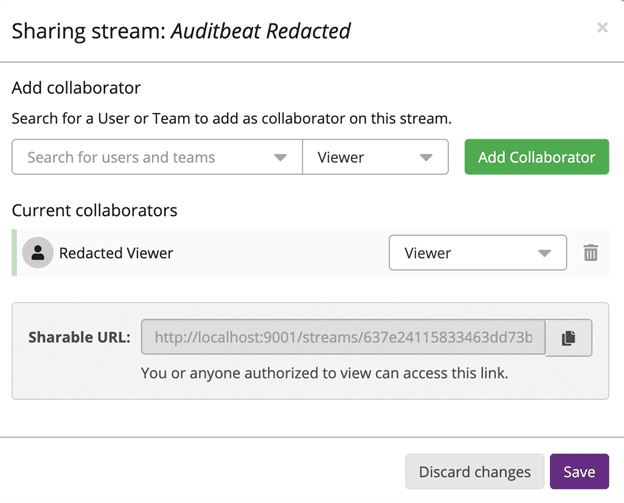

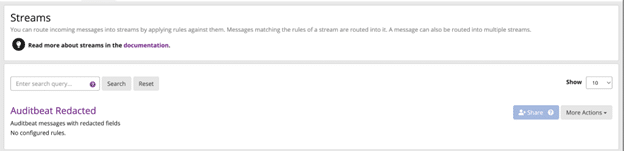

We can now share these streams with the relevant user accounts. In my example I have created a test account for an analyst who is only allowed to view the Auditbeat Redacted stream. On the Streams page I can click Share and assign this user Viewer rights to that stream.

If we log in as this user, we can see that they have access only to the Auditbeat Redacted stream.

Additional Thoughts

Finally, with Graylog Operations and Graylog Security, you can also audit which users access sensitive data inside Graylog, for even more control and oversight.

As you can see, processing pipelines are a very powerful way to modify, enrich and filter your log messages. If there are particularly novel or complex pipelines that you think would be useful to the rest of the community, please share them on the Graylog Marketplace.