Recently, I explored the case for Graylog as an outstanding means of aggregating the specialized training data needed to build a successful, customized artificial intelligence (AI) project.

Well, that’s true, of course. My larger point, though, was that Graylog is a powerful and flexible solution applicable to a very broad range of use cases (of which AI development is just one). This is because Graylog gives organizations faster, more automated, and more centralized insight into what’s happening in the IT infrastructure, what that means, and what to do about it than most organizations have ever had.

Let’s explore some scenarios to illustrate what I mean by that. One important point: these are all directly inspired by real-world situations that were reported to us by Graylog customers. And while they differ enormously from one another, they still only scratch the surface of the value Graylog can create.

Demonstrate regulatory compliance and address PR challenges

Picture the following scenario: a privileged insider such as an IT director leaves your company. Subsequently there are media reports that the company was hacked (which are correct) and that certain types of sensitive customer data were accessed by a criminal organization. A predictable PR storm follows.

The company is now in an extraordinarily defensive situation, having to respond to inquiries from both the media and current or prospective customers. It must address all kinds of concerns and answer many questions which may or may not have a rational or factual foundation. Everyone involved, including company executives, wants to know exactly which information was hacked, what the consequences might be, and what’s being done about it.

Making this situation still worse: the IT director who quit shortly before the hack reaches out to the media, claiming that the problem was even worse than has been reported. Based on direct personal experience, or so his account goes, much more sensitive data was accessible to the hackers, and the odds are good it was accessed too.

On the heels of all this, the state attorney general expresses concern, reaches out to key executives, and it appears likely a formal investigation will follow. Subsequent lawsuits by various customers appear inevitable. Federal issues may come into play if it turns out regulations pertaining to data management were violated. The full impact of all this on the company’s brand is hard to assess, but it’s likely to be enormously negative.

Fortunately, the new IT director, fully cognizant of Graylog’s power to aggregate and analyze relevant logs from all the applicable systems, is able to demonstrate that the escalating media narrative is in fact wrong about the scale of the breach.

While it’s true customer data was accessed, Graylog analysis shows that the data involved was only a tiny percentage of the whole. In fact, it was only three hundred customer names and their contact information, and it did not include their credit card numbers. Furthermore, all three hundred have already been notified of the full extent of their exposure. This means the former IT director’s speculation in the media was simply not accurate, and the true extent of the problem was less than had been reported, not greater.

Such an objective, data-driven conclusion satisfies both the media and the attorney general, and they collectively lose interest in any subsequent investigation. The federal government never becomes involved at all. And the potential customer lawsuits that seemed so likely to emerge… don’t.

What Graylog delivered in this daunting scenario, therefore, was the power to shape the public story through irrefutable evidence, shut down an emerging government investigation, and reassure and preserve the customer base. At a business level, such an outcome, given such a problem, reflects really extraordinary value.

Troubleshoot or optimize DevOps projects such as micro services

Micro services are among the more interesting and promising developments in enterprise software in recent years. Yet they also bring new challenges of various kinds, not least among them challenges involving process transparency and centralized management.

Fortunately, it’s in just these areas that Graylog can deliver new power.

How? Begin with the basic concept of micro services as a logical evolution of Service Oriented Architecture (SOA). Essentially, the idea is that instead of being driven by large monolithic applications created by a large development team, services are made up of much smaller services (micro services), each of which delivers a logical subset of the total functionality. These are loosely coupled and lightweight, and they rely on a small number of standard protocols to route, manipulate, and manage data as it moves through the service as a whole.

Beyond the tech ramifications, the organizational ramifications are substantial too. That’s because each of these micro services can be developed by its own very small, very agile development team, operating independently of all the others and yet in full confidence that the project as a whole will work as intended. Such an approach gives the organization exceptional new power to optimize or scale any subset of the service, via the smallest, most efficient allocation of requisite resources, as opposed to having to scale the entire codebase. It also means code can more easily be reused, and the company can stop reinventing the wheel unnecessarily.

The management challenges of a micro services implementation, however, often become complex due to the diversification involved. Instead of one codebase there are many. Instead of one development team, there are many. And quite often, instead of one system or logical cluster of systems that deliver the service, there are many, distributed unpredictably across a large and complex total IT infrastructure.

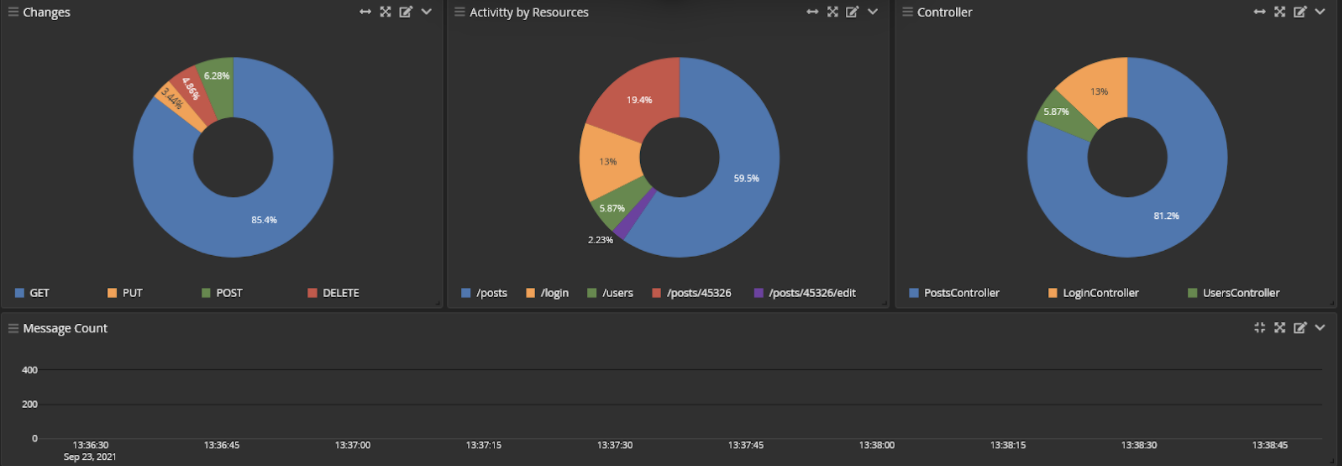

What can Graylog do to simplify such management issues? Exactly what you’d think: it aggregates the relevant system and application logs, centralizing them for analysis, visualization, and reporting. It brings micro services, however complex, under a single pane of management glass.

This means the technical complexity of the micro services implementation is abstracted away. What’s left behind is the data needed to understand and improve performance, all of which is now in a single logical place (the Graylog repository).

Queries can then be run against that data to determine whether, and why, performance shortfalls occur, which error conditions apply (or don’t), and whether discovered root issues are due to the production environment (handled by Ops) or the code itself for any given micro service (handled by Dev). If the problem is in fact the code, then isolating the specific micro service responsible is fairly simple as a result. So is handing off the problem to that micro service’s development team along with any applicable logs and notes.
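To make that triage idea concrete, here is a minimal sketch in plain Python. It does not use Graylog's own query language or API; it simply assumes log records have already been centralized and exported as dictionaries. The field names (`service`, `level`, `origin`) and the sample records are hypothetical, chosen only for illustration.

```python
from collections import Counter

# Hypothetical records, as they might look after export from a central log store.
# The "origin" field (code vs. environment) is an assumption made for this sketch.
LOGS = [
    {"service": "cart", "level": "ERROR", "origin": "code"},
    {"service": "cart", "level": "ERROR", "origin": "code"},
    {"service": "cart", "level": "ERROR", "origin": "environment"},
    {"service": "search", "level": "ERROR", "origin": "environment"},
    {"service": "search", "level": "INFO", "origin": "code"},
    {"service": "payments", "level": "INFO", "origin": "code"},
]


def errors_by_service(records):
    """Count ERROR-level records per micro service."""
    return Counter(r["service"] for r in records if r["level"] == "ERROR")


def likely_owner(records, service):
    """Suggest Dev or Ops for a service, based on where most of its errors originate."""
    origins = Counter(
        r["origin"]
        for r in records
        if r["service"] == service and r["level"] == "ERROR"
    )
    if not origins:
        return None  # no errors recorded for this service
    most_common_origin = origins.most_common(1)[0][0]
    return "Dev" if most_common_origin == "code" else "Ops"


# Find the noisiest service, then decide which team should look at it first.
worst_service, error_count = errors_by_service(LOGS).most_common(1)[0]
owner = likely_owner(LOGS, worst_service)
```

In a real deployment the grouping and filtering would be done by queries against the central repository rather than in application code, but the logic is the same: group errors by service, then look at where those errors come from.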

In this context, then, Graylog is essentially the crystal ball needed to answer key micro services questions that pertain to Dev, Ops, or both (and such questions are constantly coming up). As a result, micro services are more likely to deliver the expected value and perform better while running. And when problems do occur, tracing and resolving them is far easier than it would be without Graylog.

Maximize business and service resilience

Beyond micro service performance degradation, what happens when entire services fail outright? The speed and accuracy of the troubleshooting and resolution process can make a tremendous difference in cases where a service is high-priority. Graylog both accelerates and informs that process in a wide variety of ways.

An inventory service used by an online retailer, for instance, will surely be stressed during holiday season when consumer purchases spike by an order of magnitude. If that service completely fails, customers literally can’t look up and buy what they want to buy. The business impact will be devastating for every hour that such a situation persists. Every day of downtime could easily cost the company millions.

It’s also sometimes the case that a service failure appears to be of one type, but is actually another type altogether. If the organization can’t easily distinguish the one from the other, that confusion will inevitably slow a proper response and magnify the business impact even more.

Suppose a public-facing Web application stops responding to customers because it’s having to deal with ten or twenty times more incoming requests than its infrastructure was ever designed to support.

What caused that kind of problem? Is it, as some would assume, due to a distributed denial of service (DDoS) attack conducted by hackers? That’s certainly the way DDoS attacks often manifest, after all. They simply overwhelm an outward-facing resource like a Web site.

However, before IT jumps into action based on that assumption, it might be best to investigate (very quickly) the various alternative possibilities. Graylog, due to its feature set and exceptional performance, is a great way to conduct such an investigation.

It was in just such a situation that one Graylog customer found that it wasn’t a denial of service attack at all. Instead, it was simply a misconfigured Apache web server owned and managed by the same company. Graylog made this apparent by providing instant access to logs that revealed the incoming Web application requests all had the same IP address.

Subsequently, it became clear that the address fell within an IP range owned by the company. It was then an easy matter to use Graylog to pinpoint the exact server involved. As it turned out, that server had just received an improperly installed security update, which in turn created unwanted side effects (to say the least). The DDoS nightmare the company feared turned out not to exist at all, and the Web application was restored to proper working order very quickly.
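The two checks in that investigation (does one address dominate the traffic, and does it belong to us?) are easy to sketch. The example below uses only Python's standard library and is not Graylog functionality; the network range, the addresses, and the 90% threshold are all placeholder assumptions.

```python
from collections import Counter
from ipaddress import ip_address, ip_network

# Assumed company-owned range; a private range is used here as a placeholder.
COMPANY_NETWORKS = [ip_network("10.20.0.0/16")]


def dominant_source(source_ips, share=0.9):
    """Return the IP behind at least `share` of all requests, or None if no IP dominates."""
    if not source_ips:
        return None
    ip, count = Counter(source_ips).most_common(1)[0]
    return ip if count / len(source_ips) >= share else None


def is_internal(ip, networks=COMPANY_NETWORKS):
    """True if the address falls inside one of the company's own ranges."""
    addr = ip_address(ip)
    return any(addr in net for net in networks)


# Hypothetical source addresses pulled from web access logs.
requests = ["10.20.3.7"] * 95 + ["203.0.113.5"] * 5

suspect = dominant_source(requests)
looks_like_internal_fault = suspect is not None and is_internal(suspect)
```

A genuinely distributed attack would typically show many source addresses with no single dominant one, so `dominant_source` returning `None` is itself a useful signal.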

In general, Graylog is a superb tool to keep IT apprised of service, application, or Web site status. It’s easy to configure it to look for and track a change in responsiveness for any subset of the infrastructure deemed high priority. Graylog becomes, in this context, a window into performance that can be raised or lowered to suit the requirements, delivering the right information at the right level of detail. IT can, in looking through Graylog, observe the whole technical forest… or a subsection of the forest… or individual trees, whichever perspective is most relevant under the circumstances.

Graylog can also be configured to take action if service responsiveness falls below a certain threshold, or disappears altogether. That might mean sending e-mails to appropriate team members, placing phone calls, or even generating text alerts.
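As a rough illustration of the decision behind such an alert, consider the sketch below. It is plain Python rather than Graylog configuration, and the 500 ms threshold, the status names, and the contact details are all assumptions invented for the example.

```python
from statistics import mean


def evaluate(samples_ms, threshold_ms=500.0):
    """Classify recent response-time samples; None means the service did not answer."""
    answered = [s for s in samples_ms if s is not None]
    if not answered:
        return "down"  # no successful responses at all
    if mean(answered) > threshold_ms:
        return "degraded"  # responding, but slower than the threshold
    return "ok"


def notifications(status, contacts):
    """Pick (channel, recipient) pairs: e-mail for degradation, every channel for an outage."""
    if status == "ok":
        return []
    channels = ["email"] if status == "degraded" else ["email", "phone", "sms"]
    return [(ch, r) for ch in channels for r in contacts.get(ch, [])]


# Hypothetical on-call contacts.
CONTACTS = {"email": ["ops@example.com"], "sms": ["+15550100"]}

status = evaluate([900, 1100, None])
to_send = notifications(status, CONTACTS)
```

Separating the classification step from the notification step keeps the escalation policy easy to change without touching the threshold logic.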

And beyond this functionality it would also be possible to configure Graylog in more sophisticated ways. For instance, if a primary server became unresponsive, Graylog could be configured to send an activation message to a failover server, bringing it online, and another message to reconfigure a router to redirect incoming traffic. Finally, it could notify the IT team that all this had taken place so the team could investigate and resolve the original issue.
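The ordering of those three steps matters, and a short sketch shows why. Again, this is plain Python under stated assumptions, not a Graylog feature: the three callables stand in for whatever mechanism would actually start the failover server, reconfigure the router, and message the team.

```python
def handle_unresponsive_primary(activate_failover, redirect_traffic, notify_team):
    """Run the failover steps in order; stop and report if any step fails."""
    steps = [
        ("activate failover", activate_failover),
        ("redirect traffic", redirect_traffic),
    ]
    completed = []
    for name, action in steps:
        try:
            action()
        except Exception as exc:
            # Do not redirect traffic to a server that never came online.
            notify_team(f"Failover aborted at '{name}': {exc}")
            return completed
        completed.append(name)
    notify_team("Failover complete: " + ", ".join(completed))
    return completed


# Stand-in actions for the sketch; real ones would call out to the infrastructure.
messages = []
result = handle_unresponsive_primary(lambda: None, lambda: None, messages.append)
```

Whatever happens, the team is notified last, so the message always reflects how far the automation actually got.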

If you’re facing unusual challenges and you’d like to learn how Graylog can help, feel free to reach out to us — we’re always glad to explain key features that may apply and answer any questions.