Google Cloud Logging is the service for managing, analyzing, and troubleshooting logs generated by Google Cloud services and applications. It offers a centralized platform for collecting, processing, and storing logs, making it easier to monitor and understand a system’s behavior. As cloud-based applications grow in complexity and scale, logging becomes more critical. By collecting Google Cloud logs, users can gain insight into their system’s performance, detect and diagnose issues quickly, and support compliance with regulatory requirements. Logging is therefore an indispensable tool for maintaining the health and reliability of cloud-based systems, and organizations should prioritize effective logging practices.

A few people have asked us about this recently. They have seen that Graylog has the Google input (https://go2docs.graylog.org/5-0/getting_in_log_data/google_input.html) but aren’t quite sure what is pulled in from the Google Cloud Platform (GCP). This article covers that and provides a step-by-step guide on how to set it up in your environment.

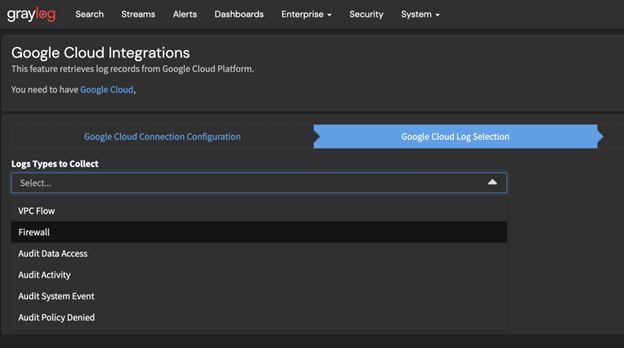

We will focus on the audit logging of Google Cloud itself. However, when the input refers to Google Cloud Logs, it supports the sources below.

- VPC Flow Logs (https://cloud.google.com/vpc/docs/using-flow-logs)

- Firewall Logs (https://cloud.google.com/vpc/docs/firewall-rules-logging)

- Admin Activity Audit Logs (https://cloud.google.com/logging/docs/audit)

- Data Access Audit Logs (https://cloud.google.com/logging/docs/audit#data-access)

- System Event Audit Logs (https://cloud.google.com/logging/docs/audit#system-event)

- Policy Denied Audit Logs (https://cloud.google.com/logging/docs/audit#policy_denied)

Note: the Google input also supports Google Workspace and Gmail log events, but those are not covered in this article.

How does Google Cloud Log?

Logs and Buckets

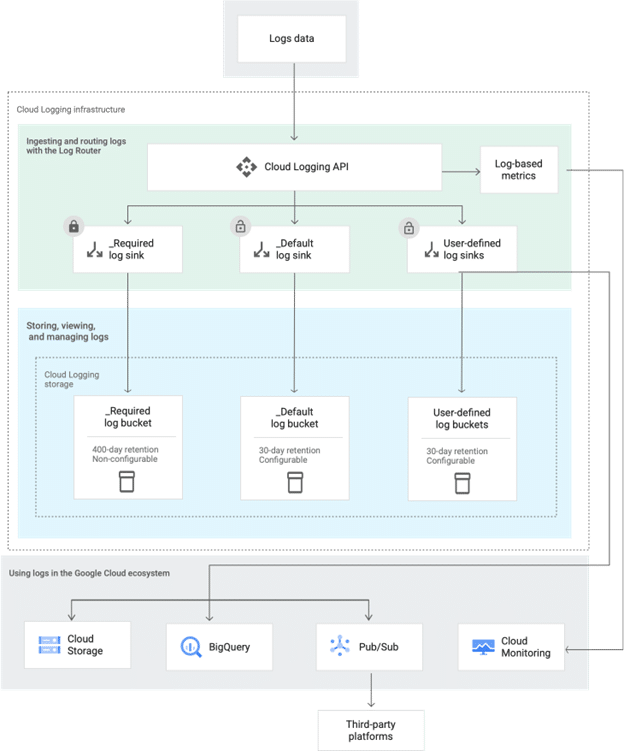

Before we set up the input, it’s good to know how it works, so let’s start with a brief overview of Google Cloud Logging. In general, logging is made fairly simple in GCP. At a high level, logs are routed into buckets within the logging service. Logs can also be routed to additional destinations such as Google BigQuery.

Let’s dive into a bit more detail. As I mentioned, logs are stored in log buckets in the cloud logging service. Logs that are enabled by default are stored in a log bucket called _Required for 400 days, a retention period that is not configurable. The logs routed to this bucket by default are:

- Admin Activity

- System Event

- Access Transparency logs.

Logs for Different Services

Logs that you enable for various services or for auditing, such as Data Access logs, are in general routed to the _Default log bucket, which stores logs for 30 days unless you change its retention.

Log Routing

Routing is the process of getting logs from point A to point B. In Google Cloud, logs can be routed not just to custom log buckets but also to other destinations such as Pub/Sub topics, BigQuery datasets or Cloud Storage buckets. This process is built on sinks. Sinks essentially act as routers for the logs: they work independently of each other (so there are no rule conflicts) and can use inclusion or exclusion filters to decide which logs get processed and sent to a destination.
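To make the sink model a little more concrete, here is a minimal sketch in Python. It is an illustration only and does not use the Cloud Logging API: the `Sink` class, the predicate-style filters and the sample entries are all hypothetical. It shows each sink evaluating its own inclusion and exclusion filters independently, so a single entry can reach several destinations.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

LogEntry = Dict[str, str]
Predicate = Callable[[LogEntry], bool]


@dataclass
class Sink:
    """Hypothetical model of a log sink; not a real Cloud Logging class."""
    name: str
    destination: str
    inclusion: Predicate
    exclusions: List[Predicate] = field(default_factory=list)

    def accepts(self, entry: LogEntry) -> bool:
        # An entry is routed when it matches the inclusion filter
        # and matches none of the exclusion filters.
        if not self.inclusion(entry):
            return False
        return not any(excluded(entry) for excluded in self.exclusions)


def route(entry: LogEntry, sinks: List[Sink]) -> List[str]:
    # Every sink is evaluated on its own, so sinks cannot conflict.
    return [sink.destination for sink in sinks if sink.accepts(entry)]


sinks = [
    Sink("keep-all-but-debug", "log-bucket",
         inclusion=lambda e: True,
         exclusions=[lambda e: e["severity"] == "DEBUG"]),
    Sink("audit-to-bigquery", "bigquery-dataset",
         inclusion=lambda e: "cloudaudit.googleapis.com" in e["logName"]),
]

audit_entry = {
    "logName": "projects/p/logs/cloudaudit.googleapis.com%2Factivity",
    "severity": "NOTICE",
}
debug_entry = {"logName": "projects/p/logs/app", "severity": "DEBUG"}

print(route(audit_entry, sinks))  # reaches both destinations
print(route(debug_entry, sinks))  # excluded by one sink, not included by the other
```

In the real service the filters are written in the Logging query language rather than as Python functions, but the evaluation order is the same idea.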

This logging process is visualized in the below image provided in the Google documentation (https://cloud.google.com/logging/docs/routing/overview)

To summarize with this additional context, Google Cloud stores logs in log buckets within the cloud logging service. By default, all logs are routed to one of two buckets, and specific logs can be routed to additional destinations outside of the logging service, such as Pub/Sub topics, BigQuery datasets or Cloud Storage buckets, using sinks.

The last important thing to know is that the logging service works at an organizational level. This allows logs in Google Cloud to be aggregated across all projects in an organization. Pretty neat!

So what does the Google Input do?

How does Graylog use Google Cloud Logging to ingest these logs then? The short answer is that it routes logs, based on inclusion filters, to a BigQuery Dataset. The input periodically pulls data from that dataset and then cleans it up. I won’t explain BigQuery in the same detail as cloud logging, but just know it’s a Google-managed data warehouse service which you can read about here: https://cloud.google.com/bigquery

So what the input does is:

- It uses a service account, which must be created before setting up the input.

- It checks whether a BigQuery Dataset called “gcpgraylogdataset” exists and creates it if it does not.

- Note: currently the input does not support selecting a specific region for the BigQuery Dataset; it will be created as a multi-region US resource (there is a workaround for this, which I will explain at the end of this article).

- It checks whether the “gcpgraylogsink” sink exists in the cloud logging service; if it does not, it creates the sink and sets the inclusion filters.

- It then starts polling periodically, pulling data from the BigQuery table. Once the data has been pulled and sent to Graylog’s on-disk journal, the input removes the processed data from the BigQuery dataset.
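The steps above can be sketched as a small simulation. This is not Graylog’s implementation and it makes no calls to GCP: `FakeProject`, `ensure_resources` and `poll_once` are hypothetical names, and the dataset is just an in-memory list. The sketch only illustrates the create-if-missing checks and the pull-then-delete polling cycle.

```python
from typing import Dict, List

DATASET = "gcpgraylogdataset"
SINK = "gcpgraylogsink"


class FakeProject:
    """In-memory stand-in for a GCP project; purely illustrative."""

    def __init__(self) -> None:
        self.datasets: Dict[str, List[dict]] = {}
        self.sinks: Dict[str, str] = {}


def ensure_resources(project: FakeProject, inclusion_filter: str) -> None:
    # Create the dataset and the sink only when they are missing,
    # so a dataset that was created ahead of time is left alone.
    project.datasets.setdefault(DATASET, [])
    project.sinks.setdefault(SINK, inclusion_filter)


def poll_once(project: FakeProject, journal: List[dict]) -> int:
    # Pull every pending row, hand it to the journal, then remove it
    # from the dataset so it is not read again on the next poll.
    rows = project.datasets[DATASET]
    pulled = list(rows)
    journal.extend(pulled)
    rows.clear()
    return len(pulled)


project = FakeProject()
journal: List[dict] = []
ensure_resources(project, 'logName:"cloudaudit.googleapis.com"')

# Simulate the sink writing two audit entries between polls.
project.datasets[DATASET] += [{"id": 1}, {"id": 2}]

first = poll_once(project, journal)
second = poll_once(project, journal)
print(first, second, len(journal))
```

The second poll finds nothing because the first one already removed the processed rows, which is the cleanup behaviour described in the last step.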

We now understand how logging works in Google Cloud. We also know that the Graylog input uses a sink in the logging service to route logs, based on inclusion filters, to a BigQuery Dataset, and that the input pulls the logs from there.

Let’s configure it

Setting up the prerequisites

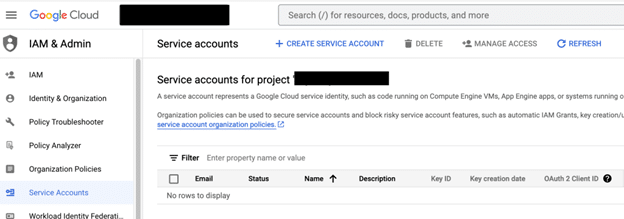

We first need to create a service account (https://cloud.google.com/iam/docs/creating-managing-service-accounts) for the input to use. If you are unfamiliar with GCP, go to the IAM & Admin section and open Service Accounts. The Create Service Account button there launches a creation wizard.

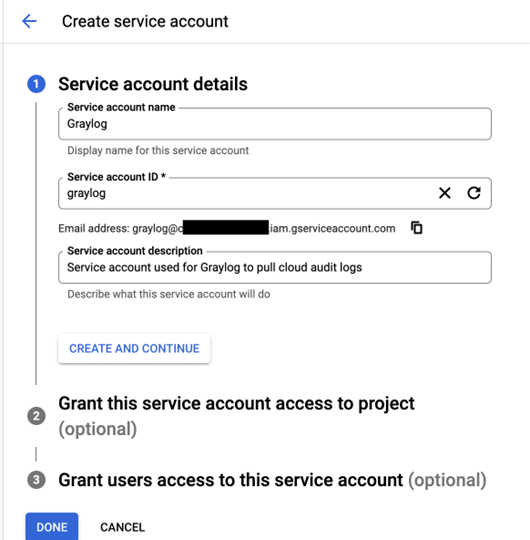

Create Name and Description

In this wizard simply give the account a name and description.

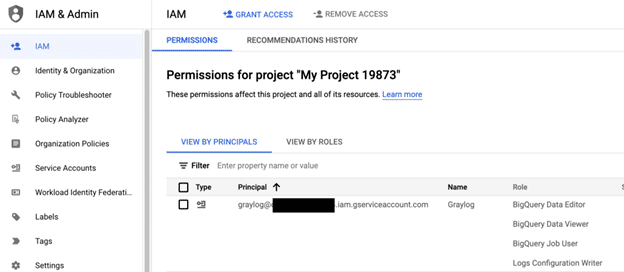

Permissions

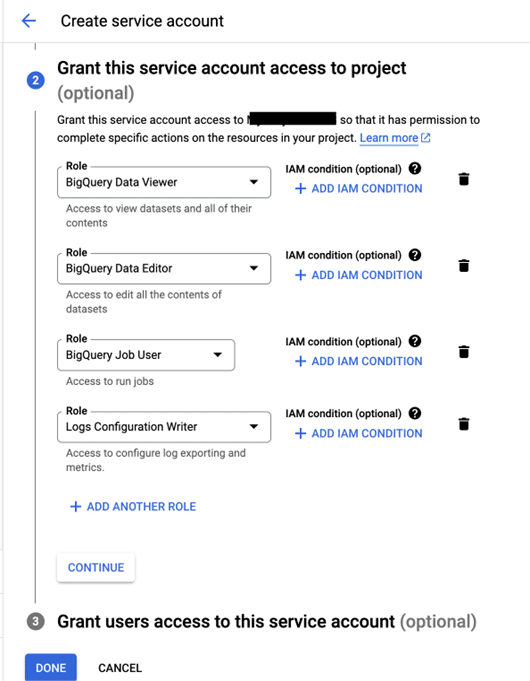

Assign it these roles:

- BigQuery Data Viewer

- BigQuery Data Editor

- BigQuery Jobs User

- Logs Configuration Writer

You can now select Done and verify the permissions of the account within the IAM section.

Success!

We have created our account and assigned the right permissions. Now we need to enable Graylog to use this account.

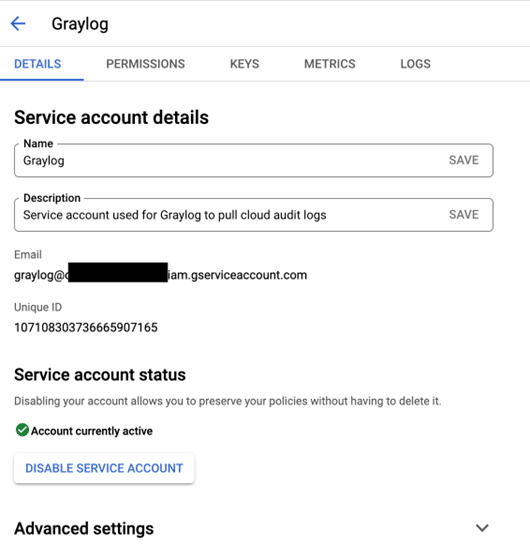

Generate a Key File For Graylog

We need to generate a key file for Graylog. Go back to the service accounts section and click on the new service account, which will take you to the account details panel. Now is a good time to write down the unique ID of the account, as you will need it when creating the input in Graylog.

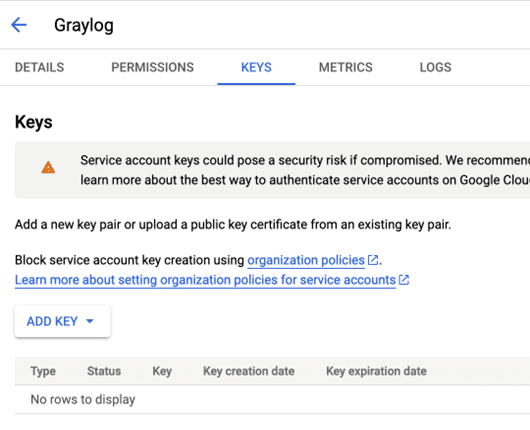

Add a new key

Go to the KEYS tab and add a new key.

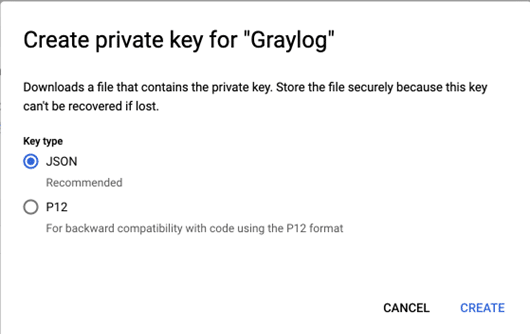

Create JSON Key

Create a new JSON Key file and store it somewhere safe.

Store the Key

Where you need to place this key depends on whether you use Graylog Cloud, run a self-managed deployment, or make use of Graylog Forwarders.

If you are running your inputs on the Graylog server itself in a self-managed deployment, then you must place this key file on the file system of the Graylog node on which the input is running.

If you are a Graylog Cloud user or are using Graylog Forwarders, then you must place the key file on the file system of the forwarder host where the input is being run.

Move the key file from the location where you saved it to the host where it needs to be, and then access your Graylog instance’s web UI.

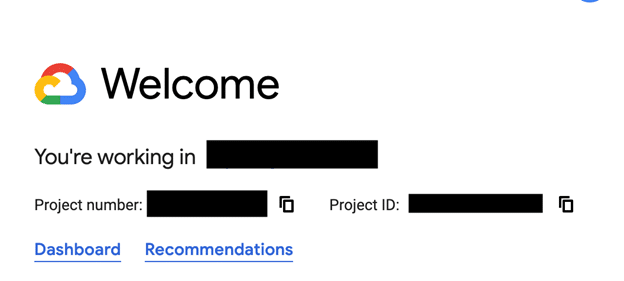

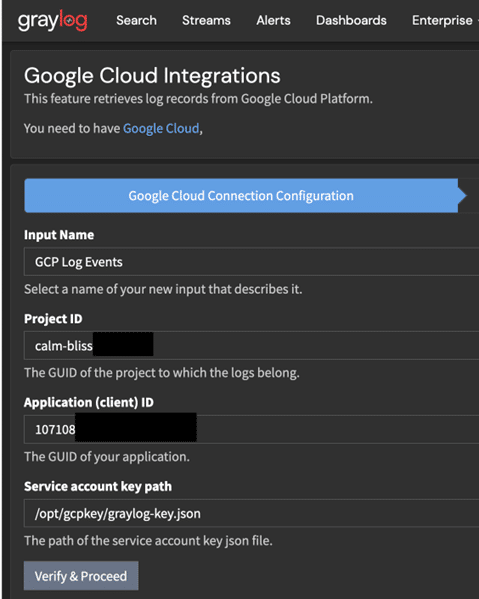

Note the Project-ID

Before you do anything though, you need one last key bit of information from your GCP console: the project ID. You can find this on the homepage of the console (which you can reach by clicking the Google Cloud icon in the top left). It is the Project ID value you require, not the project number. Add this value to your notes alongside the service account unique ID you noted down earlier.

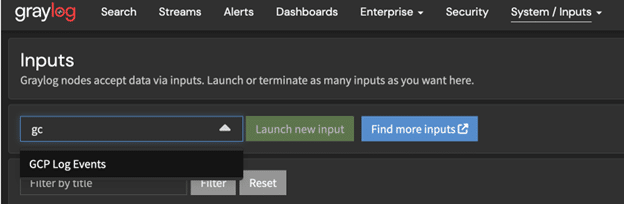

Create the Input on Graylog

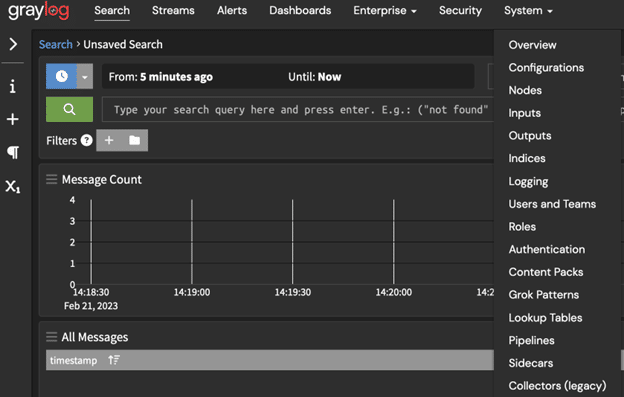

We are now ready to deploy and start our input in Graylog. We need to navigate to the inputs page. Users with forwarders will need to go to the input profile assigned to their forwarder to do this.

The inputs page can be found in the System menu. The forwarders can be found in the Enterprise menu.

You will want to launch a new GCP Log Events Input.

Now you will need the details you noted earlier. I’ve left part of this image un-obfuscated to demonstrate that the value required is the project ID, not the project number, and that the application ID is the unique ID of the service account you noted down. The path provided must be the absolute path to the key file.
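If you want to double-check these values before filling in the form, a short script can read them from the key file. This is a minimal sketch and not part of Graylog: `read_key_details` is a hypothetical helper, and it assumes the `client_id` field of the JSON key holds the same value as the unique ID shown on the service account details panel, which is worth confirming against your console. The demonstration uses a fake key written to a temporary file.

```python
import json
import os
import tempfile


def read_key_details(path: str) -> dict:
    """Return the values the input form asks for from a service account key."""
    if not os.path.isabs(path):
        raise ValueError("key file path must be absolute: " + path)
    with open(path, encoding="utf-8") as handle:
        key = json.load(handle)
    if key.get("type") != "service_account":
        raise ValueError("not a service account key file")
    return {
        "project_id": key["project_id"],
        # Assumption: client_id matches the unique ID shown in the console.
        "unique_id": key["client_id"],
        "client_email": key["client_email"],
    }


# Fake key for demonstration only; a real key also contains a private key.
fake_key = {
    "type": "service_account",
    "project_id": "example-project",
    "client_id": "123456789012345678901",
    "client_email": "graylog@example-project.iam.gserviceaccount.com",
}
with tempfile.NamedTemporaryFile("w", suffix=".json", delete=False) as tmp:
    json.dump(fake_key, tmp)

details = read_key_details(tmp.name)
os.remove(tmp.name)
print(details["project_id"], details["unique_id"])
```

Running the same function against the real key file on the Graylog node or forwarder host also confirms that the path you plan to enter is absolute and readable.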

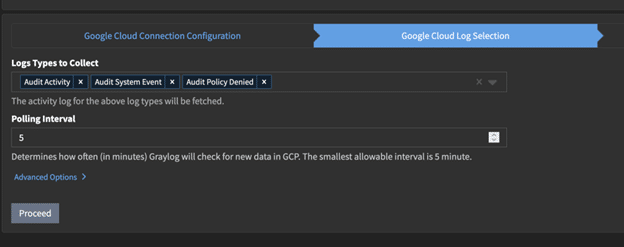

Select the Logs You Want

Next, you need to select the logs you wish to collect. Remember we explained include and exclude filters earlier? The values you choose in this selection will become include filters on the log sink within GCP.

This page also allows you to set the polling interval, or how often Graylog will check for new messages to collect. This cannot be any lower than 5 minutes.
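The two settings on this page can be sketched as follows. The log IDs in the mapping are the standard Cloud Logging names for these sources, but the filter the input actually writes to the sink may be shaped differently, so treat the output as an illustration and compare it with the sink details in GCP. The function names and the dictionary keys are hypothetical.

```python
from typing import Iterable

# Log IDs as they appear in Cloud Logging log names (URL-encoded slash).
LOG_IDS = {
    "admin_activity": "cloudaudit.googleapis.com%2Factivity",
    "data_access": "cloudaudit.googleapis.com%2Fdata_access",
    "system_event": "cloudaudit.googleapis.com%2Fsystem_event",
    "policy_denied": "cloudaudit.googleapis.com%2Fpolicy",
    "vpc_flow": "compute.googleapis.com%2Fvpc_flows",
    "firewall": "compute.googleapis.com%2Ffirewall",
}

MIN_POLL_MINUTES = 5  # the lower bound stated for the polling interval


def build_inclusion_filter(selected: Iterable[str]) -> str:
    # One clause per selected log type, combined with OR.
    clauses = [f'logName:"{LOG_IDS[name]}"' for name in selected]
    if not clauses:
        raise ValueError("select at least one log type")
    return " OR ".join(clauses)


def check_poll_interval(minutes: int) -> int:
    if minutes < MIN_POLL_MINUTES:
        raise ValueError(f"polling interval must be at least {MIN_POLL_MINUTES} minutes")
    return minutes


inclusion_filter = build_inclusion_filter(["admin_activity", "data_access"])
print(inclusion_filter)
print(check_poll_interval(5))
```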

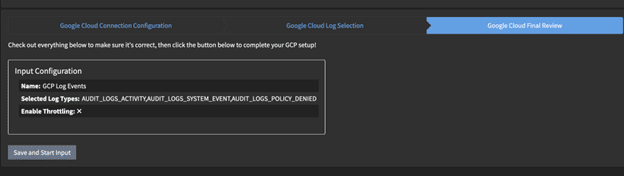

You now get a confirmation dialogue. If you are happy then you can go ahead and save and start the input.

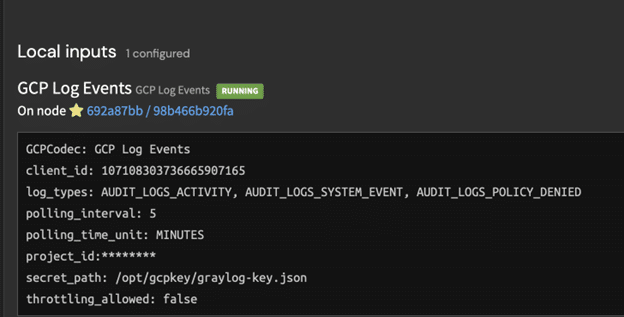

If everything was set up correctly up to this point, you should see the input running.

Note: if your account has resource restrictions in place that block US resources from being deployed, you will find the input in a failed state – more on this at the end of the blog.

Why do I not get events?

OK, so we haven’t quite finished yet. We need to jump back into GCP to do a bit of final configuration to make this work.

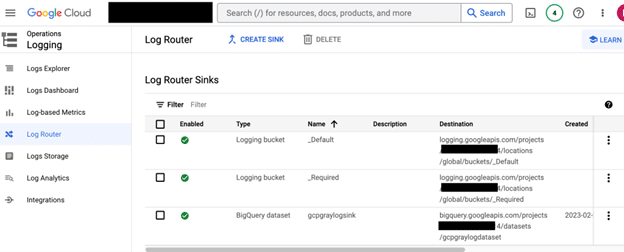

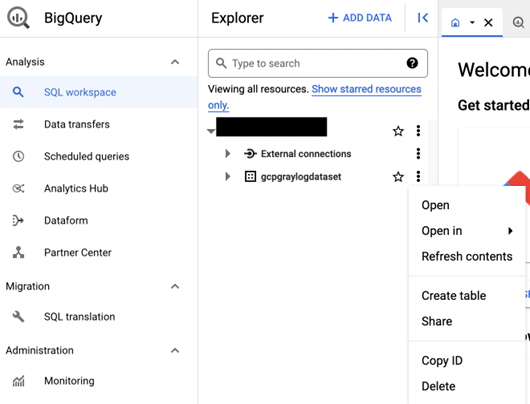

Back in the GCP console, navigate to Logging > Log Router, where you will see the log sinks for the organization. The one we are interested in is the newly created “gcpgraylogsink”.

Click on the ellipsis on the right of the sink and choose view sink details.

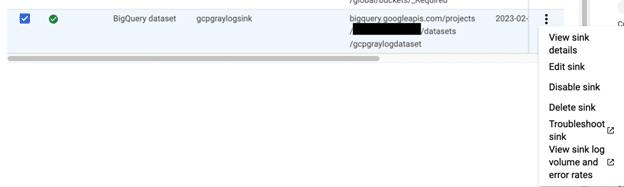

You will notice that the writer identity is not that of the service account we created earlier. This is not editable. Copy the service account name, which in this case would be “[email protected]”; the “serviceAccount:” part is just a prefix. You can also view the inclusion filters from here if you would like.

Note: if the writer identity is “none” or blank, you should not need to complete the next few steps.
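As a small illustration of the two points above, the helper below strips the “serviceAccount:” prefix and reports when no grant is needed. It is a hypothetical convenience function, not something Graylog or GCP provides, and the address in the example is made up.

```python
from typing import Optional

PREFIX = "serviceAccount:"


def principal_from_writer_identity(writer_identity: str) -> Optional[str]:
    """Return the principal to paste into the share dialog, or None if no grant is needed."""
    identity = writer_identity.strip()
    if identity == "" or identity.lower() == "none":
        # Blank or "none": the next few steps can be skipped.
        return None
    if identity.startswith(PREFIX):
        return identity[len(PREFIX):]
    return identity


# Hypothetical writer identity, for illustration only.
example = "serviceAccount:sink-writer@example-project.iam.gserviceaccount.com"
print(principal_from_writer_identity(example))
print(principal_from_writer_identity("none"))
```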

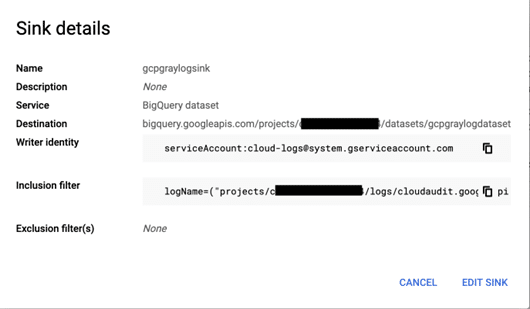

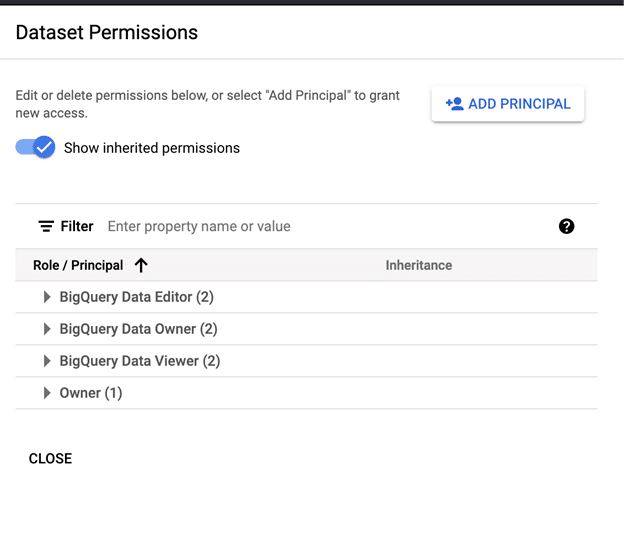

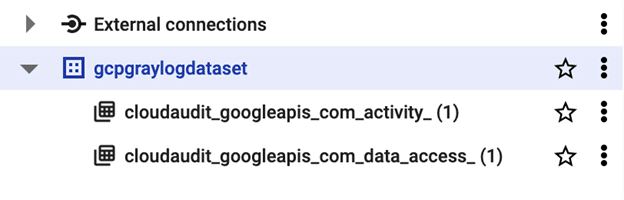

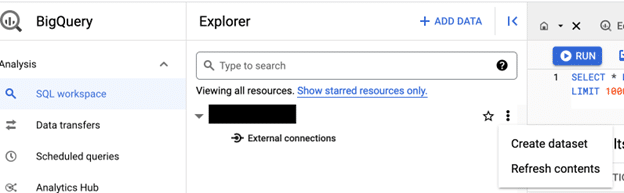

Now go to the BigQuery SQL workspace within the console, where you will see the “gcpgraylogdataset”. Notice there are no tables created? Click on the ellipsis to the right of the dataset and then select the share option. We must grant the writer identity of the log sink access to the dataset.

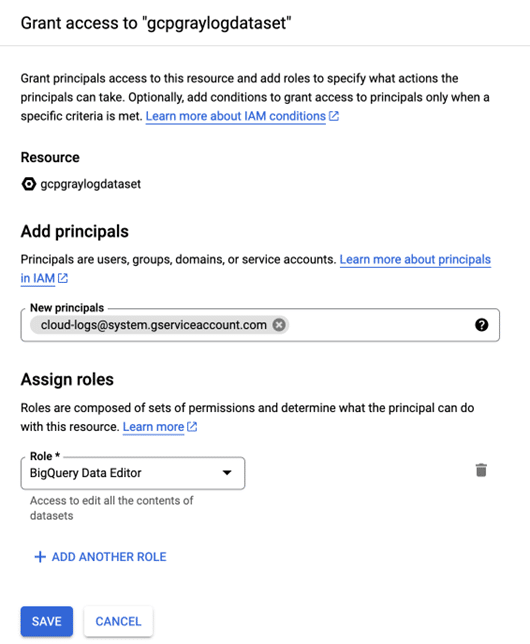

Select Add Principal.

Paste the writer identity into the principal field, assign the BigQuery Data Editor role, and save the change.

If you refresh the contents of the dataset, you will see new tables have been created.

You could even query these to verify new data is being written to the tables, for example:

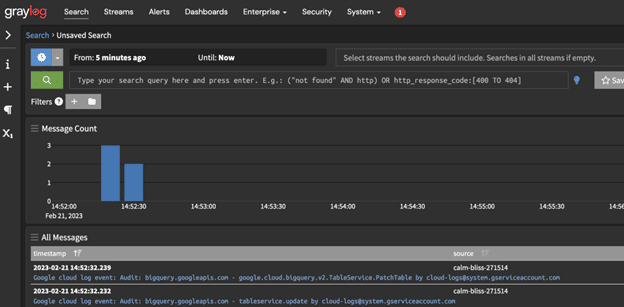

OK, so we now know that everything in GCP is working as expected. Let’s check Graylog.

Congratulations!

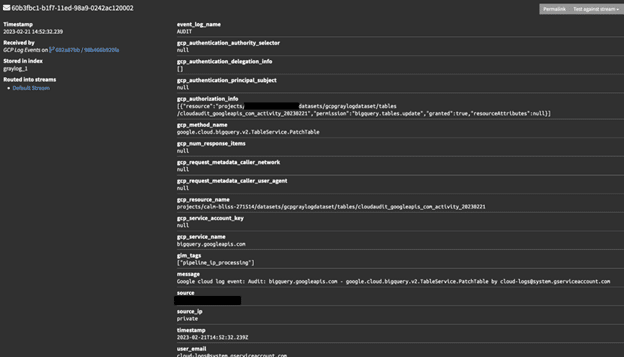

You should now see your log events within Graylog.

And the events are even parsed for you.

Deploying the BigQuery Dataset in another region

I have mentioned this several times in this blog: one thing the input does not do today is allow you to deploy the BigQuery Dataset in another region. You may remember that the input checks whether the dataset exists as part of the process. We can leverage this by deploying the dataset ahead of time in our desired region and then letting the input set up all the other parts. If you prefer, you can set up the whole thing manually and then give the service account only the BigQuery roles and not the Logs Configuration Writer role.

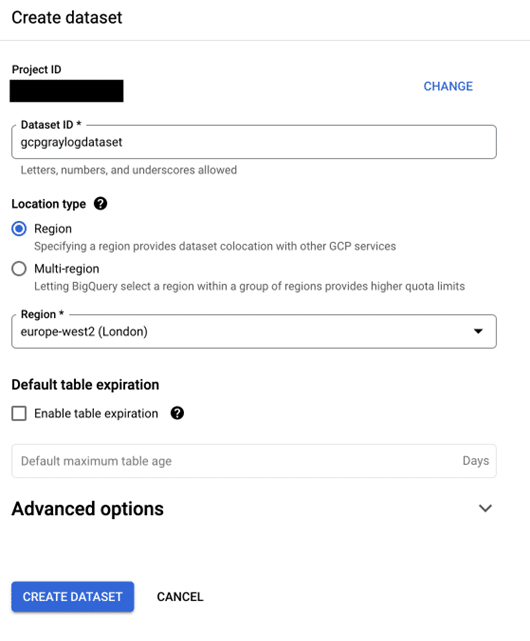

So essentially if you want to deploy this in another region you can simply go to the BigQuery SQL editor and under your project, select Create dataset.

From here make sure the Dataset ID is “gcpgraylogdataset”.

Create the dataset and then the same steps as earlier are needed to add the writer identity as a data editor of the BigQuery dataset.
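If you would rather script the dataset creation than click through the console, the `bq` command-line tool can create a dataset in a chosen location. The sketch below only builds and prints the command so you can review it before running it yourself. It assumes the `bq` CLI is installed and authenticated, and the project ID and location used here are placeholders.

```python
import shlex
from typing import List

DATASET_ID = "gcpgraylogdataset"  # the name the input looks for


def bq_mk_dataset_command(project_id: str, location: str) -> List[str]:
    # Builds: bq --location=<location> mk --dataset <project>:<dataset>
    return [
        "bq",
        f"--location={location}",
        "mk",
        "--dataset",
        f"{project_id}:{DATASET_ID}",
    ]


# Placeholder project and location; substitute your own values.
command = bq_mk_dataset_command("example-project", "europe-west2")
print(shlex.join(command))
```

The writer identity still needs the BigQuery Data Editor role on the dataset afterwards, exactly as in the console-based steps.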

Conclusions

So there we have it. This blog has provided a step-by-step guide on how to ingest your Google Cloud Audit Logs, and hopefully you now also have a better understanding of how logging works within GCP.

Feel free to check out the official documentation (https://go2docs.graylog.org/5-0/getting_in_log_data/google_input.html) for this feature and what other logs the input supports.